Storage virtualization can reduce costs, streamline IT operations, and increase infrastructure resilience. VMware’s vSAN software is one of the most popular storage virtualization platforms available today and provides administrators with a simple and robust solution for virtualizing storage in vCenter environments.

To help you get started with VMware shared storage, we’ll take a closer look at vSAN, how it works, and its key features.

What is vSAN?

vSAN is VMware’s Software-Defined Storage (SDS) solution, first released in 2014.

SDS is a concept that attempts to encapsulate the functionality and features of a traditional storage area network and storage array without the often complex and expensive hardware. vSAN allows vSphere administrators to manage the storage needs of thousands of virtual machines by using the familiar vSphere client for configuration and management, with minimal training or storage experience.

vSAN leverages locally installed drives for storage capacity alongside ESXi software, which provides the functionality and logic. vSAN competes favorably against other SDS products such as Nutanix and StarWind Virtual SAN, and it also offers readily available prebuilt hosts called vSAN Ready Nodes. vSAN is also the underlying technology in Dell’s popular turnkey solution, VxRail.

With the release of vSAN 7 Update 3, administrators can even provision storage to traditional, non-virtualized hosts via highly available NFS shares and iSCSI devices. With stretched-cluster, two-node, and ROBO (remote office/branch office) deployment options, in either All-flash or Hybrid mode, vSAN is proving to be incredibly flexible. In this article, we will cover the different deployment choices for vSAN, along with its basic requirements and configuration options.

The table below details the differences between the different vSAN editions so that you can make an informed decision on which version is best for you.

| Feature | Standard | Advanced | Enterprise | Enterprise Plus |
|---|---|---|---|---|
| Storage Policy-Based Management | * | * | * | * |
| Virtual distributed switch | * | * | * | * |
| Rack awareness | * | * | * | * |
| Software checksum | * | * | * | * |
| All-flash | * | * | * | * |
| iSCSI target service | * | * | * | * |
| IOPS limiting | * | * | * | * |
| Cloud-native storage | * | * | * | * |
| Container storage interface (CSI) | * | * | * | * |
| Shared witness | * | * | * | * |
| Deduplication and compression | | * | * | * |
| RAID 5/6 | | * | * | * |
| vROps within vCenter | | * | * | * |
| Encryption | | | * | * |
| Stretched cluster | | | * | * |
| File services | | | * | * |
| HCI mesh | | | * | * |
| Data persistence platform | | | * | * |

VMware vSAN: Hybrid vs. All-flash

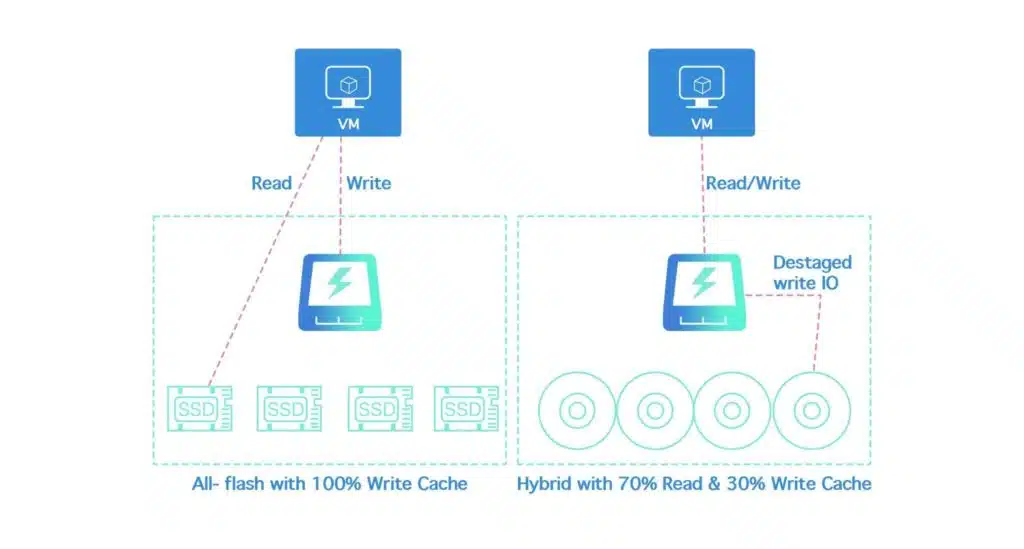

vSAN has two modes that administrators can select: “All-flash” or “Hybrid”.

Hybrid mode entails using SSD devices as cache and mechanical hard disk drives (HDDs) for persistent storage. It was the only option when vSAN was initially released. The faster SSD device services VM reads and writes in real-time. Then, vSAN logic eventually destages the data from the cache and onto the mechanical disk for long-term storage. This Hybrid approach is more economical because it leverages cheap HDDs for persistent storage but has performance tradeoffs.

All-flash mode uses SSD disks for both the caching tier and persistence tier. As a result, it significantly improves performance but increases hardware costs.

With Hybrid mode, cache capacity is split 70% for reads and 30% for writes. This 70/30 split exists because the read cache performs better when more cache is available, and writes are regularly destaged to persistent storage anyway. As a rule of thumb, the total cache for Hybrid mode should equal 10% of vSAN’s used capacity, not total capacity.
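The rule of thumb above can be turned into a quick estimate. The following is a minimal sketch, not a sizing tool: the function name `hybrid_cache_sizing` and the 20 TB example figure are hypothetical, and the percentages are simply the 10% and 70/30 values quoted above.

```python
def hybrid_cache_sizing(used_capacity_gb: float) -> dict:
    """Estimate Hybrid-mode cache from the 10% and 70/30 rules of thumb."""
    if used_capacity_gb < 0:
        raise ValueError("used capacity must be non-negative")

    # Total cache is 10% of the capacity actually consumed, not raw capacity.
    total_cache_gb = used_capacity_gb * 10 / 100

    return {
        "total_cache_gb": total_cache_gb,
        "read_cache_gb": total_cache_gb * 70 / 100,    # 70% read cache
        "write_buffer_gb": total_cache_gb * 30 / 100,  # 30% write buffer
    }


# Hypothetical cluster with 20 TB (20,000 GB) of used capacity.
sizing = hybrid_cache_sizing(20_000)
for name, value in sizing.items():
    print(f"{name}: {value:.0f} GB")
```

Treat the result as a starting point only; real sizing should follow VMware’s current design guidance for your workload.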

Conversely, because flash-based disks have an inherent reliability problem, cache is used as a 100% write tier for All-flash mode. The electrically charged cells that store data can only be written a certain number of times before they fail. By fronting all of the writes with highly write-endurant disks, we can absorb most of them before destaging them to cheaper, capacity-based SSD. This approach leads to significant cost savings because all reads are immediately directed to the persistence tier with no penalty.

Additionally, All-flash mode supports RAID 5 and RAID 6 for VMs, as well as compression and deduplication. These features add I/O overhead and are not supported in Hybrid mode. The general recommendation is to go with All-flash if you can, as it offers better performance and more features, and it future-proofs your environment.

vSAN host requirements

vSAN has a strict set of hardware requirements that extends to the driver level. If you build a cluster yourself, you should check all hardware against VMware’s hardware compatibility guide. To simplify the hardware selection process, vSAN Ready Nodes are also available ‘off the shelf’ with fully supported hardware and a guaranteed rate of IOPS.

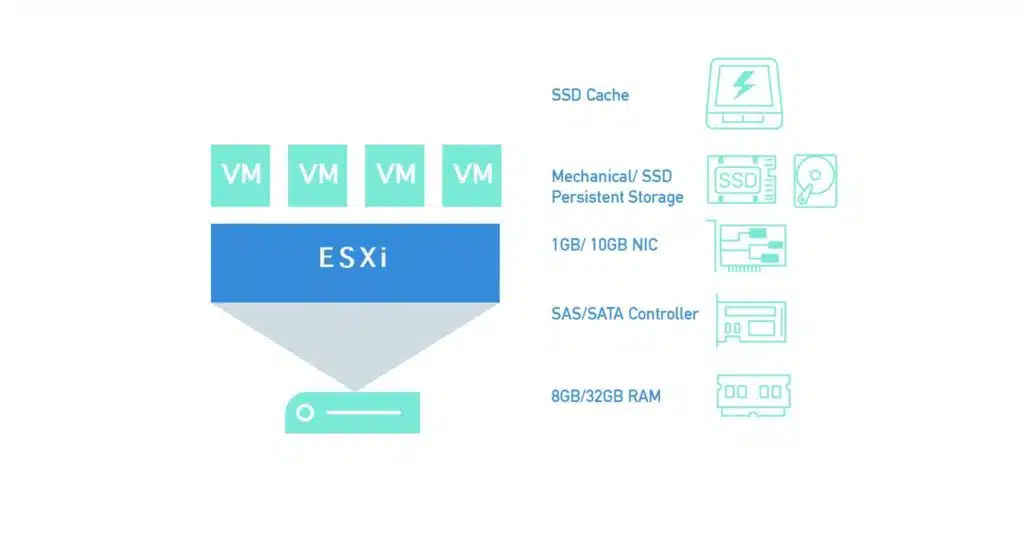

You can run vSAN across two ESXi hosts in special cases, but you will need at least three hosts for all other deployments. In general, four hosts are recommended for maintenance purposes. If you decide to select your own hardware, each host will need:

- SSD Cache: Minimum of one supported SSD for cache

- Persistent Storage: Minimum of one HDD or SSD for storage

- NIC: 1 Gbps (Hybrid) or 10 Gbps (All-flash)

- SAS/SATA Controller: Must run in passthrough mode or RAID0 for each disk

- Memory: 8-32 GB of RAM depending on the number of disks and disk groups

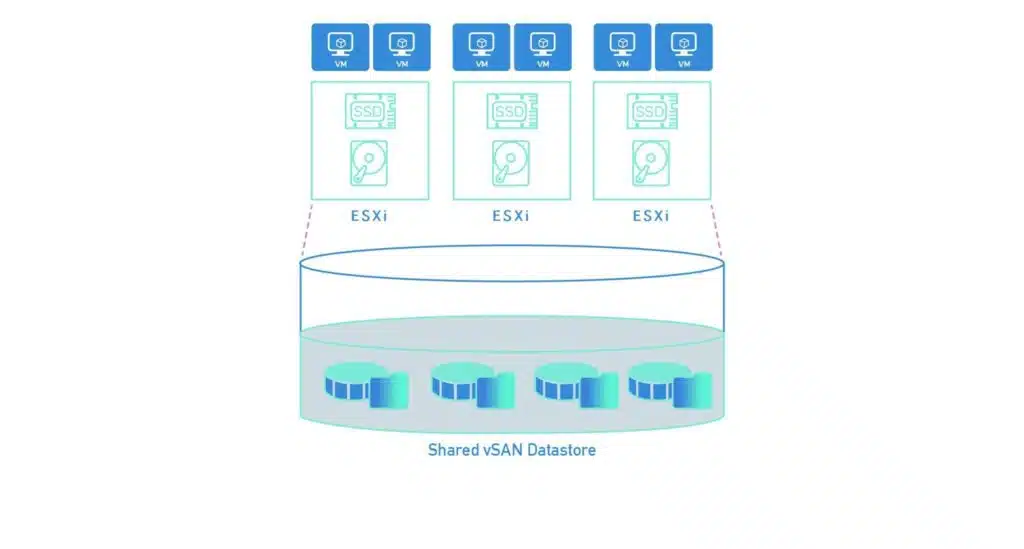

The vSAN Datastore

vSAN combines the persistent disks in your hosts over a vSAN network to create a single shared datastore. Each ESXi host has both the client and server software preinstalled, allowing virtual machines to access their disks without any special configuration. It also means that VMs do not need to run on the same hosts where their files are physically stored. The shared storage enables features such as vMotion, DRS, and HA to function without a traditional SAN.

To an administrator, the vSAN datastore looks and behaves like any other datastore, except for one big difference: it is object-based!

Object-Based Storage

Traditional file systems are limited in many ways. Because they have a set number of bits to address files and directories, there are limits to how many they can store. Furthermore, file systems generally describe files in fairly mundane ways, including details like file name, permissions, and creation date.

Object-based storage addresses these shortcomings by effectively removing the file system itself. It treats files as a series of ones and zeroes, storing them in a single ‘bucket’ of storage. Objects are stored and retrieved not via an address but with a unique identifier.

Each object has an associated descriptor, typically XML, which adds custom metadata to the object. For example, consider a healthcare system that stores data such as X-ray images. With object-based storage, all the relevant metadata (such as patient name, insurance number, doctor, blood type, and bed location) can be stored as XML data.
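To make the idea concrete, here is a minimal in-memory sketch of an object store. It is illustrative only and does not reflect how vSAN is implemented: the `ObjectStore` class and its methods are hypothetical, and a plain dictionary stands in for the XML descriptor to keep the example short.

```python
import uuid


class ObjectStore:
    """Toy object store: a flat 'bucket' keyed by unique identifier."""

    def __init__(self):
        self._objects = {}

    def put(self, data: bytes, metadata: dict) -> str:
        # Objects are addressed by a generated ID, not by a path or block address.
        object_id = str(uuid.uuid4())
        self._objects[object_id] = (data, dict(metadata))
        return object_id

    def get(self, object_id: str):
        # Returns a (data, metadata) tuple for the given identifier.
        return self._objects[object_id]

    def find(self, **criteria):
        # Return the IDs of all objects whose metadata matches every criterion.
        return [
            object_id
            for object_id, (_, metadata) in self._objects.items()
            if all(metadata.get(key) == value for key, value in criteria.items())
        ]


store = ObjectStore()
xray_id = store.put(
    b"...image bytes...",
    {"patient": "Jane Doe", "doctor": "Dr. Smith", "blood_type": "O+"},
)
print(store.find(doctor="Dr. Smith") == [xray_id])
```

The point of the sketch is that lookups can be driven by the metadata itself rather than by a directory hierarchy.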

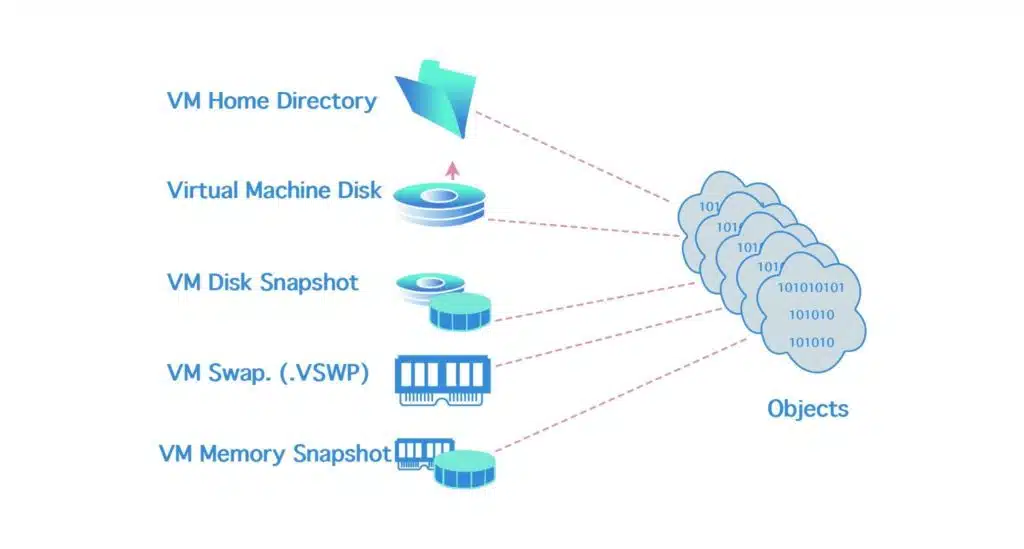

vSAN uses object-based storage and breaks the virtual machines into several objects.

Descriptive metadata defines storage policies applied to the objects and dictates how they are protected and their performance levels. A lightweight variant of VMFS called VSAN-FS is overlaid on the vSAN Datastore to enable features like HA (which requires access to specific files).

vSAN breaks virtual machine files into the following objects:

- Home Space: Includes the VM directory, VM log, and NVRAM files.

- VMDK: An object created for each virtual disk.

- Snapshot: An object created for each disk snapshot.

- Swap: An object for the VSWP disk.

- Memory Snapshot: An object for each memory snapshot.


vSAN Networking

Like any other shared storage protocol, vSAN needs network access. In our example below, an Ethernet network is used. The network is configured using a VMkernel port on a standard or distributed switch.

When you purchase a vSAN license, you gain access to a distributed switch without the need for a vSphere Enterprise Plus license. You will need a dedicated 1 Gbps network if running Hybrid mode (discussed earlier) or a 10 Gbps network for All-flash mode.

In All-flash mode configurations, the 10 Gbps vSAN network can be shared with other traffic types such as vMotion. The vSAN network can go over L2 or L3 as needed. However, keep in mind that vSAN traffic is not load-balanced over multiple NICs. NIC teaming is used for redundancy and failover.

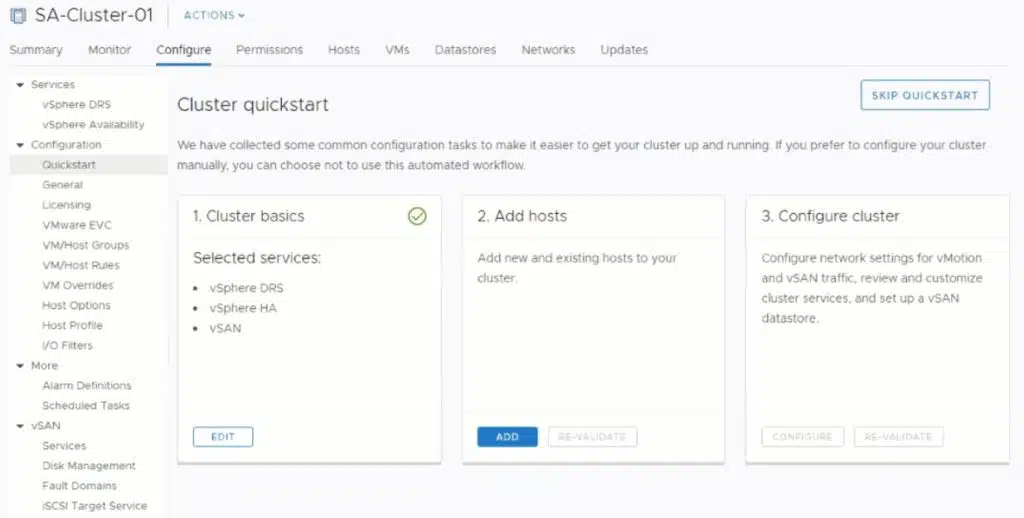

Enabling vSAN

You can enable vSAN on a pre-existing cluster by manually configuring the vSAN network and then going through a vSAN wizard. Alternatively, vSphere clusters now have a ‘Cluster Quickstart’ wizard that steps you through adding hosts to a cluster, enabling HA, DRS, and vSAN and then setting up distributed switch networking. The ‘Cluster Quickstart’ wizard is by far the best method, with the automated network configuration being particularly useful.

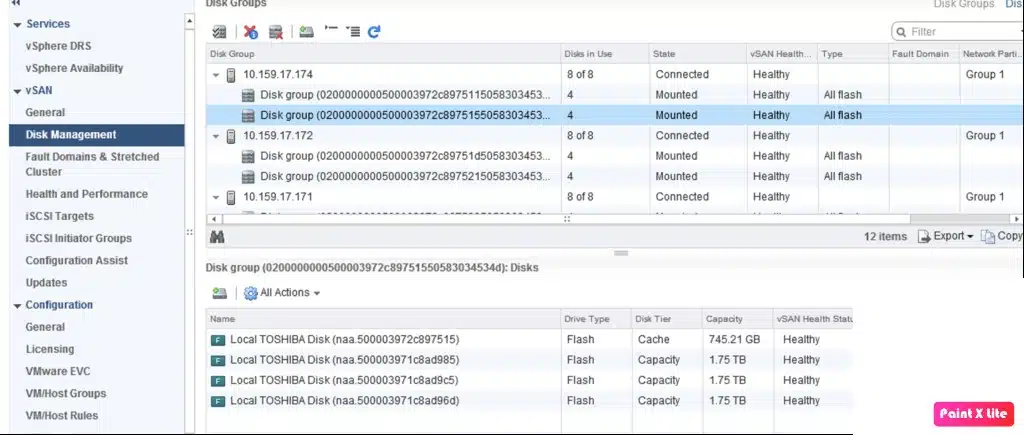

Disk Groups

One of the more important aspects of the vSAN wizard is the creation of disk groups. Each host must have at least one disk group. By placing physical disks into them, we make each disk group available for vSAN to consume.

Disk Groups will be either Hybrid or All-flash; they cannot be both.

Each group must contain:

- Exactly one SSD for cache

- At least one additional disk (SSD or HDD) for persistent storage, with a maximum of seven such disks per group

We can create up to five disk groups per host. The physical disks can be either internal storage or external disks directly connected to the host.
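The disk group rules above lend themselves to a simple pre-flight check. The sketch below is a hypothetical helper, not part of any VMware tooling: the `DiskGroup` class, the function names, and the `"SSD"`/`"HDD"` strings are all assumptions made for illustration, and the limits are the ones stated in this section.

```python
from dataclasses import dataclass
from typing import List

MAX_CAPACITY_DISKS_PER_GROUP = 7
MAX_DISK_GROUPS_PER_HOST = 5


@dataclass
class DiskGroup:
    cache_disks: List[str]     # e.g. ["SSD"]
    capacity_disks: List[str]  # e.g. ["HDD", "HDD"] or ["SSD", "SSD"]


def validate_disk_group(group: DiskGroup) -> List[str]:
    """Return a list of rule violations for one disk group (empty if valid)."""
    errors = []
    if group.cache_disks != ["SSD"]:
        errors.append("a disk group needs exactly one SSD for cache")
    if not 1 <= len(group.capacity_disks) <= MAX_CAPACITY_DISKS_PER_GROUP:
        errors.append("a disk group needs between one and seven capacity disks")
    if len(set(group.capacity_disks)) > 1:
        # A group is either Hybrid (all HDD) or All-flash (all SSD), never both.
        errors.append("capacity disks must be all HDD or all SSD")
    return errors


def validate_host(groups: List[DiskGroup]) -> List[str]:
    """Check the per-host limit, then every disk group on the host."""
    errors = []
    if not 1 <= len(groups) <= MAX_DISK_GROUPS_PER_HOST:
        errors.append("a host needs between one and five disk groups")
    for index, group in enumerate(groups):
        errors.extend(f"group {index}: {e}" for e in validate_disk_group(group))
    return errors


hybrid_host = [DiskGroup(["SSD"], ["HDD", "HDD"]), DiskGroup(["SSD"], ["HDD"])]
print(validate_host(hybrid_host))
```

An empty list means the proposed layout satisfies the rules described here; it says nothing about hardware compatibility, which still needs to be checked against VMware’s compatibility guide.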

The choice of using one or multiple disk groups is a matter of performance, capacity, and availability. As each group only contains one cache disk, it is essentially a single point of failure (although cached data is always replicated to other hosts). A cache failure will remove the entire disk group from use until it is replaced, so it is better to have more than one. Additional disk groups also mean we have more aggregate cache and thus better performance. vSAN best practice is to have at least two disk groups, each managed by a separate disk controller.

Virtual Machine Storage Policies

Virtual machines placed into a vSAN Datastore automatically inherit a “Default Storage Policy” that dictates how their objects are protected and distributed across the vSAN cluster. You can edit or replace the default policy at the VM level.

The default policy uses mirroring to protect VM objects against a single failure and has no performance-related options set. Different policies can also be applied to different disks of the same VM.

Policies contain many options, so we will cover the most important you need to know below, along with an example in the following section.

- Failure tolerance method: How objects are protected. Options are Mirroring or RAID.

- Primary failures to tolerate (PFTT): Number of host/device failures to tolerate.

- Number of disk stripes per object: How many hard drives objects are spread across.
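As a rough illustration of how these options interact, the sketch below models a mirroring policy and derives what it costs. It assumes the commonly cited vSAN figures for mirroring, which are not stated in this article: tolerating n failures requires n + 1 full copies of the data and at least 2n + 1 hosts. The `MirroringPolicy` class is hypothetical and covers mirroring only, not RAID 5/6.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MirroringPolicy:
    """Hypothetical model of a mirroring-based storage policy."""

    failures_to_tolerate: int = 1
    stripes_per_object: int = 1

    @property
    def replicas(self) -> int:
        # Assumption: n failures to tolerate means n + 1 full copies.
        return self.failures_to_tolerate + 1

    @property
    def minimum_hosts(self) -> int:
        # Assumption: 2n + 1 hosts, so a majority survives n failures.
        return 2 * self.failures_to_tolerate + 1

    def raw_capacity_gb(self, vmdk_size_gb: float) -> float:
        # Every replica is a full copy, so raw usage scales with replicas.
        return vmdk_size_gb * self.replicas


default_like = MirroringPolicy(failures_to_tolerate=1)
print(default_like.replicas, default_like.minimum_hosts)
print(default_like.raw_capacity_gb(100))
```

With one failure to tolerate, a 100 GB disk consumes roughly 200 GB of raw capacity under this model, which is the main cost of mirroring compared with RAID 5/6.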

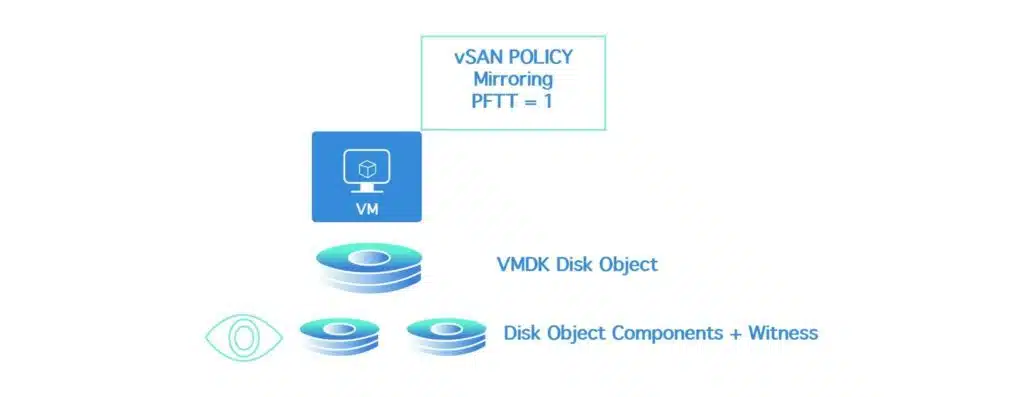

Now, let’s review some important terminology and concepts. As we know, the original virtual machine files are converted into ‘objects.’ A VMDK is an object, a swap file is an object, and so on. When an object is mirrored, or broken down into RAID segments, those “sub-objects” are referred to as “components”. As a result, a single VMDK object that is protected by a mirror is broken down into two components, each a mirror of the other. In reality, mirrored objects are also composed of another type of component called a “witness component”.

The witness component — which is only a few MBs in size and contains no VM data — is designed to act as a tie-breaker in the event of a network partition. The VM will fail over to an ESXi host in the partition containing the most components. As a result, the number of components that any object has must be an odd number and the witness component helps with this.
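The tie-breaker behavior can be sketched in a few lines. This is a simplified model, assuming every component carries exactly one vote and that an object stays accessible only in a partition holding more than half of its components; the function name and host names are hypothetical.

```python
from typing import Dict, List, Optional, Set


def accessible_partition(
    component_hosts: Dict[str, str], partitions: List[Set[str]]
) -> Optional[int]:
    """Return the index of the partition that holds a majority of components.

    component_hosts maps a component name to the host that stores it.
    partitions is a list of host sets that can still talk to each other.
    """
    total = len(component_hosts)
    for index, hosts in enumerate(partitions):
        votes = sum(1 for host in component_hosts.values() if host in hosts)
        if votes * 2 > total:  # strict majority
            return index
    return None  # no partition has a majority, so the object is unavailable


vmdk = {"replica-a": "esxi-01", "replica-b": "esxi-02", "witness": "esxi-03"}

# esxi-01 is cut off; esxi-02 and esxi-03 hold two of the three components.
print(accessible_partition(vmdk, [{"esxi-01"}, {"esxi-02", "esxi-03"}]))
```

Without the witness, each side of that split would hold exactly one of two components and neither could claim a majority, which is why an odd component count matters.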

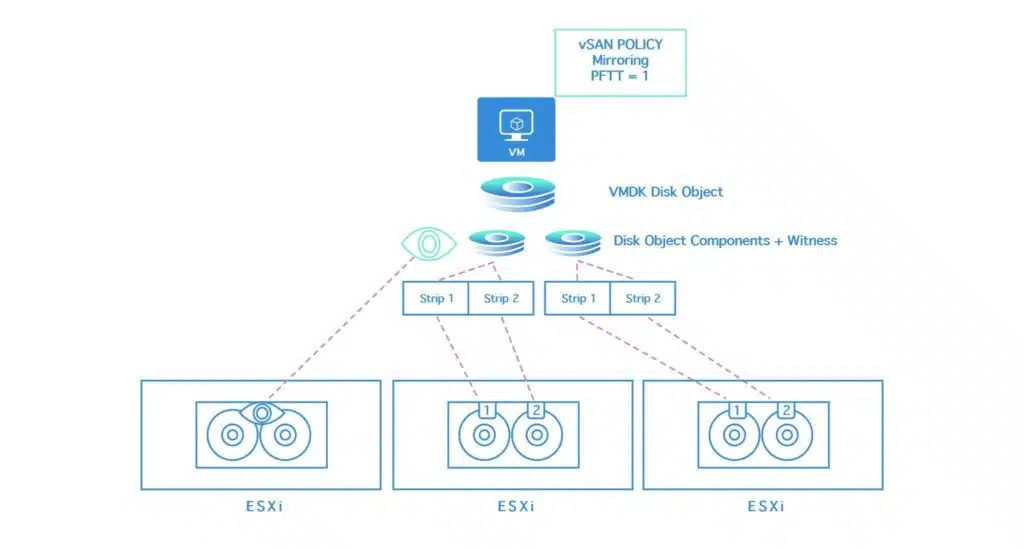

vSAN Example: VM with Mirroring, PFTT set to 1 and Disk Stripes to 2

Let’s assume we configure vSAN settings of:

- Failure tolerance method: Mirroring

- Primary failures to tolerate (PFTT): 1

- Number of disk stripes per object: 2

With these options set, the virtual machine’s VMDK object will be broken down into three components consisting of two mirrors and one witness.

These components will be distributed across three hosts for availability. Component placement is fully automated and not configurable. The two mirror components will now be broken down further into stripes and written across two different, randomly selected disks.

The striping breaks the component down into 1MB chunks or ‘strips,’ with each strip going across a different disk. The witness component will not be striped because it is infrequently accessed, so striping would provide no benefit. This behavior also explains why vSAN typically requires a minimum of three hosts.
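The strip layout can be illustrated with a little arithmetic. The sketch below assumes a plain round-robin (RAID 0 style) placement of 1MB strips across the stripe width; vSAN’s actual on-disk layout is not documented in this article, and the `locate` function is hypothetical.

```python
STRIP_SIZE = 1024 * 1024  # 1MB strips, as described above


def locate(offset: int, stripe_width: int = 2):
    """Map a byte offset within a component to (disk index, offset on disk)."""
    if offset < 0 or stripe_width < 1:
        raise ValueError("offset must be >= 0 and stripe_width >= 1")

    strip_index = offset // STRIP_SIZE          # which 1MB strip overall
    disk_index = strip_index % stripe_width     # strips alternate across disks
    strip_on_disk = strip_index // stripe_width  # how many strips precede it on that disk
    offset_on_disk = strip_on_disk * STRIP_SIZE + offset % STRIP_SIZE
    return disk_index, offset_on_disk


# With two stripes, consecutive strips alternate between disk 0 and disk 1.
for megabyte in range(4):
    print(megabyte, locate(megabyte * STRIP_SIZE))
```

Because consecutive strips land on different disks, a large sequential read or write can be serviced by both disks at once, which is where the potential performance gain comes from.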

Logically, because the striping distributes the component over two disks, it should perform better than a single disk. However, that isn’t always the case. Because the vSAN logic will automatically handle the striping and may stripe across disks within the same disk group, striping may not lead to a performance boost in some cases.

For example, because the same cache SSD fronts all the disks within a disk group, striping across disks in that group provides no practical improvement. You can work around this by setting the number of stripes greater than the number of persistent disks in a disk group. The maximum number of stripes per object is 12.

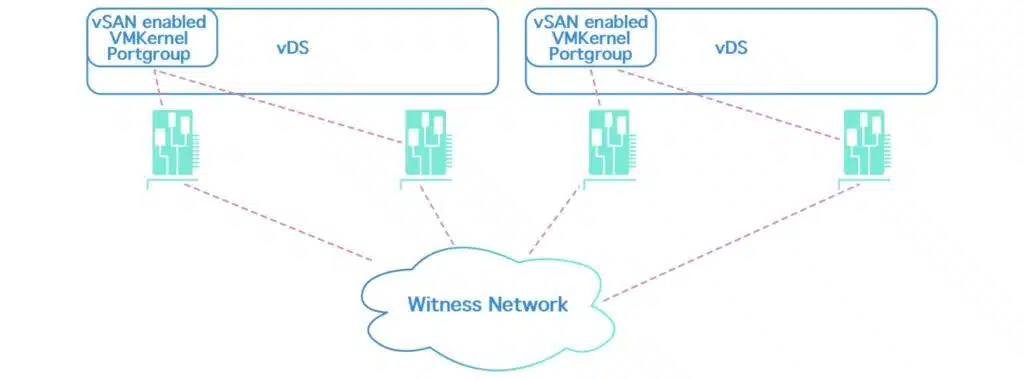

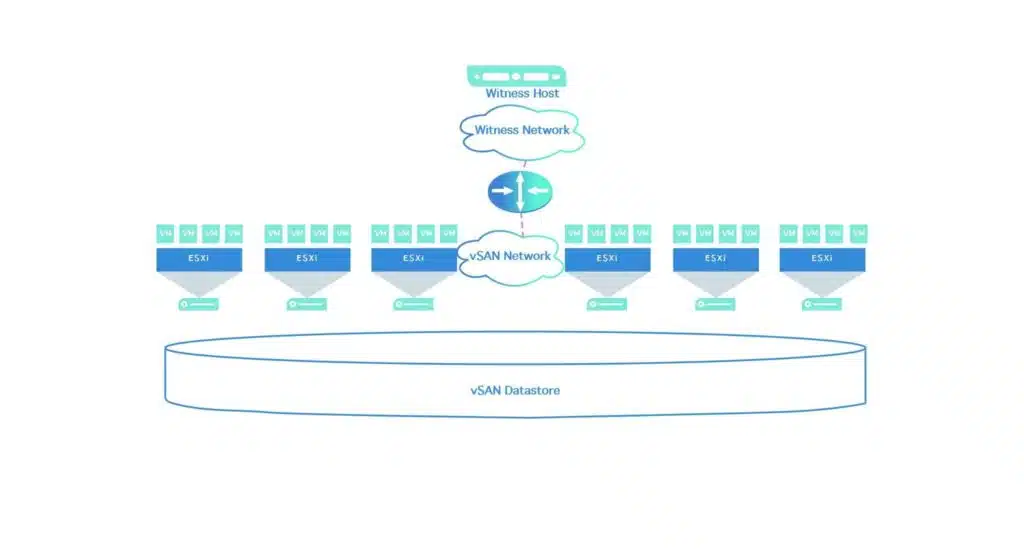

Stretched Cluster

With the vSAN Stretched Cluster option, you can spread up to 30 ESXi hosts across two physical locations. Virtual machines can be located at either site and are mirrored to the other.

A layer two or layer three network can connect the sites. Layer two networking is simpler to manage. The network needs to be low-latency as round-trip time (RTT) must be under 5ms. Generally, this makes Stretched Cluster configurations viable across campuses or metropolitan areas, but not larger geographical distances.

In the Stretched Cluster topology, VMs can migrate using vMotion and tolerate an entire site failure without the need for any replication hardware or orchestration software. vSAN mirroring and vSphere HA handle the whole failover process in a matter of minutes.

Stretched Cluster configurations require a third site to act as a witness site to ensure that failover occurs correctly.

ROBO/Two Node Cluster

Although vSAN typically requires a minimum of three hosts, a special ROBO (remote office/branch office) deployment option is available for small environments such as remote locations and branch offices.

With the ROBO option, you can deploy two ESXi hosts and mirror VMs between them. While a third host is still technically required for the vSAN logic to function, it can be a virtual machine running a special version of ESXi. This third node, or witness host, runs at the corporate head office or on a cloud platform such as AWS. You don’t even need a physical switch for the vSAN network; you can use a crossover cable between the two hosts!

This topology provides small locations with enterprise-grade shared storage and features such as vMotion, DRS, and HA that are prohibitively expensive with traditional storage.

Conclusion

As we can see, vSAN is a highly flexible solution that organizations can leverage for a variety of use cases. It provides vSphere administrators with a scalable storage technology they can control and manage directly, without requiring a dedicated storage team or specialized hardware.

With All-flash mode and 10-Gigabit networking, it can compete with traditional Storage Area Networks while still providing individual virtual machines with tailored policies. Furthermore, with features such as encryption, NFS, iSCSI, Stretched Clustering, horizontal scaling, and the ability to share storage between vSAN clusters, it is a compelling alternative to traditional storage.
