The traditional method of allocating storage to a server, known as thick provisioning, often leads to overprovisioning and waste. With thick provisioning, space is fully allocated to a device and subtracted from the total space available.

For example, if we create a 50GB storage device on an array with a capacity of 150GB, there will be 100GB left. What if the host only uses 1GB of its allocated 50GB? The other 49GB is now wasted. That waste adds up fast in a world where virtualization is the norm.
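As a quick sketch of that arithmetic, the hypothetical Python function below models thick provisioning. The full device size is subtracted from the array up front, and whatever the host never writes stays stranded inside the device.

```python
def thick_provision(array_capacity_gb: int, device_size_gb: int, used_gb: int) -> tuple[int, int]:
    """Return (free array capacity, allocated-but-unused capacity) in GB.

    Thick provisioning reserves the whole device up front, so the array
    loses device_size_gb no matter how much the host actually writes.
    """
    if device_size_gb > array_capacity_gb:
        raise ValueError("device is larger than the array")
    if used_gb > device_size_gb:
        raise ValueError("host cannot use more than the device size")
    free_gb = array_capacity_gb - device_size_gb
    unused_gb = device_size_gb - used_gb  # reserved but never written
    return free_gb, unused_gb


free, unused = thick_provision(array_capacity_gb=150, device_size_gb=50, used_gb=1)
print(f"free on array: {free}GB, allocated but unused: {unused}GB")
# free on array: 100GB, allocated but unused: 49GB
```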

Thin provisioning solves this problem by enabling flexible virtual storage allocation. Effectively using VMware thin provisioning can help you optimize storage in VMware environments. However, VMware storage provisioning has some nuance to it, so it’s important to understand the different methods of allocation and their tradeoffs.

Here, to help you hit the ground running with VMware thin provisioning, we’ll explore it and the other VMware disk allocation methods in depth. Let’s get started with a closer look at what thin provisioning is and where it came from.

What is thin provisioning?

Thin provisioning, sometimes called virtual provisioning, is a method of efficiently allocating storage resources by presenting a right-sized virtual storage device to a host.

Thin provisioning was originally developed by DataCore in 2002. However, 3PAR, a storage company later acquired by HP, is credited with coining the “thin provisioning” term we use today.

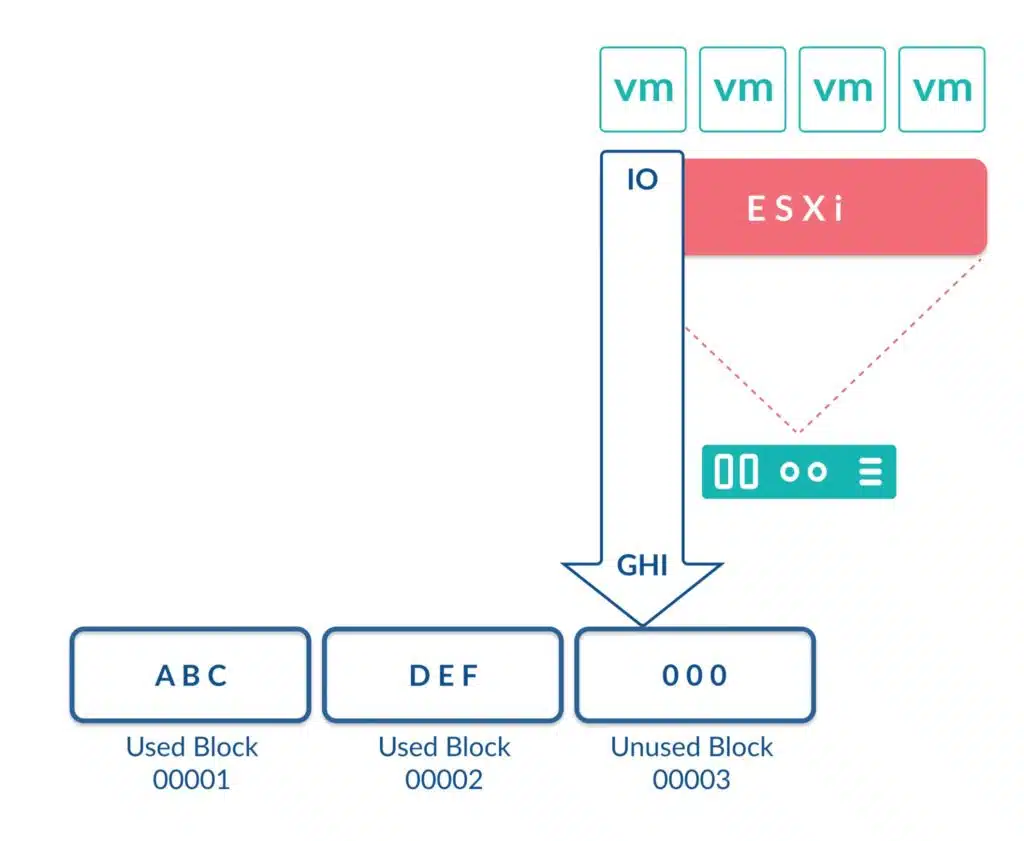

Thin provisioning presents a storage device to a host but allocates physical space only as the host requires it. The presented capacity is initially just metadata (pointers) and does not consume disk space until data is physically written.

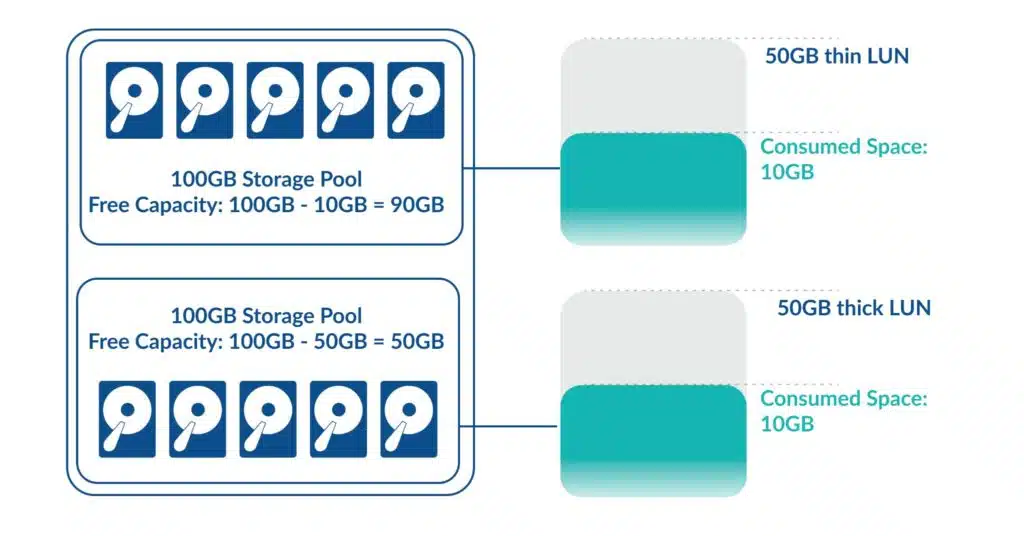

For example, when a 50GB thin device is presented to a host, its initial physical footprint is effectively zero. Capacity is consumed only as the host writes to the device: once the writes occur, disk blocks are allocated. Therefore, a 50GB thin device with 10GB of data written to it consumes only 10GB of physical disk space.
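To make the on-demand behavior concrete, here is a toy Python model of a thin device. The `ThinDevice` class and its 1GB allocation extent are illustrative assumptions, not how any particular array tracks blocks; the point is that physical consumption follows writes, not the presented size.

```python
class ThinDevice:
    """Toy model of a thin-provisioned device (hypothetical).

    The host sees presented_gb of capacity, but a physical extent is
    allocated only the first time the host writes into it.
    """

    def __init__(self, presented_gb: int, extent_gb: int = 1):
        self.presented_gb = presented_gb
        self.extent_gb = extent_gb          # assumed allocation granularity
        self.allocated: set[int] = set()    # extents backed by physical disk

    def write(self, offset_gb: int, length_gb: int) -> None:
        if length_gb <= 0:
            return
        if offset_gb < 0 or offset_gb + length_gb > self.presented_gb:
            raise ValueError("write falls outside the presented device")
        first = offset_gb // self.extent_gb
        last = (offset_gb + length_gb - 1) // self.extent_gb
        # Extents already in the set were allocated earlier; update()
        # only adds the ones being written for the first time.
        self.allocated.update(range(first, last + 1))

    @property
    def consumed_gb(self) -> int:
        return len(self.allocated) * self.extent_gb


dev = ThinDevice(presented_gb=50)
print(dev.consumed_gb)   # 0: nothing written yet
dev.write(offset_gb=0, length_gb=10)
print(dev.consumed_gb)   # 10
dev.write(offset_gb=0, length_gb=10)  # overwriting allocates nothing new
print(dev.consumed_gb)   # 10
```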

When working with thin provisioning, it is important to remember that the host is unaware whether a LUN is thick or thin. Hosts simply see and use storage regardless of the underlying provisioning method.

The image above shows the difference between thin and thick provisioning. The thin device consumes 10GB of space, leaving the storage pool with 90GB of free allocatable capacity. The traditionally (thick) provisioned device consumes all of its space up front, including unused space.

VMware VM Disk Provisioning

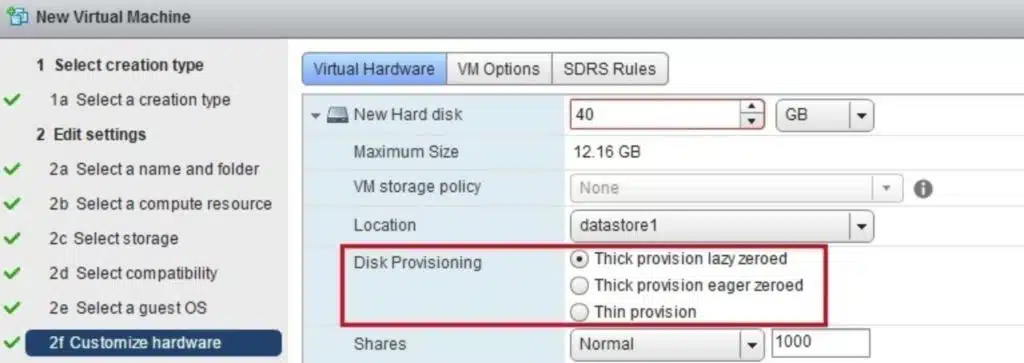

VMware vSphere and vSAN can use thin provisioning when allocating storage. When using the ‘Create New Virtual Machine’ wizard, we have three options for provisioning a virtual machine’s (VM’s) disks on a vSphere-managed datastore: “LazyZeroedThick” (the default), “EagerZeroedThick”, and “Thin”. Administrators must be familiar with these options and their use cases to allocate storage effectively.

Note that you can combine VMware thin provisioning with storage array-based thin provisioning. However, before you do, consider the tradeoffs, which we’ll discuss later in this article.

Each of these options has characteristics that may be useful depending on your requirements for performance, manageability, and creation times. Below is a table that summarizes the differences.

| | LazyZeroedThick | EagerZeroedThick | Thin |
|---|---|---|---|
| Creation Time | Fast | Slow | Fastest |
| Allocation | Fully preallocated | Fully preallocated | Allocated on demand |
| Disk Layout | High chance of being contiguous | High chance of being contiguous | Lower chance of being contiguous |
| Zeroing | First write | Fully at creation | At allocation |

Now that we understand the high-level differences, let’s dive into the details of each method.

Lazy Zeroed Thick (LZT)

Lazy Zeroed Thick (LZT) is the default disk provisioning method. When the virtual disk is created, its full size is allocated within the VMFS file system (and, on a thick LUN, within the storage array itself). However, the blocks are not zeroed at that point to erase any old data left on the LUN; zeroing is deferred until each block is first written.

With LZT, creation time is minimal because we don’t have to write large amounts of zeros across the network and into the LUN. As a result, disk creation also generates little SAN and disk I/O.

However, LZT has tradeoffs. When a VM writes to a block for the first time, the write is buffered in memory until the hypervisor has zeroed out the entire VMFS block. This buffering adds noticeable latency to the first write to each block. Subsequent writes to the same block skip the zeroing step, so the delay goes away.
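The first-write penalty can be sketched as follows. `LazyZeroedDisk` and the relative costs `ZERO_COST` and `WRITE_COST` are hypothetical values chosen only to show the pattern: every block pays the zeroing cost once, on its first write.

```python
ZERO_COST = 5   # hypothetical relative cost of zeroing one VMFS block
WRITE_COST = 1  # hypothetical relative cost of writing the guest data


class LazyZeroedDisk:
    """Toy LZT model: all blocks are allocated at creation, but each block
    is zeroed only when it is written for the first time."""

    def __init__(self, num_blocks: int):
        self.zeroed = [False] * num_blocks

    def write(self, block: int) -> int:
        """Write to one block and return the hypothetical cost of the write."""
        cost = WRITE_COST
        if not self.zeroed[block]:
            cost += ZERO_COST          # first write: zero the block first
            self.zeroed[block] = True
        return cost


disk = LazyZeroedDisk(num_blocks=8)
print(disk.write(3))  # 6: first write to block 3 pays the zeroing cost
print(disk.write(3))  # 1: later writes to block 3 do not
print(disk.write(4))  # 6: block 4 has not been zeroed yet
```

Setting every entry of `zeroed` to `True` at creation would turn this into a model of EZT instead: slower to create, with no first-write penalty.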

Lastly, let’s dispel a common myth. Because of their disk layout, many believe that LZT and EZT disks perform better than thin disks. Since LZT and EZT fully allocate their blocks up front, those blocks are likely to be contiguous, meaning physically adjacent to each other. A contiguous layout could perform better for sequential I/O, but in practice it generally doesn’t.

The boost rarely materializes because a datastore typically hosts multiple VMs. When several VMs issue I/O at once, their streams are interleaved, so I/O that is sequential within each VM arrives at the array as an effectively random pattern. Further, arrays typically absorb writes into cache anyway before destaging them to disk.
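Here is a toy illustration of why per-VM sequential I/O turns random at the array. It assumes a strict round-robin merge of three VMs’ request streams with made-up LBAs; real hypervisor and array scheduling is more complex, but interleaving breaks block adjacency in the same way.

```python
from itertools import zip_longest


def interleave(streams: list[list[int]]) -> list[int]:
    """Merge per-VM LBA streams round-robin, a simple stand-in for how a
    shared datastore mixes concurrent I/O."""
    merged = []
    for batch in zip_longest(*streams):
        merged.extend(lba for lba in batch if lba is not None)
    return merged


def sequential_fraction(lbas: list[int]) -> float:
    """Fraction of requests whose LBA immediately follows the previous one."""
    if len(lbas) < 2:
        return 1.0
    hits = sum(1 for prev, cur in zip(lbas, lbas[1:]) if cur == prev + 1)
    return hits / (len(lbas) - 1)


# Three VMs, each reading its own region strictly sequentially (hypothetical LBAs).
vm_a = list(range(0, 100))
vm_b = list(range(10_000, 10_100))
vm_c = list(range(20_000, 20_100))

print(sequential_fraction(vm_a))                            # 1.0
print(sequential_fraction(interleave([vm_a, vm_b, vm_c])))  # 0.0
```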

Lazy Zeroed Thick (LZT) summary

PROS:

- Fast to create

- Performance will equal EZT once every block has been written at least once

CONS:

- Slow performance for the first write

- Not thin provisioned, so upfront storage costs may be higher

RECOMMENDATION: Consider using LZT if provisioning speed is a top priority.

It is the default option, but it offers few advantages unless provisioning speed is important.

Eager Zeroed Thick (EZT)

With Eager Zeroed Thick (EZT), the blocks that make up the VM’s disk on the datastore are fully zeroed out during creation. As a result, provisioning takes longer, in proportion to the size of the disk.

That said, VMware’s use of a storage primitive known as “Write Same” significantly reduces the delay. With Write Same, which is implemented via the vSphere API for Array Integration (VAAI), the ESXi host does not have to zero out each block explicitly. Instead, it offloads the process to the array itself, which can complete the task much faster than ESXi.

Furthermore, many all-flash arrays do not physically write zeros to each block; they typically track zeroed ranges in metadata instead. This means that while EZT disks are technically slower to provision, in practice the difference is often small, particularly on all-flash arrays.
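A rough sketch of why the Write Same offload helps: with WRITE SAME, each SCSI command carries a single logical block of data that the array repeats across a range, instead of the host sending every zero itself. The 512-byte logical block and the 1MiB range per command (`MAX_BLOCKS_PER_CMD`) are assumptions for illustration, not ESXi’s actual transfer sizes.

```python
LOGICAL_BLOCK = 512         # bytes per logical block (assumed)
MAX_BLOCKS_PER_CMD = 2048   # hypothetical: each command covers 1MiB


def zeroing_traffic(disk_bytes: int, offload: bool) -> tuple[int, int]:
    """Return (commands issued, payload bytes sent by the host) to zero a disk.

    Without offload, the host sends every zero byte itself. With WRITE SAME,
    each command carries one logical block of zeros and the array repeats it
    across the command's range.
    """
    blocks = (disk_bytes + LOGICAL_BLOCK - 1) // LOGICAL_BLOCK
    commands = (blocks + MAX_BLOCKS_PER_CMD - 1) // MAX_BLOCKS_PER_CMD
    if offload:
        return commands, commands * LOGICAL_BLOCK
    return commands, blocks * LOGICAL_BLOCK


disk_size = 100 * 2**30  # a 100GiB EZT disk
for offload in (False, True):
    cmds, sent = zeroing_traffic(disk_size, offload)
    print(f"offload={offload}: {cmds} commands, {sent // 2**20} MiB sent")
# offload=False: 102400 commands, 102400 MiB sent
# offload=True: 102400 commands, 50 MiB sent
```

The command count is the same in both cases under these assumptions; what shrinks is the data the host must push across the SAN.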

Because the VMDK file is fully zeroed and allocated upfront, there is no delay when a VM sends a write for the first time to a block. The fast first write times are why many software vendors insist that users deploy their products using EZT disks.

Additionally, EZT disks can have a hidden advantage. Although they are technically fully provisioned, their blocks are entirely zeros, so storage arrays with deduplication and compression can typically reduce actual space consumption dramatically.
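A minimal sketch of why zeroed blocks deduplicate so well, assuming a generic content-hash deduplication scheme with 4KiB blocks. Real arrays use their own fingerprinting and often special-case zero blocks, but the outcome is similar: identical all-zero blocks collapse to a single stored copy.

```python
import hashlib

BLOCK_SIZE = 4096  # assumed deduplication granularity in bytes


def dedupe(blocks: list[bytes]) -> dict[str, bytes]:
    """Store one copy of each distinct block, keyed by its SHA-256 digest."""
    store: dict[str, bytes] = {}
    for block in blocks:
        store.setdefault(hashlib.sha256(block).hexdigest(), block)
    return store


# A freshly created EZT region: every block is filled with zeros.
ezt_region = [bytes(BLOCK_SIZE) for _ in range(2_560)]  # 10MiB logical
unique = dedupe(ezt_region)
logical = len(ezt_region) * BLOCK_SIZE
physical = sum(len(b) for b in unique.values())
print(f"logical {logical // 2**20} MiB, physical {physical} bytes")
# logical 10 MiB, physical 4096 bytes
```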

Eager Zeroed Thick (EZT) summary

PROS:

- Best possible performance for the first write

- Zeroed out blocks with EZT lend themselves to deduplication and compression

CONS:

- Can be slower to create

- Not thin provisioned, so upfront storage costs may be higher.

RECOMMENDATION: EZT is a good choice for you if performance is a top priority and storage consumption and slower provisioning times are not an issue. Additionally, EZT is often mandatory for specific workloads such as databases. EZT is highly recommended on all-flash arrays.

VMware Thin Provisioning

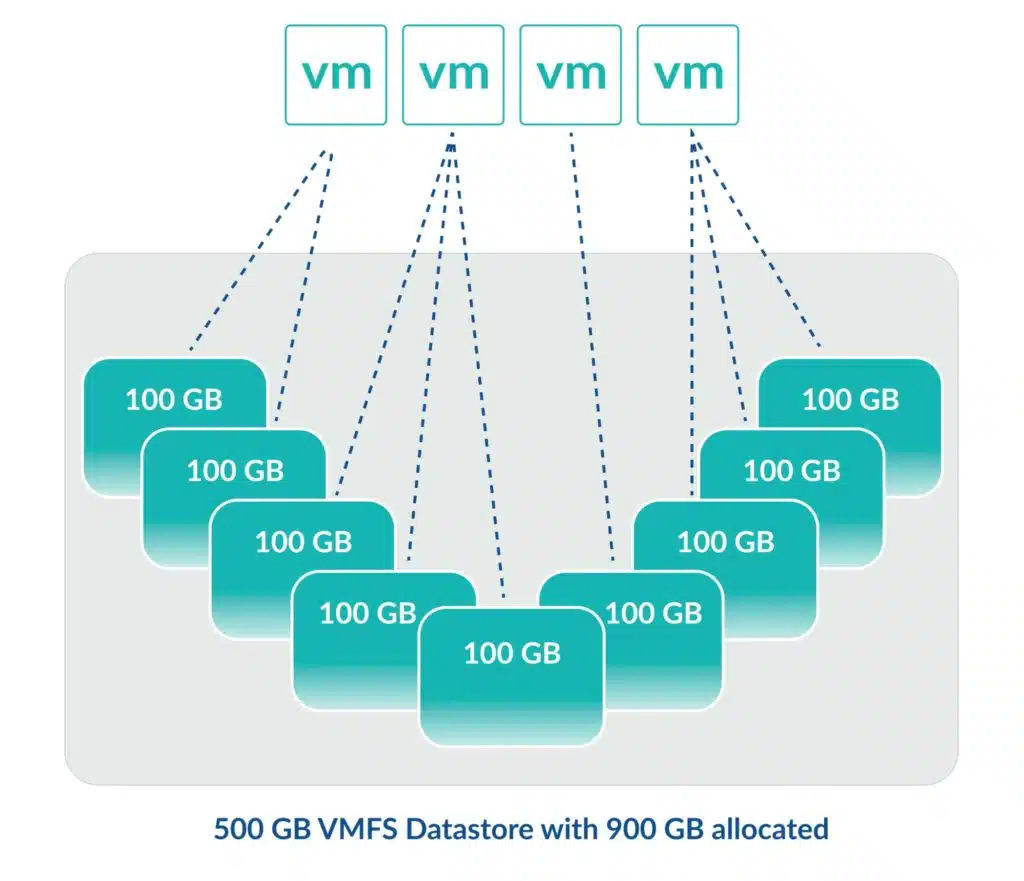

VMware thin provisioning is similar to array-based thin provisioning. The ESXi hypervisor can allocate a disk to a virtual machine without having or allocating the space upfront. As a result, administrators can create a virtual disk of almost any size (up to an imposed 62TB limit) without possessing that capacity physically.

This functionality can prove convenient if we are not sure what size disk a VM requires or if we must quickly provision virtual machines without yet owning the storage capacity for them. Further, a hypervisor can allocate VMs more storage space than is physically available.

In terms of performance, thin disks behave much like Lazy Zeroed Thick disks. At provisioning, blocks are neither allocated nor pre-zeroed, leading to fast creation times. When a write is issued for the first time to a fresh block, the write must be buffered while the block is allocated and zeroed, which adds initial latency.

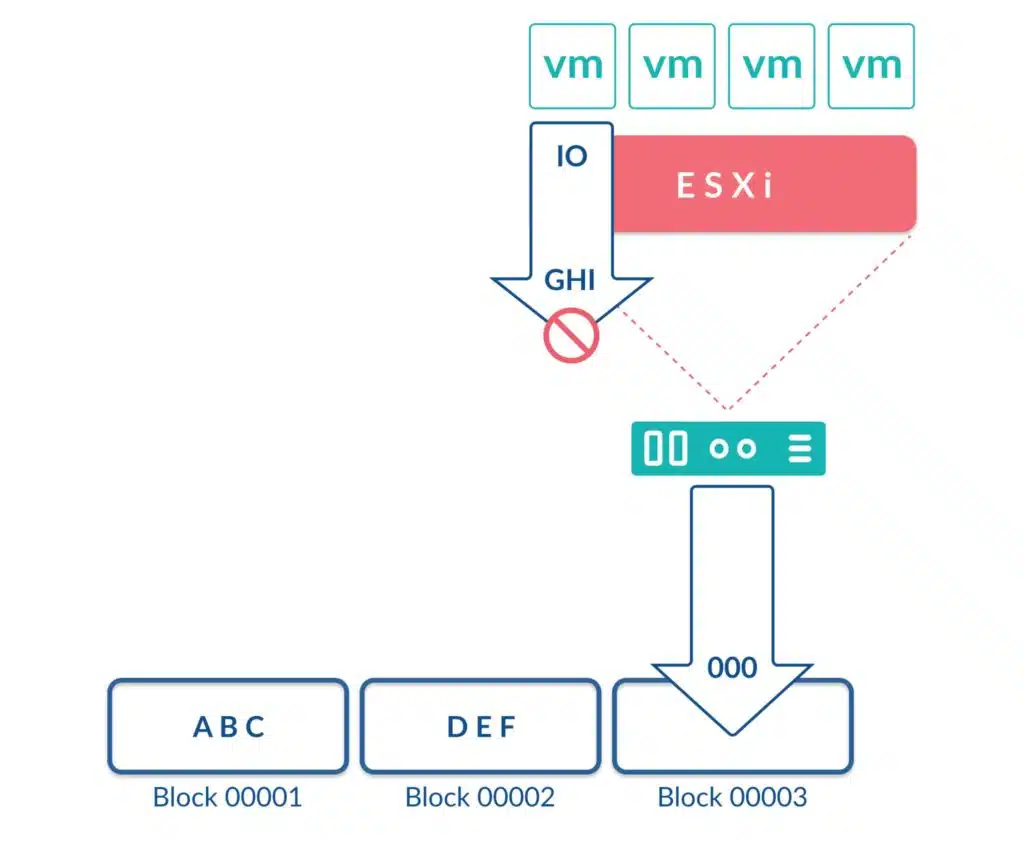

Care must be taken when managing thin disks because the datastore backing them can run out of physical capacity, particularly when storage is overallocated. When this happens, the hypervisor intercepts the “Out of Space” error returned by the storage so that the VM’s guest operating system never receives it, because that error could crash the application. To stop further writes, the hypervisor pauses the VM, giving administrators time to fix the issue by increasing the datastore capacity or migrating the VM.
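The out-of-space behavior can be sketched with a hypothetical `ThinDatastore` model in which several thin disks draw from one shared pool. When a write needs space the pool cannot supply, the model halts the VM rather than returning an error to the guest, and growing the datastore lets the write proceed. This is a simplification of what ESXi actually does.

```python
class ThinDatastore:
    """Hypothetical model: thin disks on one datastore share its physical space."""

    def __init__(self, capacity_gb: int):
        self.capacity_gb = capacity_gb
        self.used_gb = 0
        self.paused: set[str] = set()

    def write_new_data(self, vm: str, gb: int) -> bool:
        """Try to allocate space for new writes; return True if they succeed."""
        if vm in self.paused:
            return False                    # a paused VM issues no I/O
        if self.used_gb + gb > self.capacity_gb:
            # Out of space: hold the write and pause the VM instead of
            # passing the error to the guest operating system.
            self.paused.add(vm)
            return False
        self.used_gb += gb
        return True

    def grow(self, extra_gb: int) -> None:
        """Admin adds capacity; in this model, paused VMs resume at once."""
        self.capacity_gb += extra_gb
        self.paused.clear()


ds = ThinDatastore(capacity_gb=100)
print(ds.write_new_data("vm1", 60))  # True
print(ds.write_new_data("vm2", 50))  # False: would exceed 100GB, vm2 paused
print(ds.paused)                     # {'vm2'}
ds.grow(50)
print(ds.write_new_data("vm2", 50))  # True: 110GB used of 150GB
```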

VMware Thin Provisioning summary

PROS:

- Minimizes storage consumption

- Minimizes storage costs

CONS:

- Slow performance for the first write

- Management overhead required to ensure out-of-space conditions do not occur, particularly if used in combination with a thin LUN.

RECOMMENDATION: It is best practice to use VMware thin provisioning if storage capacity is at a premium or if the storage array cannot thin provision. Be sure to check with your storage vendor to verify whether the VAAI Thin Provisioning primitives are available.

VMDK Thin Provisioning vs Array-Based Thin Provisioning

Both storage arrays and ESXi can provide thin provisioning capabilities. In fact, VMware fully supports the use of thin provisioning at the array level for VMs.

Interestingly, we can even combine the two, having Thin VMDK disks on Thin LUNs. However, it is not recommended as it increases the chances of running out of space at the array or datastore level (or both). If thin provisioning is required, it should be used in one location or the other.
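With thin VMDKs on a thin LUN, overallocation can exist at two layers at once. The hypothetical function below computes the ratio of provisioned to available capacity at each layer and reports any layer above 1.0; the sizes in the example are made up.

```python
def overcommitted_layers(vmdk_sizes_gb: list[int], lun_size_gb: int,
                         pool_lun_sizes_gb: list[int],
                         pool_physical_gb: int) -> dict[str, float]:
    """Return provisioned/available ratios for each layer that exceeds 1.0.

    datastore:  thin VMDK sizes vs. the LUN (datastore) size
    array pool: thin LUN sizes vs. the array's physical capacity
    """
    ratios = {
        "datastore": sum(vmdk_sizes_gb) / lun_size_gb,
        "array pool": sum(pool_lun_sizes_gb) / pool_physical_gb,
    }
    return {layer: r for layer, r in ratios.items() if r > 1.0}


# Hypothetical: 3TB of thin VMDKs on a 2TB thin LUN, on a pool that backs
# four 2TB thin LUNs with 4TB of physical capacity.
print(overcommitted_layers([1000, 1000, 1000], 2000, [2000] * 4, 4000))
# {'datastore': 1.5, 'array pool': 2.0}
```

Any layer in the result can fill up before its consumers have written everything they were promised, so with thin on thin both layers need monitoring.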

Here is a breakdown of the different array and VMDK provisioning options.

| | VMDK Thin | VMDK Thick |
|---|---|---|
| Thin Array Provisioning | Risky. Not recommended! We can run out of space at the array level or the datastore. | Some risk. We can run out of space if array capacity is overallocated and poorly managed. |
| Thick Array Provisioning | Some risk. If the datastore is overallocated and poorly managed, we can run out of space. | Safest. All storage capacity is allocated upfront. Can be expensive but costs can be minimized with compression and deduplication. |

When thin provisioning is used at the storage array layer, most storage arrays support the Thin Provisioning primitive included in VAAI. This matters because, without it, ESXi cannot tell whether a LUN is thin provisioned, so it could easily deploy more, or larger, machines into a thin datastore than is safe.

With VAAI, a storage administrator can create alarms within the array to notify when the storage pool is running out of capacity or if space has been overallocated to a risky degree. VAAI can then advertise these alerts to vCenter when the thresholds are breached, giving vSphere administrators a chance to proactively react and manage their capacity.
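The kind of array-side threshold check described above might look like the sketch below. The function name and the 75% fill and 2.0x overallocation thresholds are hypothetical; real arrays use vendor-specific settings, and it is the array, not this code, that reports breaches to vCenter via VAAI.

```python
def pool_alerts(used_gb: float, physical_gb: float, provisioned_gb: float,
                used_warn: float = 0.75, overalloc_warn: float = 2.0) -> list[str]:
    """Hypothetical array-side checks: warn when the pool passes a fill
    threshold or when provisioned capacity exceeds an overallocation limit.
    The default thresholds are illustrative, not vendor defaults."""
    alerts = []
    fill = used_gb / physical_gb
    overalloc = provisioned_gb / physical_gb
    if fill >= used_warn:
        alerts.append(f"pool {fill:.0%} full")
    if overalloc >= overalloc_warn:
        alerts.append(f"overallocated {overalloc:.1f}x")
    return alerts


print(pool_alerts(used_gb=800, physical_gb=1000, provisioned_gb=2500))
# ['pool 80% full', 'overallocated 2.5x']
```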

Conclusion

VM disk provisioning options are more complex than they first appear. Before making a decision, you need to do your homework on performance, deployment speed, management overhead, cost factors, risk, array technologies, and hard drive types.

However, storage technologies and supporting factors such as VAAI have come a long way since VMware selected Lazy Zeroed Thick as the default disk option. EZT disks can compete favorably with LZT deployment times when supported by VAAI and SSD.

Furthermore, EZT can also compete with thin provisioned disks for space efficiency when backed by deduplication and compression technologies. While EZT may come out as a good choice, it is always best to check with your storage vendor for their recommendation.

Also, don’t forget that while it is possible to thin provision both on your array and at the VM level, it is not a good idea to do both. If you want to offload the management of thin provisioning to the array and storage team, while maintaining insight via VAAI, do it at the array level. If you want to control and manage capacity within the vSphere team, go for VM thin provisioning.
