Load balancing is one of the most important aspects of optimizing performance across various applications and tech stacks. In VMware environments, NSX load balancers enable load balancing across several protocols on-prem and in the cloud.

Fundamentally, load balancing is a simple concept: you distribute work across multiple nodes. But once you layer in the technical complexities of large-scale virtualized deployments, it can be intimidating to learn. In this article, we’ll explain the basics of load balancing and take a deep dive into VMware NSX load balancers.

The problems load balancing solves

We’ve already touched on the basic problem load balancing solves. Once a system reaches a certain size, individual servers and network appliances can’t handle the entire load without performance degradation. Load balancers spread the load across multiple devices to prevent this.

However, that’s not the only benefit load balancers provide. Two other key upsides of load balancers are:

- High availability and fault tolerance – Even if your servers are big enough to support the entire workload, there is a chance they could fail. Load balancing helps mitigate the risk by spreading requests across multiple devices.

- Flexibility and control – When a load balancer sits between your clients and servers, you can perform maintenance on backend components without downtime. Additionally, modern load balancers allow you to implement rules that shape traffic flows.

What is NSX?

In recent years, VMware has become a leader in the software-defined networking (SDN) space. This began in the early 2010s with the introduction of VMware vShield and the acquisition of Nicira. These two product lines eventually became known as VMware NSX, which is now the cornerstone of VMware’s SDN offering. NSX has evolved over the years into a unified code stream known as “NSX-T”. It offers multi-hypervisor support and integrations with various platforms, including AWS, Azure, and vSphere.

One of the core features of NSX-T is load balancing.

NSX – Load balancing features

NSX-T has five license tiers with different load balancing features. The table below details the load balancing features different NSX-T license tiers support.

| Features | Standard | Professional | Advanced | Enterprise Plus | Remote Office |
|---|---|---|---|---|---|
| TCP L4 – L7 | NO | NO | YES | YES | YES |
| UDP | NO | NO | YES | YES | YES |
| HTTP | NO | NO | YES | YES | YES |
| Round Robin | NO | NO | YES | YES | YES |
| Source IP Hash | NO | NO | YES | YES | YES |
| Least Connections | NO | NO | YES | YES | YES |
| L7 Application Rules | NO | NO | YES | YES | YES |
| Health Checks – TCP, ICMP, UDP, HTTP, HTTPS | NO | NO | YES | YES | YES |
| Connection Throttling | NO | NO | YES | YES | YES |
| High Availability | NO | NO | YES | YES | YES |

NSX-T has a management plane that is accessed through an NSX Manager VM deployed in your hosting environment. You can provision NSX-T load balancers via the NSX Manager GUI or the NSX API. Examples of automation tools that leverage the API include VMware vRealize Automation (vRA), Terraform, and VMware PowerCLI.
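To make the API route more concrete, here is a minimal Python sketch that builds, but does not send, a request describing a server pool. The URL path (`/policy/api/v1/infra/lb-pools/...`), the JSON field names, and the `ROUND_ROBIN` value are assumptions based on the NSX-T Policy API and should be checked against the API reference for your NSX-T version. The host name, pool name, and helper function are hypothetical.

```python
import json
import urllib.request


def build_pool_request(manager_host, pool_id, member_ips, port=80):
    """Build (but do not send) a request that would define a server pool."""
    # Assumed Policy API path; verify it for your NSX-T version.
    url = f"https://{manager_host}/policy/api/v1/infra/lb-pools/{pool_id}"
    body = {
        "display_name": pool_id,
        "algorithm": "ROUND_ROBIN",  # assumed enum value
        "members": [
            {"display_name": f"{pool_id}-{i}", "ip_address": ip, "port": str(port)}
            for i, ip in enumerate(member_ips, start=1)
        ],
    }
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="PATCH",
    )


# Hypothetical manager host and pool members, for illustration only.
request = build_pool_request(
    "nsx-manager.example.com", "web-pool", ["10.0.0.11", "10.0.0.12"]
)
print(request.get_method(), request.full_url)
```

Authentication and the actual send are left out on purpose. In practice, tools such as Terraform or PowerCLI wrap calls like this for you.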

NSX Load balancer deployment architecture

NSX-T load balancers are deployed as Tier-1 gateways on NSX Edge appliances. What exactly does that mean? Let’s break it down piece by piece.

An NSX Tier-1 gateway is a virtual router inside of NSX. It has its own routing table, memory space, and virtual network interfaces that can connect to network segments you designate. Think of each load balancer as a virtual router with unique instances of those attributes.

NSX edge appliances are simply VMs or physical servers that provide a pool of CPU, memory, and network uplink capacity for NSX virtual routers / gateways.

How NSX load balancers work

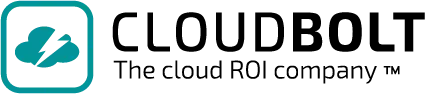

Now that we have defined what an NSX load balancer is and where it resides, let’s talk more about load balancing itself. To begin, here are the key NSX load balancing components:

- Clients – These are the users or applications that will initiate traffic to our load balancer VIP.

- Virtual Server – A construct created on the load balancer to represent an application service.

- Server Pools – A group of identical server nodes that host the load-balanced application.

- Pool Members – An individual server that is configured to be part of a server pool.

- Health Check – A load balancer configuration that constantly assesses pool members’ health and determines when to remove or reinstate pool members based on defined criteria.
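One way to picture how these components relate is as a small data model. The Python sketch below is illustrative only: the class and field names are hypothetical and do not mirror the NSX object schema.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class HealthCheck:
    protocol: str = "TCP"
    interval_seconds: int = 5


@dataclass
class PoolMember:
    name: str
    ip_address: str
    port: int
    healthy: bool = True


@dataclass
class ServerPool:
    name: str
    members: List[PoolMember] = field(default_factory=list)
    health_check: HealthCheck = field(default_factory=HealthCheck)

    def available_members(self) -> List[PoolMember]:
        # Only members that pass the health check receive traffic.
        return [m for m in self.members if m.healthy]


@dataclass
class VirtualServer:
    name: str
    vip: str   # the address clients connect to
    port: int
    pool: ServerPool


pool = ServerPool(
    name="web-pool",
    members=[
        PoolMember("web-01", "10.0.0.11", 80),
        PoolMember("web-02", "10.0.0.12", 80, healthy=False),
        PoolMember("web-03", "10.0.0.13", 80),
    ],
)
virtual_server = VirtualServer("web-vs", "192.0.2.10", 80, pool)
print([m.name for m in virtual_server.pool.available_members()])
# ['web-01', 'web-03']
```

Clients only ever see the virtual server's VIP. The pool, its members, and the health check stay behind it.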

The diagram at the top of this section illustrates the relationship between these components.

Understanding one and two-armed load balancers

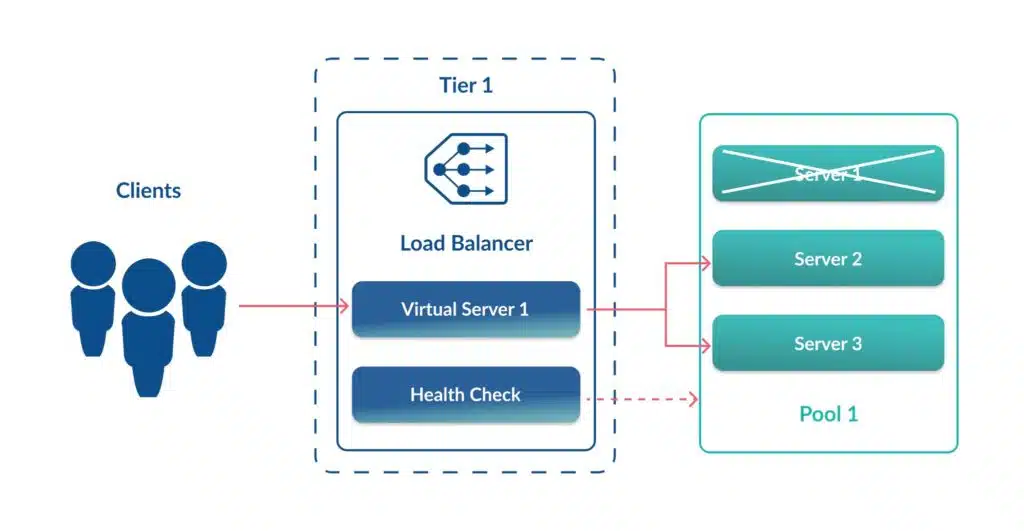

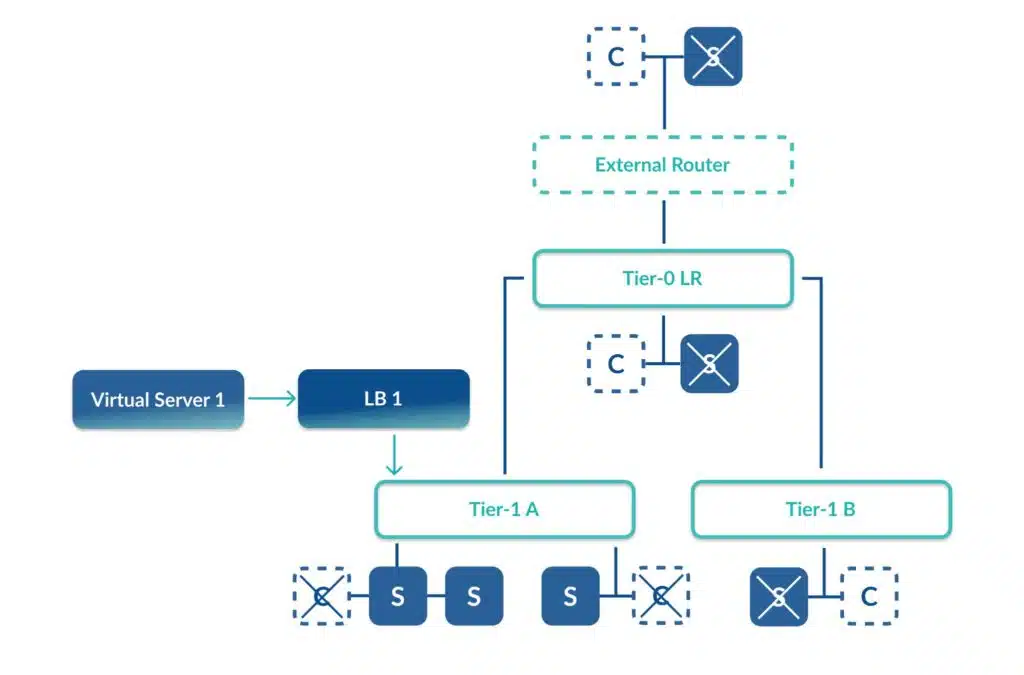

NSX load balancing supports two primary deployment topologies – one-armed and two-armed. Simply put, the load balancer can either be deployed adjacent to the workloads it supports (one-armed) or act as the default gateway for those workloads (two-armed).

The first diagram above shows the one-armed NSX load balancer deployment model.

With a two-armed deployment, shown in the second diagram above, the load balancer also acts as our default gateway. In this configuration, all traffic – whether load balanced or not – flows through the load balancer appliance.

The choice between one-armed and two-armed load balancing comes down to management vs. scalability. With a one-armed load balancer, you can deploy numerous appliances to meet your needs. However, more appliances mean more management complexity. A two-armed deployment doesn’t have the same complexity, but it is limited in scalability because it only allows for one appliance.

How VMware load balancing routes traffic

Load balancers make routing decisions using different calculations. For NSX load balancers, appliances route traffic using basic arithmetic load balancing, deterministic load balancing, or “least connections” load balancing. Let’s take a look at how each of those works.

Basic arithmetic load balancing (round robin)

With basic arithmetic load balancing the application service is accessible on a VIP that evenly distributes traffic. Each new inbound request will be distributed to the next pool member: server A, then B, then C, then repeat. This is the traditional “round robin” approach to load balancing.
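The rotation described above can be sketched in a few lines of Python. This is an illustration of the round robin idea only, not NSX's implementation, and the server names are placeholders.

```python
from itertools import cycle

pool_members = ["server-a", "server-b", "server-c"]
next_member = cycle(pool_members)  # endlessly repeats A, B, C

# Assign seven incoming requests in arrival order.
assignments = [next(next_member) for _ in range(7)]
print(assignments)
# ['server-a', 'server-b', 'server-c', 'server-a', 'server-b', 'server-c', 'server-a']
```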

With NSX, the load balancer also manages the health state of pool members and removes them if they are unhealthy. This is important because sending traffic to an unhealthy pool member would result in errors.

Health checks can be as simple as ping tests, where a pool member is removed after a certain number of lost replies. More complex checks can test the HTTP header response, a page checksum, or other custom criteria.
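The "removed after a certain number of losses" behavior can be sketched as a pair of counters. The Python example below is a simplified illustration: the class, the threshold names (`fall_count`, `rise_count`), and their default values are assumptions for the sketch, not NSX defaults.

```python
class MemberHealth:
    """Tracks whether one pool member should receive traffic."""

    def __init__(self, fall_count=3, rise_count=2):
        self.fall_count = fall_count  # consecutive failures before removal
        self.rise_count = rise_count  # consecutive successes before reinstatement
        self.in_rotation = True
        self._failures = 0
        self._successes = 0

    def record(self, probe_succeeded):
        """Record one probe result and return the member's current state."""
        if probe_succeeded:
            self._failures = 0
            self._successes += 1
            if not self.in_rotation and self._successes >= self.rise_count:
                self.in_rotation = True
        else:
            self._successes = 0
            self._failures += 1
            if self.in_rotation and self._failures >= self.fall_count:
                self.in_rotation = False
        return self.in_rotation


health = MemberHealth()
probes = [True, False, False, False, True, True]
states = [health.record(p) for p in probes]
print(states)
# [True, True, True, False, False, True]
```

The member drops out after the third consecutive failed probe and returns only after two consecutive successes, which avoids flapping on a single lost ping.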

All traffic is evenly distributed under a round robin configuration model, but sometimes more advanced options are required, and the NSX load balancer supports several.

Deterministic load balancing (weighted)

Occasionally, you may want a disproportionate amount of traffic to go to certain pool members. You can achieve this by setting a weight for each server pool member. A weight will cause the load balancer to send a specific percentage of traffic to each pool member. Deterministic load balancing is useful when one server has more resources than others. However, in a virtual environment this scenario isn’t common.
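As a rough illustration of how weights translate into traffic share, the Python sketch below repeats each member in the rotation according to its weight. The weights and server names are hypothetical, and a real load balancer may interleave requests more smoothly than this simple schedule does.

```python
from collections import Counter
from itertools import cycle

# Hypothetical weights: server-a has more resources than the others.
weights = {"server-a": 3, "server-b": 1, "server-c": 1}

# Each member appears in the schedule as many times as its weight.
schedule = [name for name, weight in weights.items() for _ in range(weight)]
picker = cycle(schedule)

counts = Counter(next(picker) for _ in range(100))
print(dict(counts))
# {'server-a': 60, 'server-b': 20, 'server-c': 20}
```

With weights of 3, 1, and 1, server-a receives 3/5 of the requests (60%) and the other two receive 1/5 each (20%).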

Least connections load balancing

Traffic distribution based on simple mathematics works well in a stateless scenario where all connections are equal and downloading the exact same content. However, this method doesn’t work as well for stateful applications where we can’t guarantee all connections will use the same amount of resources.

For example, on an ecommerce website, users spend varying amounts of time on the site, view different numbers of pages, and consume different amounts of shopping cart resources, so we need a better solution. For these cases, the NSX load balancer offers the “least connections” algorithm. “Least connections” is exactly what it sounds like: it distributes traffic based on which pool members are currently servicing the fewest sessions.
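A minimal Python sketch of the least connections idea follows. It only tracks a session counter per member. The function names are hypothetical, and ties are broken by picking the first member listed, which is a choice made for this sketch.

```python
active_sessions = {"server-a": 0, "server-b": 0, "server-c": 0}


def assign(sessions):
    """Send a new connection to the member with the fewest active sessions."""
    member = min(sessions, key=sessions.get)
    sessions[member] += 1
    return member


def release(sessions, member):
    """Record that a session on the given member has ended."""
    sessions[member] -= 1


first_three = [assign(active_sessions) for _ in range(3)]
print(first_three)
# ['server-a', 'server-b', 'server-c']

# The shopper on server-b checks out quickly, so server-b is least loaded again.
release(active_sessions, "server-b")
next_pick = assign(active_sessions)
print(next_pick)
# server-b
```

Unlike round robin, the next request here goes to whichever member has freed up capacity, not to whichever member is next in line.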

Final thoughts

NSX load balancing is a big topic and can seem intimidating at first, but once you learn the pieces and parts, it’s actually very straightforward. Many IT organizations are able to achieve a significant return on investment using virtual load balancers, and the NSX load balancer in particular. This value comes mostly from the ease of automating load balancer deployments and from lower licensing costs compared with legacy hardware platforms, which carry added expense for features that aren’t always utilized.
