A data lake is a single data repository that stores data until it is ready for analysis. Modern object storage services like Google Cloud Platform (GCP) Cloud Storage, Amazon Web Services (AWS) S3, and Azure Blob Storage provide flexible storage in cloud-based architectures. These services aggregate data from multiple sources without defining a schema and can store any type of data.

In this article, we will dive deeper into the pricing dimensions of GCP Cloud Storage and provide recommendations for optimizing costs when using it for a data lake containing structured and unstructured data.

Executive Summary

The primary purpose of a data lake is to store data of different types from multiple sources. Object storage, like GCP Cloud Storage, is suitable for this task. The main pricing dimensions for GCP Cloud Storage are:

GCP Cloud Storage Pricing Dimensions

| Dimensions | Pricing Units |
|---|---|
| Data storage volume | Per GB per month; dependent on the GCP region; dependent on the storage class: Standard, Nearline, Coldline, or Archive |
| Network usage | Ingress is free. Egress is charged per GB and divided into different classes: network egress within Google Cloud (free within the same location), specialty network services, and general network usage |
| API operations | Charges apply when performing operations within Cloud Storage. GCP divides these into three categories: Class A (e.g., adding objects or listing objects), Class B (e.g., reading objects), and Free (e.g., deleting objects). For further details, see the section Operations that fall into each class. Charged per 10,000 operations; dependent on the storage class, with operations against the Archive class being the most expensive |
| Retrieval / early deletion fees | Per GB, dependent on the storage class |

The table above shows that most pricing dimensions for GCP Cloud Storage depend on the storage class used. GCP links the cost of data storage, API operations, retrieval, and early deletion to the underlying storage class; network usage is the exception.

Your total costs for using GCP Cloud Storage as a data lake depend on the volume of data ingested, stored, and analyzed. We will take a deeper look at these costs by considering which pricing dimensions apply from a GCP Cloud Storage perspective.

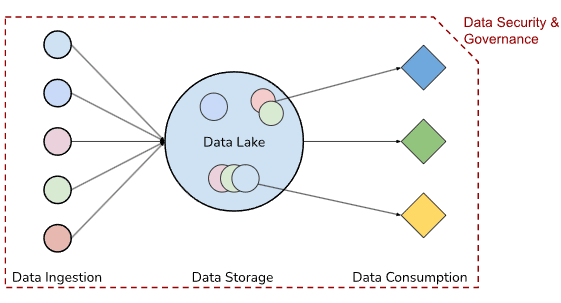

Data Lake Architecture

A cloud-based architecture for a data lake requires these components:

- Data Ingestion (filling the lake)

- Data Storage (the lake itself)

- Data Consumption (deriving value from the lake)

These components must work within your organization’s data security and governance framework. A secure framework defines who can use data and where it can be stored based on data privacy laws and confidentiality requirements.

A data lake is not the same as a data warehouse. A data warehouse is a repository for data that is structured to be ready for analysis. Conversely, a data lake contains raw data, and it may be unclear how this data is to be used.

The table below estimates GCP Cloud Storage costs based on data volume, ingestion, and consumption.


GCP Cloud Storage Cost Estimates

| Component | Day 0 | Day 90 |
|---|---|---|
| Data volume | 1 TB | 5.39 TB |
| Data ingestion | 50 GB per day | 50 GB per day |
| Data consumption | 50 GB per month | 50 GB per month |

Notes:

- An average file size of 50 MB is used to estimate the total file count.

- At-rest storage pricing is based on the GCP region us-east4.

- The cost estimates are based on the pricing available at Cloud Storage pricing as of October 2022. GCP has announced pricing updates that will take effect on April 1, 2023.

Data Ingestion

Data must be ingested into GCP Cloud Storage to fill your data lake. You may ingest data from the GCP Cloud Console, via a Compute Engine virtual machine running in GCP, or from applications using GCP client libraries. Regardless of the source of the data, from a GCP Cloud Storage perspective you will incur network usage and make requests to the Cloud Storage APIs to write data. The table below details the applicable GCP Cloud Storage pricing considerations for data ingestion.

GCP Cloud Storage Pricing Considerations for Data Ingestion

| Pricing dimension | Pricing considerations |
|---|---|
| Network usage | Ingress is free, but the services used for data ingestion may incur costs. |
| API operations | Uploading data requires requests to the storage.*.insert API operation, which is a Class A operation: standard storage, $0.05 per 10,000 operations; archive storage, $0.50 per 10,000 operations. The number of API calls required will depend on the number of files (objects). Larger objects, greater than 100 GB, may benefit from using the multipart upload API, which will mean multiple API operations per object. |

For 1 TB of data uploads, with an average file size of 50 MB, the estimated cost of the storage.*.insert API operations is:

- 1 TB * 1024 * 1024 = 1,048,576 MB; 1,048,576 MB / 50 MB = 20,971.52 file operations

- For standard storage: 20,971.52 / 10,000 * $0.05 = $0.10

The estimated cost of adding this data directly to the archive storage class is:

- For archive storage: 20,971.52 / 10,000 * $0.50 = $1.05

In the cost estimates table above, we assumed adding 50 GB of data per day to the data lake. The estimated cost of these API operations is:

- 50 GB * 1024 = 51,200 MB; 51,200 MB / 50 MB = 1,024 file operations

- For standard storage: 1,024 / 10,000 * $0.05 = $0.005 per day

After 90 days, the estimated API operations cost of adding 50 GB per day is:

- 50 GB * 1024 * 90 days = 4,608,000 MB; 4,608,000 MB / 50 MB = 92,160 file operations

- For standard storage: 92,160 / 10,000 * $0.05 = $0.46

These estimates show that API operations costs are low compared with the data storage costs we’ll review in the next section.
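The ingestion arithmetic above can be reproduced with a short script. This is a minimal sketch rather than an official calculator: the function name `class_a_cost` and the rate dictionary are illustrative assumptions, the rates are the October 2022 figures quoted in this article, and it assumes one insert operation per file (no multipart uploads).

```python
# Class A rates in USD per 10,000 operations, as quoted in this article
# (October 2022). Check current pricing before relying on them.
CLASS_A_RATE = {"standard": 0.05, "archive": 0.50}

MB_PER_GB = 1024


def class_a_cost(data_gb: float, storage_class: str, avg_file_mb: float = 50) -> float:
    """Estimate the Class A cost of uploading data_gb as files of avg_file_mb.

    Assumes exactly one insert operation per file.
    """
    operations = data_gb * MB_PER_GB / avg_file_mb
    return operations / 10_000 * CLASS_A_RATE[storage_class]


initial_load = class_a_cost(1024, "standard")    # 1 TB initial load
daily = class_a_cost(50, "standard")             # 50 GB added per day
ninety_days = class_a_cost(50 * 90, "standard")  # 90 days of daily loads

print(f"initial load: ${initial_load:.4f}")
print(f"per day:      ${daily:.5f}")
print(f"90 days:      ${ninety_days:.4f}")
```

Changing the `avg_file_mb` argument shows how sensitive the operations cost is to file size: many small files mean many more Class A operations for the same data volume.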

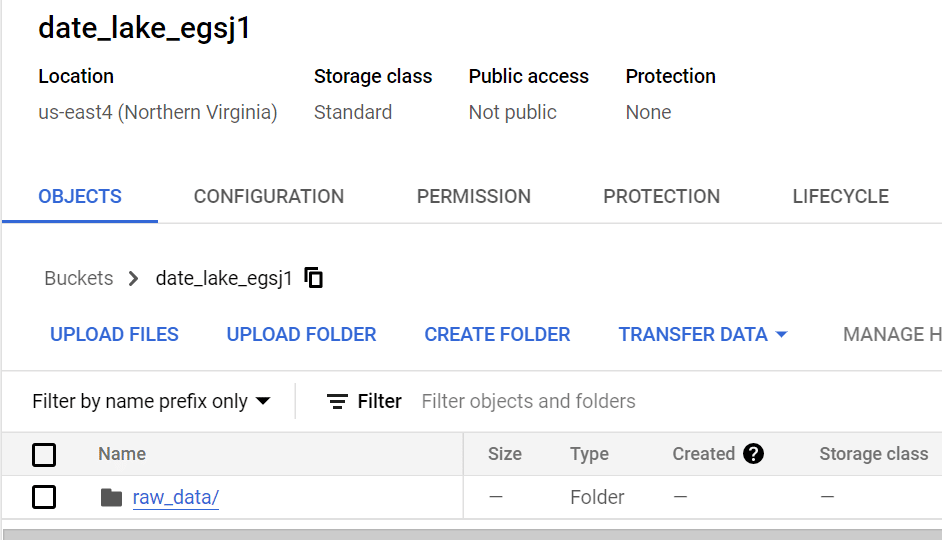

Data Storage

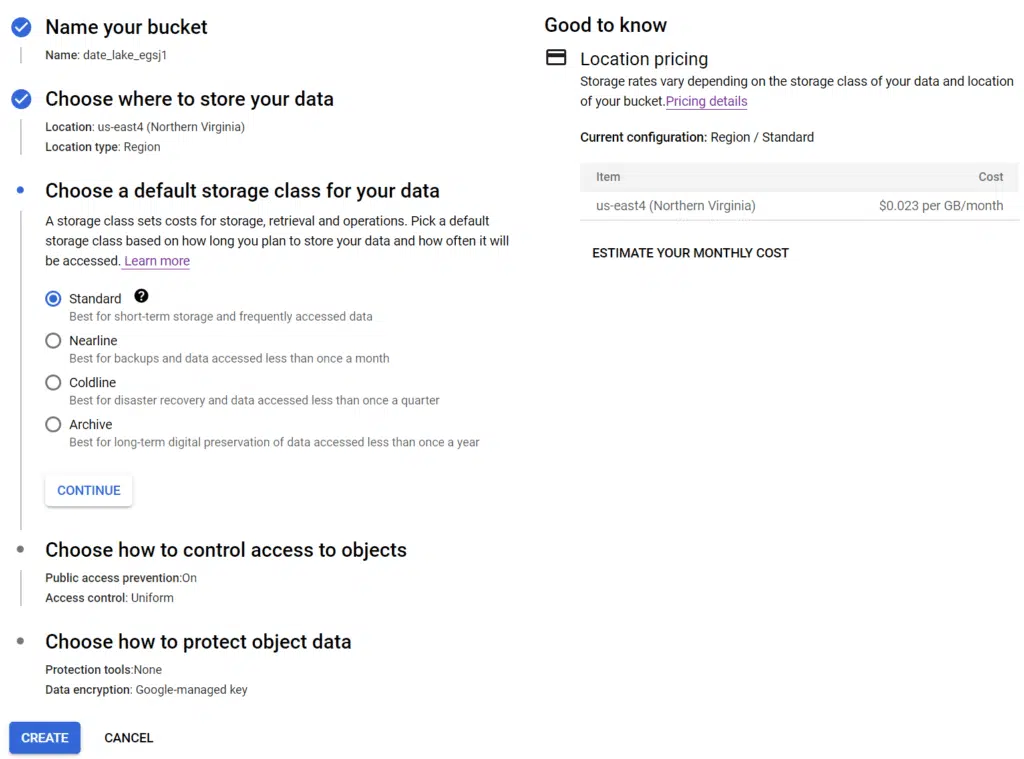

Data stored on GCP Cloud Storage will incur at-rest storage costs. Data at rest is data that is not in transit or in use. GCP calculates the cost based on the data volume, the length of time stored, and the storage class used. At-rest storage is priced per GB per month.

GCP Cloud Storage Pricing Considerations for Data Storage

| Pricing dimension | Pricing considerations |
|---|---|
| Data storage volume | The total at-rest costs will depend on the data allocation between the different storage classes. At-rest storage costs also vary between GCP regions. For example, per GB per month: standard storage is $0.023 in us-east4 and $0.020 in europe-west1; archive storage is $0.0025 in us-east4 and $0.0012 in europe-west1. |
| API operations | Object Lifecycle Management makes requests to the SetStorageClass operation, which is a Class A operation. The Class A rate associated with the object’s destination storage class applies (per 10,000 operations): nearline storage, $0.10; coldline storage, $0.10; archive storage, $0.50. |

You can use Object Lifecycle Management to “downgrade” the storage class of objects that are older than N days or have not been accessed for X days to reduce your at-rest storage costs.

Downgrading from standard storage could move an object to the nearline, coldline, or archive storage class. In our example, after 90 days, there is 5.39 TB of data stored. The estimated cost of storing 5.39 TB for 30 days as standard storage is:

- 5.39 TB * 1024 = 5,519.36 GB * $0.023 per GB per month = $126.95

Given that only 50 GB of data is being analyzed per month, you can optimize the storage costs by downgrading from standard storage. The table below shows the at-rest storage pricing and the GCP recommended use case for the different storage classes. By using archive storage instead of standard storage, the at-rest storage costs decrease from $126.95 to $13.80 per month.

GCP Storage Class Pricing and Costs

| Storage class | GCP recommendation | Pricing, us-east4 per GB per month | Cost of storing 5.39 TB for 30 days |
|---|---|---|---|
| Standard storage | “Hot” data that is frequently accessed or only stored for a limited time | $0.023 | $126.95 |
| Nearline storage | “Warm” data that is accessed on average once per month or less | $0.013 | $71.75 |
| Coldline storage | “Cool” data that is accessed at most once a quarter | $0.006 | $33.12 |
| Archive storage | “Cold” data that is accessed less than once a year | $0.0025 | $13.80 |
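The last column of the table can be recomputed as follows. This is a small sketch using the us-east4 prices quoted above (October 2022); the dictionary and function names are illustrative and do not come from any GCP library.

```python
# At-rest prices in USD per GB per month for us-east4, as quoted in the
# table above (October 2022).
PRICE_PER_GB_MONTH = {
    "standard": 0.023,
    "nearline": 0.013,
    "coldline": 0.006,
    "archive": 0.0025,
}


def monthly_storage_cost(data_tb: float, storage_class: str) -> float:
    """Return the cost of keeping data_tb at rest for one month, in USD."""
    data_gb = data_tb * 1024
    return round(data_gb * PRICE_PER_GB_MONTH[storage_class], 2)


costs = {cls: monthly_storage_cost(5.39, cls) for cls in PRICE_PER_GB_MONTH}
for cls, cost in costs.items():
    print(f"{cls:<9} ${cost:.2f}")
```

Swapping in the prices for another region gives a quick comparison of how bucket location affects the same data volume.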

Changing the storage class of a file (object) involves an API call, and the cost is based on the Class A rate associated with the object’s destination storage class. For example, we can estimate the cost of changing all 5.39 TB from standard storage to archive storage:

- 5.39 TB * 1024 * 1024 = 5,651,824.64 MB; 5,651,824.64 MB / 50 MB = 113,037 file operations

- SetStorageClass to archive operations: 113,037 / 10,000 * $0.50 = $5.65

With “downgraded” storage classes, it is important to note the minimum storage durations. The current minimum durations are below.

GCP Minimum Storage Durations

| Storage class | Minimum duration |
|---|---|
| Standard storage | None |
| Nearline storage | 30 days |
| Coldline storage | 90 days |
| Archive storage | 365 days |

If an object is deleted, replaced, or moved before the minimum storage duration, you will be charged as if the object was stored for the minimum duration. For example, if you delete 500 GB of data in archive storage after 185 days, the early deletion fee covers the remaining 180 days (365 – 185 = 180 days):

- $0.0025 per GB per month * 500 * (180/30) months = $7.50

The early deletion fee is equivalent to the cost of storing the data for the remaining 6 months.
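The early deletion example above can be written as a small function. This is a sketch under the same simplification as the example (30-day months); the function name and parameters are hypothetical, and actual billing may prorate differently.

```python
def early_deletion_fee(data_gb: float, price_per_gb_month: float,
                       min_days: int, stored_days: int) -> float:
    """Charge for the unexpired part of the minimum storage duration.

    Uses 30-day months, matching the simplification in the example above.
    Returns 0 once the minimum duration has been met.
    """
    remaining_days = max(min_days - stored_days, 0)
    return data_gb * price_per_gb_month * remaining_days / 30


# 500 GB of archive storage ($0.0025 per GB per month) deleted after 185 days.
fee = early_deletion_fee(500, 0.0025, min_days=365, stored_days=185)
print(f"${fee:.2f}")
```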

Data Consumption

Using data from your data lake will involve API operations to find the files to be analyzed and to read the data. The costs associated with network usage will depend on whether the data is being analyzed within the same GCP region as the Cloud Storage bucket.

Depending on the storage class of the file requested, there may also be retrieval fees.

GCP Cloud Storage Pricing Considerations for Data Consumption

| Pricing dimension | Pricing considerations |
|---|---|
| API operations | The most likely API operations are listing data (storage.objects.list, a Class A operation) and reading data (storage.*.get, a Class B operation). Rates per 10,000 operations: standard storage, $0.05 Class A and $0.004 Class B; nearline storage, $0.10 Class A and $0.01 Class B; coldline storage, $0.10 Class A and $0.05 Class B; archive storage, $0.50 Class A and $0.50 Class B. |
| Retrieval fees | A retrieval fee applies when you read, copy, move, or rewrite object data or metadata that is stored using nearline storage, coldline storage, or archive storage. Retrieval rates: standard storage, free; nearline storage, $0.01 per GB; coldline storage, $0.02 per GB; archive storage, $0.05 per GB. |
| Network usage | Egress represents data sent from Cloud Storage. GCP calculates the cost of egress based on the following cases: network egress within Google Cloud (pricing is determined by the bucket’s location and the destination location); specialty network services (based on Google Cloud network products, e.g., Cloud CDN pricing); general network usage (applies when data moves from a Cloud Storage bucket to the Internet). |

Finding and reading data requires calling the storage.objects.list and storage.*.get API operations. To read 50 GB of data from standard storage, the estimated cost of the read operations is:

- 50 GB * 1024 = 51200 MB / 50 MB = 1024 file operations

- Standard storage, storage.*.get cost = 1024 / 10000 * 0.004 = $0.0004

If the data is read from archive storage, the estimated cost is:

- Archive storage, storage.*.get cost = 1024 / 10000 * 0.5 = $0.05

As noted in the table above, a retrieval fee also applies to data stored using nearline storage, coldline storage, or archive storage. For example, if the data that you need to analyze is stored in archive storage, then a retrieval fee will apply. For 50 GB per month, the estimated cost is:

- 50GB * $0.05 = $2.50
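To compare read costs across storage classes, the two calculations above (Class B read operations and retrieval fees) can be combined. This is a rough sketch using the October 2022 rates quoted in this article. The function name `monthly_read_cost` is illustrative, it assumes one storage.*.get call per 50 MB file, and it leaves out listing operations and network egress.

```python
# USD rates quoted in this article (October 2022).
CLASS_B_RATE = {  # per 10,000 read operations
    "standard": 0.004,
    "nearline": 0.01,
    "coldline": 0.05,
    "archive": 0.50,
}
RETRIEVAL_RATE = {  # per GB retrieved
    "standard": 0.0,
    "nearline": 0.01,
    "coldline": 0.02,
    "archive": 0.05,
}


def monthly_read_cost(data_gb: float, storage_class: str,
                      avg_file_mb: float = 50) -> float:
    """Class B read operations plus retrieval fees, in USD.

    Listing operations and network egress are not included.
    """
    reads = data_gb * 1024 / avg_file_mb
    operations = reads / 10_000 * CLASS_B_RATE[storage_class]
    retrieval = data_gb * RETRIEVAL_RATE[storage_class]
    return operations + retrieval


for cls in CLASS_B_RATE:
    print(f"{cls:<9} ${monthly_read_cost(50, cls):.4f}")
```

For the 50 GB per month in this article's scenario, the retrieval fee dominates the read cost for the colder classes, while the operations charge stays small.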

The estimated difference in storage cost between standard storage and archive storage for storing 5.39 TB for 30 days was $126.95 – $13.80 = $113.15.

Using this saving, we can estimate how much data could be retrieved from the archive storage class each month before the retrieval fees offset it:

- $113.15 / $0.05 = 2,263 GB / 1024 = 2.21 TB
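The same break-even estimate can be expressed in a few lines. This sketch uses the figures from this article and, like the calculation above, ignores API operation charges; the function name is hypothetical.

```python
def break_even_retrieval_gb(monthly_saving: float, retrieval_rate_per_gb: float) -> float:
    """GB that can be retrieved per month before retrieval fees cancel the saving."""
    return monthly_saving / retrieval_rate_per_gb


saving = 126.95 - 13.80  # standard vs. archive at-rest cost for 5.39 TB
gb = break_even_retrieval_gb(saving, 0.05)  # archive retrieval: $0.05 per GB
tb = gb / 1024
print(f"{gb:.0f} GB ({tb:.2f} TB) per month")
```

In this scenario, only 50 GB is consumed per month, which is far below the break-even volume.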

Given that the GCP archive storage class provides access to your data within milliseconds, it is a cost-efficient and flexible storage class for data lake architectures. These retrieval times differ from the Microsoft Azure Archive tier, where it can take up to 15 hours to retrieve (rehydrate) a blob, and from AWS S3 Glacier Deep Archive, where bulk retrieval can take up to 48 hours. AWS S3 Glacier Instant Retrieval also provides millisecond access to your data.

To avoid network egress charges, your data consumption workloads should run within the same location as your GCP Cloud Storage bucket. Data moves within the same location are free. For example, reading data in a us-east4 bucket to create a US BigQuery dataset is free. However, if you were reading data in a us-east4 bucket with a Compute Engine instance in Europe, then egress charges apply.

Data Security and Governance

Your data lake architecture must work within your data security and governance framework. Your framework should set out data residency and data retention policies, which may restrict which GCP regions you can use. This will be relevant for data storage costs as they vary by GCP region.

Before deciding on your bucket location, review the GCP documentation at Bucket locations | Cloud Storage within the context of your own data security and governance framework.

GCP Cloud Storage integrates with Identity and Access Management (IAM) to manage who has access to the data in your data lake. IAM is a free service; GCP only charges for the GCP services being used with IAM. For more information on using IAM with GCP Cloud Storage, visit IAM permissions for Cloud Storage.

Pricing Comparisons

The following table compares the cost of object storage between GCP, AWS and Microsoft Azure. The price per GB per month for the different storage classes within similarly located regions is:

GCP vs. AWS vs. Azure Cloud Storage Pricing

| Storage class (price per GB per month) | GCP Cloud Storage, Northern Virginia (us-east4) | AWS S3, US East (N. Virginia) | Azure Blob Storage, East US |
|---|---|---|---|
| Data storage volume, Hot Storage | $0.023 Standard | $0.023 Standard | $0.0208 LRS; $0.026 ZRS |
| Data storage volume, Cool Storage | $0.013 Nearline; $0.006 Coldline | $0.0125 Infrequent Access | $0.0152 LRS; $0.019 ZRS |
| Data storage volume, Archive Storage | $0.0025 Archive | $0.004 Archive Instant Access; $0.00099 Deep Archive | $0.00099 LRS |

For some storage classes, multiple prices are shown, which complicates finding the right solution. For example, Azure Blob Storage Hot Storage shows prices for Locally Redundant Storage and Zone Redundant Storage. LRS Azure Blob Storage copies your data synchronously three times within a single physical location in the primary region, whereas ZRS copies your data synchronously across three Azure availability zones in the primary region.

Hot Storage on GCP stores data redundantly on multiple devices across a minimum of two availability zones (AZs). AWS stores data redundantly on multiple devices across a minimum of three AZs. This means that Standard storage on GCP and AWS is more comparable to Azure ZRS than to LRS.

It is important to look beyond the pricing and consider whether the durability and data protection are suitable for your workload. Your data security and governance framework may require that data be redundantly stored across multiple physical locations, which would exclude using Azure LRS.

Recommendations

Below are three ways you can optimize your GCP storage costs.

Use Object Lifecycle Management and Storage Classes

It is recommended to use Object Lifecycle Management to optimize your data storage costs. In the above examples, the cost savings of using archive storage are significant.

In some data lakes, the raw data is transformed into formats that are easier to analyze. If the raw data is no longer required, then standard storage provides the flexibility to delete the data without incurring early deletion fees. If the raw data needs to be kept, “downgrade” the storage class to take advantage of lower at-rest storage costs after transformation.

Take Advantage of Regional Pricing Differences

The cost of data storage varies between the GCP regions. If your data security and governance framework is flexible, pick a GCP region that provides the lowest at-rest data storage pricing.

You should also periodically review pricing to be aware of any pricing updates. For example, GCP recently announced pricing updates for Cloud Storage that will take effect on April 1, 2023.

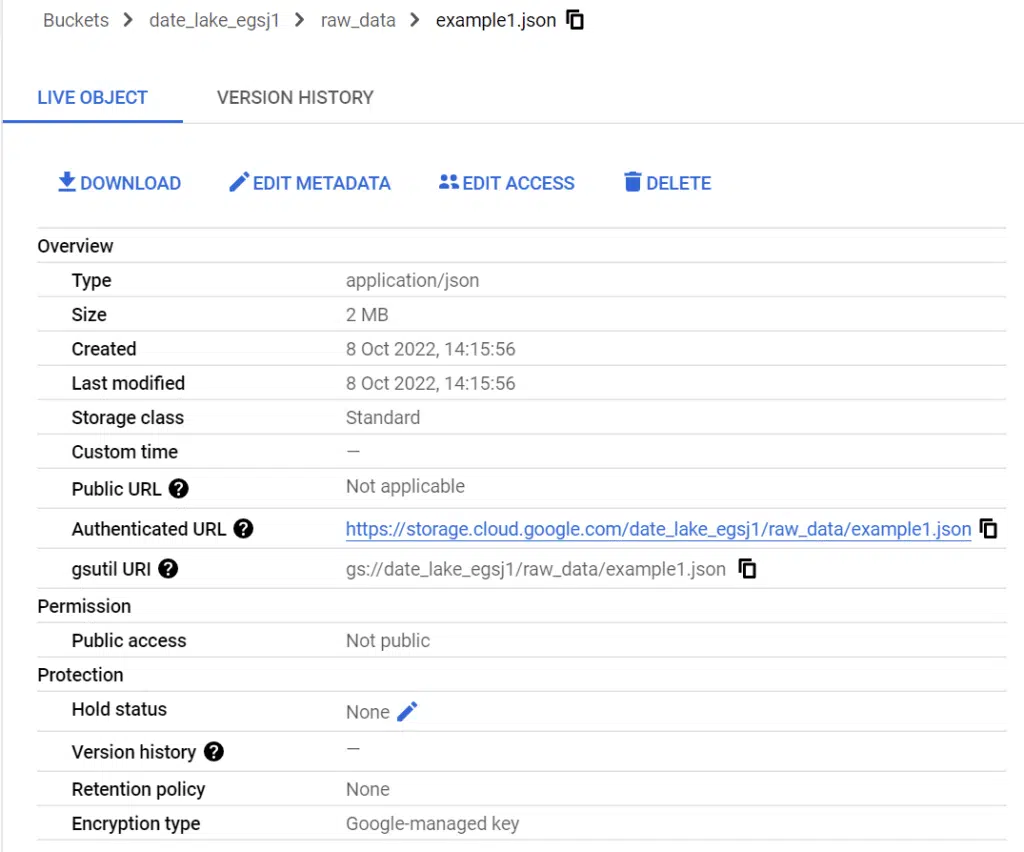

Organize Your Data

Organizing your data facilitates efficient data consumption and enables creation of lifecycle conditions for Object Lifecycle Management.

For example, a matchesPrefix condition can change the storage class for all raw data. To achieve this, your raw data needs to be organized at the top level of your bucket, such as gs://example_bucket/raw_data/. The condition "matchesPrefix": ["raw_data/"] will then apply to all files under raw_data/. Combining this with an age condition could help archive the data under raw_data/ after analysis.
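As an illustration, a lifecycle rule combining the matchesPrefix and age conditions might look like the sketch below, which builds the configuration as a Python dictionary and prints it as JSON. The raw_data/ prefix comes from the example above; the 90-day threshold and the choice of the archive class are assumptions made for illustration, not recommendations from GCP.

```python
import json

# Lifecycle configuration in the JSON shape used by Cloud Storage.
# The 90-day age threshold is an illustrative assumption.
lifecycle_config = {
    "lifecycle": {
        "rule": [
            {
                # Move matching objects to the archive storage class.
                "action": {"type": "SetStorageClass", "storageClass": "ARCHIVE"},
                # Both conditions must hold: the object is at least 90 days
                # old and its name starts with "raw_data/".
                "condition": {"age": 90, "matchesPrefix": ["raw_data/"]},
            }
        ]
    }
}

config_json = json.dumps(lifecycle_config, indent=2)
print(config_json)
```

The printed JSON could be saved to a file and applied to a bucket, for example with the gsutil lifecycle set command. Each transition is billed as a Class A operation at the destination class rate, as described in the Data Storage section.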

Although GCP Cloud Storage has no notion of folders, organizing your data within a hierarchy will help you locate data of interest. For example, if you are using the BigQuery Data Transfer Service for Cloud Storage, consider the wildcard support for Cloud Storage URIs when organizing your data.

Conclusion

GCP Cloud Storage provides flexible object storage for data lake architectures. To estimate your costs, you need to consider the data storage volume, network usage, API operations class, retrieval costs, and early deletion fees.

We have seen that the API operations cost of ingesting data is negligible compared to the at-rest storage costs. Using a storage class other than standard storage reduces your at-rest storage costs. Even the lowest-cost class, archive storage, still provides effectively instant access to your data. However, the nearline, coldline, and archive storage classes are subject to minimum storage durations and retrieval fees.

For cost-efficient data consumption, your workloads should run within the same location as your GCP Cloud Storage bucket, and you should consider whether your data organization facilitates Object Lifecycle Management and finding data.

Your data lake architecture should fit within your own organization’s data security and governance framework.
