Kubernetes is an open-source platform developed by Google for managing containerized workloads. Containerization solves the problem of traditional application deployment by making apps independent of any underlying infrastructure. Because containerized applications are loosely coupled to their hosts, they are easy to replicate across cloud providers and operating system (OS) distributions. Hosted Kubernetes – A Strategic Overview provides more context on the history and inner workings of Kubernetes.

Google Kubernetes Engine (GKE) is a Kubernetes platform for easy application scaling and maintenance. There are two operational modes: Autopilot and Standard. The former manages the entire cluster and node infrastructure, whereas the latter provides configuration flexibility and complete control over how clusters and nodes are handled. Each mode has its own pricing scheme, which is the subject of this article.

Executive summary

We will be discussing the following concepts, summarized below for your reference:

| Concept | Description |
|---|---|
| Standard mode | allows users to control the underlying node infrastructure |
| Autopilot mode | provisions and manages node infrastructure based on the user’s requirements |
| Cluster management fee | flat fee paid per hour regardless of cluster mode, topology, and regionality |
| Multi-cluster ingress | mechanism that allows load-balancing amongst clusters in different regions |
| GKE backups | service for taking and preserving cluster snapshots |

GKE pricing

The pricing for GKE is driven primarily by the chosen mode (Standard or Autopilot). Standard is charged based on the configuration of the machines being used and is subject to Compute Engine pricing. Autopilot has a fixed per-resource price table, so the user does not need to deal with machine configuration. Regardless of mode, there will always be a cluster management fee. When applicable, there are also multi-cluster ingress and GKE backup fees.

Note that there are slight differences in pricing between regions. All price tables in this article will be based on Iowa (us-central1). The cluster management fee of $0.10 per hour applies to all GKE clusters.

Standard mode

Standard mode allows users to configure clusters and their underlying infrastructure. Users have maximum freedom but are responsible for cluster management.

Cluster topology can be zonal or regional. A zonal cluster’s control plane runs in a single zone with no replicas, and its nodes run in that same zone unless the cluster is multi-zonal. Regional clusters replicate the control plane and nodes across multiple zones. The topology does not directly affect pricing but does impact availability.

Since Standard mode uses Compute Engine instances as worker nodes, billing is primarily dictated by Compute Engine pricing. The default pricing scheme is on-demand, where instances are billed per second. Users can obtain price reductions through committed-use discounts, which require agreeing to a one-year or three-year contract, or through Spot VMs.

A machine’s pricing depends on its type, which caters to specific use cases. Machine types describe how many vCPUs and how much memory are allocated to a VM, and they fall into the following five categories:

- General-purpose

- Compute-optimized

- Memory-optimized

- Accelerator-optimized

- Shared-core

The pricing for each machine type differs and can be found in the Compute Engine pricing documentation. The price table below is for the E2 machine type.

| Item | On-demand price | Spot price | 1-year commitment price | 3-year commitment price |
|---|---|---|---|---|
| Predefined vCPUs (per vCPU per hour) | $0.021811 | $0.006543 | $0.013741 | $0.009815 |
| Predefined memory (per GB per hour) | $0.002923 | $0.000877 | $0.001842 | $0.001316 |
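To illustrate how the per-resource rates above translate into a node price, here is a minimal Python sketch. The node shape (4 vCPUs and 16 GB of memory), the function names, and the dictionary keys are assumptions made for illustration; the rates are copied from the E2 table, and 730 hours per month matches the convention used in the worked example later in this article.

```python
# E2 rates copied from the table above (us-central1, USD per hour).
E2_RATES = {
    "on_demand": {"vcpu": 0.021811, "memory_gb": 0.002923},
    "spot": {"vcpu": 0.006543, "memory_gb": 0.000877},
    "commit_1y": {"vcpu": 0.013741, "memory_gb": 0.001842},
    "commit_3y": {"vcpu": 0.009815, "memory_gb": 0.001316},
}

HOURS_PER_MONTH = 730  # same convention as the article's worked example


def e2_node_hourly_cost(vcpus, memory_gb, plan="on_demand"):
    """Hourly cost of one E2 node: vCPU charge plus memory charge."""
    rates = E2_RATES[plan]
    return vcpus * rates["vcpu"] + memory_gb * rates["memory_gb"]


def e2_node_monthly_cost(vcpus, memory_gb, plan="on_demand"):
    """Monthly cost of one E2 node running for the whole month."""
    return e2_node_hourly_cost(vcpus, memory_gb, plan) * HOURS_PER_MONTH


if __name__ == "__main__":
    # Hypothetical node shape: 4 vCPUs, 16 GB of memory.
    for plan in E2_RATES:
        monthly = e2_node_monthly_cost(4, 16, plan)
        print(f"{plan:>10}: ${monthly:.2f} per month")
```

The sketch leaves out the cluster management fee, disks, and network charges, so it gives only the compute portion of the bill.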


Autopilot mode

This mode fully handles the management of clusters and can be used to deploy and maintain them quickly and easily. However, this mode provides little flexibility in terms of the underlying infrastructure.

Autopilot mode also features Spot Pods for fault-tolerant workloads, which work like Spot VMs: pods run at a lower price but may be evicted when standard pods require the resources.

Concerning pricing, Standard mode operates on a pay-per-node basis, and Autopilot runs on a pay-per-pod basis. Users are billed based on the pods’ CPU, memory, and ephemeral storage requests.

There are two compute classes for Autopilot pods:

- general-purpose

- scale-out

Users can specify the compute class they wish to use for their workloads. General-purpose is the default class and is best used for medium-intensity workloads such as web servers, small to medium databases, or development environments.

Scale-out machines have simultaneous multi-threading turned off and are optimized for scale. They best suit intense workloads like containerized microservices, data log processing, or large-scale Java applications.

The price table for General-purpose pods can be found below.

| Item | Regular price | Spot price | 1-year commitment price | 3-year commitment price |
|---|---|---|---|---|
| vCPU per hour | $0.0445 | $0.0133 | $0.0356 | $0.0244750 |
| Pod Memory per GB-hour | $0.0049225 | $0.0014767 | $0.0039380 | $0.0027074 |
| Ephemeral Storage per GB-hour | $0.0000548 | $0.0000548 | $0.0000438 | $0.0000301 |
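Because Autopilot bills on pod resource requests, the cost of a single pod can be estimated directly from the table above. The following is a rough sketch, not an official calculator: the pod requests (0.5 vCPU, 2 GB of memory, 1 GB of ephemeral storage) and all names are hypothetical, and only the general-purpose rates from the table are used.

```python
# General-purpose Autopilot rates from the table above (us-central1, USD per hour).
GENERAL_PURPOSE_RATES = {
    "regular": {"vcpu": 0.0445, "memory_gb": 0.0049225, "ephemeral_gb": 0.0000548},
    "spot": {"vcpu": 0.0133, "memory_gb": 0.0014767, "ephemeral_gb": 0.0000548},
    "commit_1y": {"vcpu": 0.0356, "memory_gb": 0.0039380, "ephemeral_gb": 0.0000438},
    "commit_3y": {"vcpu": 0.0244750, "memory_gb": 0.0027074, "ephemeral_gb": 0.0000301},
}


def pod_hourly_cost(cpu_request, memory_gb_request, ephemeral_gb_request, plan="regular"):
    """Hourly cost of one pod, billed on its CPU, memory, and ephemeral storage requests."""
    rates = GENERAL_PURPOSE_RATES[plan]
    return (
        cpu_request * rates["vcpu"]
        + memory_gb_request * rates["memory_gb"]
        + ephemeral_gb_request * rates["ephemeral_gb"]
    )


if __name__ == "__main__":
    # Hypothetical pod: 0.5 vCPU, 2 GB memory, 1 GB ephemeral storage.
    for plan in GENERAL_PURPOSE_RATES:
        hourly = pod_hourly_cost(0.5, 2, 1, plan)
        print(f"{plan:>10}: ${hourly:.7f} per hour")
```

Multiplying the hourly figure by the number of replicas and the hours they run gives a first-order monthly estimate for a workload.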

For comparison, here is the price table for Scale-out compute class pods.

| Item | Regular price | Spot price | 1-year commitment price | 3-year commitment price |
|---|---|---|---|---|
| Arm Pod vCPU per hour | $0.0356 | $0.0107 | $0.02848 | $0.01958 |
| Arm Pod Memory per GB-hour | $0.003938 | $0.0011814 | $0.0031504 | $0.0021659 |
| Pod vCPU per hour | $0.0561 | $0.0168 | $0.04488 | $0.030855 |
| Pod Memory per GB-hour | $0.0062023 | $0.0018607 | $0.0049618 | $0.0034113 |

Multi-cluster ingress

Multi-cluster ingress is a service that load-balances traffic across Kubernetes clusters in different regions. Its billing depends on Anthos, a Google Cloud service that consolidates other services that can be either internal or external to Google Cloud. If the Anthos API is enabled, multi-cluster ingress is billed under Anthos. Otherwise, standalone pricing applies at $0.0041096 per backend Pod per hour.
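As a quick sanity check on the standalone rate, the sketch below estimates a monthly charge. The function name and the pod count are hypothetical, and the 730-hour month is the convention used elsewhere in this article; only the hourly rate comes from the text above.

```python
MCI_RATE_PER_POD_HOUR = 0.0041096  # standalone rate quoted above, USD
HOURS_PER_MONTH = 730


def mci_monthly_cost(backend_pods, hours=HOURS_PER_MONTH):
    """Standalone multi-cluster ingress cost: pods x hours x hourly rate."""
    return backend_pods * hours * MCI_RATE_PER_POD_HOUR


if __name__ == "__main__":
    # Hypothetical deployment with 10 backend pods running all month.
    print(f"${mci_monthly_cost(10):.2f} per month")
```

At this rate, one backend pod running for a full 730-hour month comes to roughly $3.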

Backups

The billing for a GKE backup depends on two dimensions:

- backup management

- backup storage

Backup management costs $1 per pod per month, and storage costs $0.028 per GB per month. So if a user backs up 10 pods in a given month with 20 GB of data, the calculation would be as follows:

Backup management fee = $1 x 10 pods = $10 per month

Backup storage fee = $0.028 x 20 = $0.56 per month

Total = $10 + $0.56 = $10.56 per month
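The same calculation can be expressed as a small Python helper, which makes it easy to try other pod counts and data volumes. The function and parameter names are illustrative assumptions; the two rates are the ones quoted above.

```python
BACKUP_MANAGEMENT_RATE = 1.00  # USD per pod per month, as quoted above
BACKUP_STORAGE_RATE = 0.028    # USD per GB per month, as quoted above


def gke_backup_monthly_cost(pods, storage_gb):
    """Return (management fee, storage fee, total) for one month of backups."""
    management_fee = pods * BACKUP_MANAGEMENT_RATE
    storage_fee = storage_gb * BACKUP_STORAGE_RATE
    return management_fee, storage_fee, management_fee + storage_fee


if __name__ == "__main__":
    # The example from the text: 10 pods and 20 GB of backup data.
    management, storage, total = gke_backup_monthly_cost(10, 20)
    print(f"management=${management:.2f} storage=${storage:.2f} total=${total:.2f}")
```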

Example pricing scenario and calculation

A user would like to provision a node pool for their web application. It should be available 24/7 with little to no downtime. The expected workload is not too heavy, so machines with four vCPUs and 15 GB of RAM should be able to handle all requests. The user plans to run two such instances and would like to compare the cost of GKE Standard and GKE Autopilot.

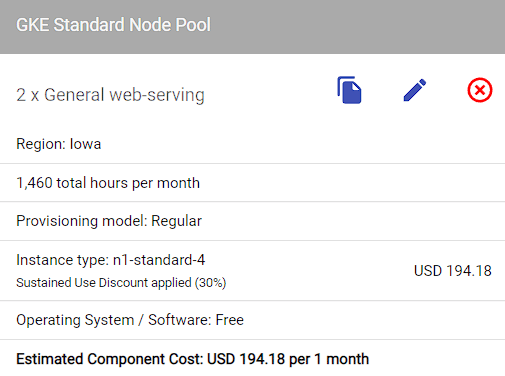

Here is what the cost breakdown looks like based on the GCP pricing calculator:

Based on the Compute Engine price table, an n1-standard-4 instance costs $0.189999 per hour. Because the instances run 24/7, the sustained use discount applies; it is estimated at around 30%.

Base price: 2 instances x 730 hours x $0.189999 = $277.39854

After discount: $277.39854 x 0.7 = $194.18 per month

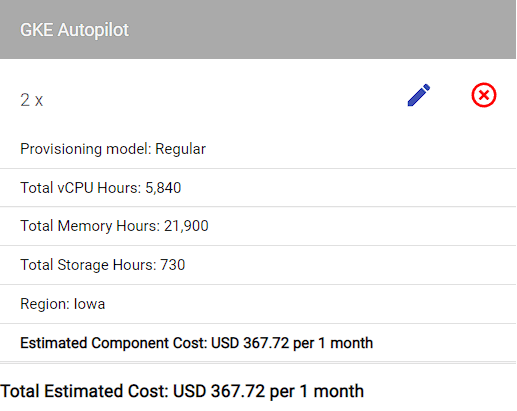

The cost breakdown for the equivalent Autopilot version would be:

Note that we are assuming the same resources as the n1-standard-4 machine type used in the Standard mode calculation: 2 instances, each with 4 vCPUs and 15 GB of memory. Ephemeral storage is set to 0.5 GB per instance.

vCPU: 2 instances x 4 vCPUs x 730 hours x $0.0445 = $259.88

Memory: 2 instances x 15 GB x 730 hours x $0.0049225 = $107.80275

Ephemeral storage: 2 instances x 0.5 GB x 730 hours x $0.0000548 = $0.04

Total: $259.88 + $107.80275 + $0.04 = $367.72 per month
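The two calculations above can be reproduced with a short Python sketch, which also makes it easy to vary the instance count or discount. The function names and default arguments are assumptions for illustration; the rates, the 730-hour month, and the roughly 30% sustained use discount are taken from the figures above. Like those figures, the sketch omits the cluster management fee, which applies equally to both modes.

```python
HOURS_PER_MONTH = 730
INSTANCES = 2


def standard_monthly_cost(hourly_rate=0.189999, sustained_use_discount=0.30):
    """GKE Standard: n1-standard-4 nodes with the estimated sustained use discount."""
    base = INSTANCES * HOURS_PER_MONTH * hourly_rate
    return base * (1 - sustained_use_discount)


def autopilot_monthly_cost(vcpus=4, memory_gb=15, ephemeral_gb=0.5):
    """GKE Autopilot: general-purpose regular rates applied to the same resources."""
    hourly_per_instance = (
        vcpus * 0.0445
        + memory_gb * 0.0049225
        + ephemeral_gb * 0.0000548
    )
    return INSTANCES * HOURS_PER_MONTH * hourly_per_instance


if __name__ == "__main__":
    standard = standard_monthly_cost()
    autopilot = autopilot_monthly_cost()
    print(f"Standard:  ${standard:.2f} per month")
    print(f"Autopilot: ${autopilot:.2f} per month")
    print(f"Autopilot / Standard ratio: {autopilot / standard:.2f}")
```

The comparison assumes the Autopilot pods request the full node-equivalent resources around the clock. A workload whose requests are smaller than the node size would narrow the gap, since Autopilot bills only for what the pods request.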


Comparison across cloud providers

AWS EKS (Elastic Kubernetes Service)

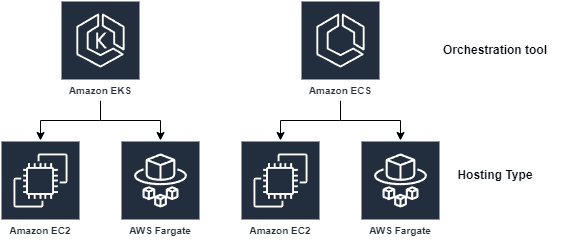

There are two main orchestration tools for containerized applications on AWS:

- Amazon ECS (Elastic Container Service)

- Amazon EKS (Elastic Kubernetes Service)

Each orchestration tool has two hosting types:

- AWS EC2: the counterpart of GCP Compute Engine

- AWS Fargate: the counterpart of GCP GKE Autopilot

The main difference between EKS and ECS is that the former is built on Kubernetes, whereas the latter is AWS’s own orchestrator. That means that the direct counterpart for GCP GKE is AWS EKS.

There is a $0.10 per cluster per hour flat fee similar to GCP GKE’s cluster management fee. If the hosting type used is Amazon EC2, then billing depends on EC2 pricing, just as in GCP GKE’s Standard mode, where the cost ultimately depends on the VMs.

AWS EC2 has a pricing table for each instance type wherein per-second billing applies. There are also pricing structures such as on-demand, savings plans, reserved, and spot instances.

AWS Fargate is very similar to GKE Autopilot because users pay only for the resources their workloads request. The pricing table is as follows:

| Resource | Price |
|---|---|
| Per vCPU per hour | $0.012144 |
| Per GB per hour | $0.0013335 |

Here is a sample comparison between AWS Fargate and GKE Autopilot based on regular pricing.

| Resource | Quantity | Hours per month | AWS Fargate rate | GKE Autopilot rate | AWS Fargate total per month | GKE Autopilot total per month |
|---|---|---|---|---|---|---|
| vCPU | 4 | 730 | $0.012144 | $0.0445 | $35.46048 | $129.94 |
| GB | 8 | 730 | $0.0013335 | $0.0049225 | $7.78764 | $28.7474 |
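The per-row totals in the comparison can be recomputed, and summed per service, with the following sketch. It uses only the rates listed in the table above; the dictionary layout and function name are illustrative assumptions.

```python
HOURS_PER_MONTH = 730

# Hourly rates (USD) as listed in the comparison table above.
RATES = {
    "fargate": {"vcpu": 0.012144, "memory_gb": 0.0013335},
    "autopilot": {"vcpu": 0.0445, "memory_gb": 0.0049225},
}


def monthly_cost(service, vcpus, memory_gb, hours=HOURS_PER_MONTH):
    """Monthly cost for a workload billed per vCPU-hour and per GB-hour."""
    rates = RATES[service]
    return hours * (vcpus * rates["vcpu"] + memory_gb * rates["memory_gb"])


if __name__ == "__main__":
    # Same workload as the table: 4 vCPUs and 8 GB of memory.
    for service in RATES:
        print(f"{service:>9}: ${monthly_cost(service, 4, 8):.2f} per month")
```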

Azure AKS (Azure Kubernetes Service)

Unlike GCP and AWS, Azure AKS does not have a cluster management fee. However, there is an option to pay for an Uptime SLA, which costs $0.10 per cluster per hour. There is no fully managed service similar to GKE Autopilot or AWS Fargate; therefore, the Azure AKS cost is based solely on VM pricing. Users can save money with 1-year or 3-year reservations and spot instances.

| Savings vehicle | Estimated savings |
|---|---|
| 1-year reservation | 48% |
| 3-year reservation | 65% |
| Spot instances | 90% |

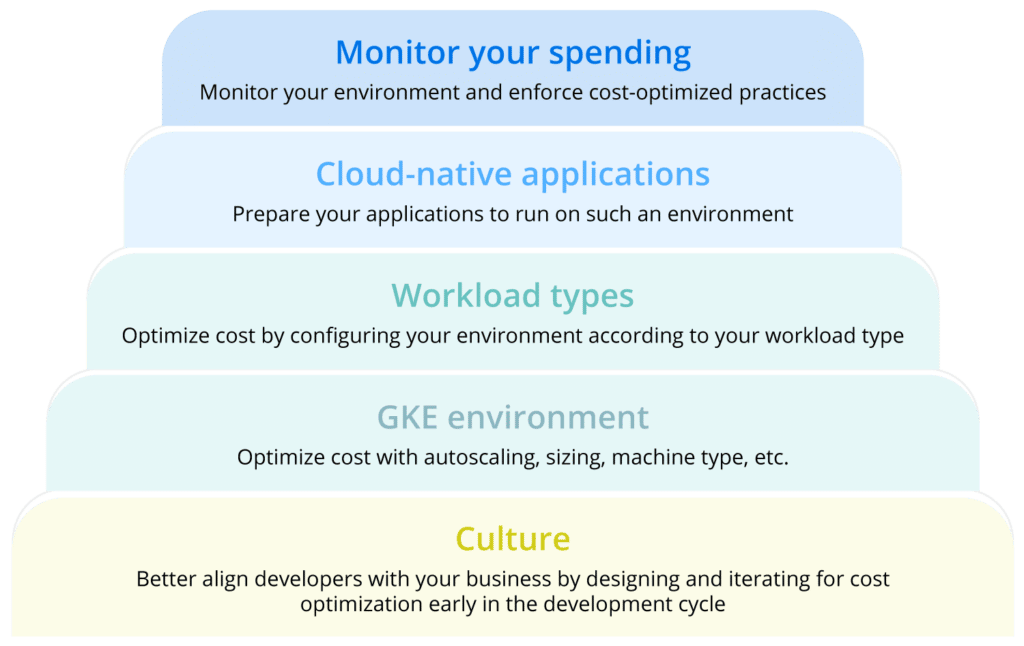

Recommendations for cost optimization

The following are best practices for cost optimization recommended by Google.

Monitor your spending

Cost optimization ultimately boils down to proper monitoring. Features like recommendations, alerts, and quotas keep projects within budget. For GKE specifically, you have the following options:

- use the monitoring dashboard

- refer to GKE usage metering

- use Metrics Server to examine metrics that determine autoscaling behavior

- use quotas

- use Anthos Policy Controller

- enforce policy constraints in your CI/CD pipeline

One third-party tool made for this purpose is CloudBolt.

Configure Kubernetes applications correctly

The best features of GKE are also the most cost-effective. For instance, vertical and horizontal autoscaling are among the most reliable cost-saving mechanisms because they adjust resources automatically to the current workload. To start, though, you must understand the application’s concurrency by running stress tests, which reveal the most likely cost scenarios during peak usage.

Next, once that baseline has been established, you can define the PodSpec accurately (if using Autopilot) or choose the most appropriate machine type (if using Standard). That way, resource allocation does not become a hit-or-miss affair. If the initial configurations aren’t precisely correct, it shouldn’t be a problem because, in the cloud, it’s easy to reconfigure and scale.

Use GKE cost-optimization features and options

GKE itself offers multiple ways to save on costs. Aside from fine-tuning autoscaling based on the baseline mentioned above, you can:

- locate clusters in cheaper regions provided that latency is bearable

- leverage committed-use discounts

- use a multi-tenancy strategy for smaller clusters to save on add-on costs

- review how clusters in different regions send and receive traffic

Promote a cost-saving culture

Cost optimization also comes from promoting a frugal mindset. Employees who understand how charges are calculated can recognize how their decisions contribute to overall costs and help enforce efficiency.

Conclusion

To recap, GCP GKE has two modes: Standard and Autopilot. Standard allows users to configure their underlying infrastructure, and billing depends on the machine types of the worker nodes. Autopilot is a fully managed service where users only have to define a PodSpec and don’t have to worry about other technical details. The billing for GKE Autopilot depends on how much vCPU, memory, and ephemeral storage the pods request.

Several GKE features contribute to cost-effectiveness, such as autoscaling, load-balancing, and monitoring. One example is the option to use Spot VMs/Pods, where users can benefit from cheaper pricing provided their workloads are fault-tolerant. Whatever options you choose, promoting a sense of efficiency and an understanding of cost structures helps developers contribute to cost-cutting processes and allows for the best utilization of funds.
