Updated on November 27, 2024

Managing costs in Google Cloud Platform (GCP) is a complex yet vital task for businesses leveraging its powerful infrastructure. From compute and storage to BigQuery and cloud databases, GCP provides the flexibility to scale and innovate—but without a strategic approach to cost management, organizations risk overspending.

GCP’s pay-as-you-go pricing model offers inherent advantages, but dynamic costs can scale rapidly. Native tools like Billing Reports, Recommendation Hub, and Storage Lifecycle Policies lay the foundation for cost optimization.

However, maximizing financial efficiency often requires more than just reactive adjustments. Augmented FinOps—combining AI-driven insights, automation, and continuous monitoring—allows businesses to streamline their GCP spend while ensuring performance and innovation.

This guide explores GCP cost optimization strategies, practical best practices, and how advanced platforms can augment native GCP tools to drive cost control across multi-cloud environments.

Why is GCP Cost Optimization Important?

Financial Control

The pay-as-you-go model of GCP offers flexibility but can quickly lead to runaway costs if left unchecked. Without careful monitoring, organizations might overspend on idle resources, underutilized instances, or poorly configured services. Effective cost optimization strategies provide the tools and insights to identify these inefficiencies and reduce waste. By establishing cost guardrails and leveraging automated monitoring, businesses can maintain predictable budgets, prevent unnecessary expenses, and allocate their financial resources more effectively.

Operational Efficiency

In dynamic cloud environments, resource demands can shift rapidly, making it challenging to align capacity with actual workload requirements. Overprovisioning—while often done to avoid performance lags—can result in inflated bills without tangible benefits. GCP cost optimization ensures that resources are right-sized to meet demand, enabling teams to focus on innovation rather than chasing inefficiencies. By using automation and proactive management tools, organizations can scale resources seamlessly to accommodate spikes in demand, ensuring uninterrupted performance while minimizing waste.

Improved ROI

Every dollar saved on cloud costs is a dollar that can be reinvested into strategic initiatives. By optimizing GCP expenses, businesses can redirect funds toward projects that drive growth, innovation, or operational improvements. Whether it’s funding a new AI initiative, enhancing customer experiences, or streamlining operations, improved ROI is a natural byproduct of effective cost optimization. Additionally, granular visibility into spending helps decision-makers prioritize investments with the highest potential impact, ensuring the organization gets the most value from its cloud budget.

Scalability and Flexibility

GCP’s ability to scale resources on demand is one of its greatest strengths, but without proper optimization, this scalability can become a double-edged sword. Mismanaged scaling can result in overspending or underperformance, limiting the cloud’s effectiveness. Cost optimization strategies ensure that organizations retain the flexibility to adapt to changing business needs without overspending. By leveraging predictive analytics and automated scaling solutions, businesses can confidently expand their cloud footprint, knowing their costs are well-managed and aligned with operational goals.

GCP cost optimization best practices

| Best Practice | Description |
| --- | --- |
| Streamline compute usage with rightsizing and scheduling | Reduce overprovisioned resources by identifying idle GCE instances and unattached disks using GCP’s Recommender. Right-size instances by aligning resource configurations with workload requirements, and automate schedules to power down non-critical instances during off-hours, reducing runtime expenses significantly. |
| Enhance scalability with autoscaling | Leverage GCP’s autoscaling capabilities to dynamically adjust resources based on workload demands. Managed Instance Groups (MIGs) automatically scale resources up or down using policies tied to performance metrics like CPU utilization or network traffic, ensuring efficiency and cost savings. |
| Maximize storage efficiency with tiering and policies | Analyze storage usage patterns and migrate data to cost-effective storage tiers such as Nearline, Coldline, or Archive. Configure lifecycle management policies to automate the movement of data between tiers based on criteria such as object age, keeping storage aligned with usage patterns while minimizing manual oversight. |
| Reduce BigQuery costs with smarter analysis and storage choices | Select the appropriate pricing model, on-demand or BigQuery Editions, using GCP’s Slot Recommender. Consider the physical storage billing model, which bills for compressed bytes, and implement guardrails to limit query size and frequency. These strategies keep data analysis cost-effective while retaining performance. |
| Leverage committed use, sustained use discounts, and Preemptible VMs | Sustained Use Discounts (SUDs) apply automatically to consistent usage, while Committed Use Discounts (CUDs) suit predictable workloads. Run fault-tolerant, interruptible workloads on Spot VMs (the successor to Preemptible VMs). Forecast workload demand to align commitments with actual usage, ensuring predictable savings without overcommitting. |
| Control logging costs with retention and exclusion policies | Use exclusion filters to avoid unnecessary log ingestion and storage, and configure retention policies to purge older logs. Limit the number of log sinks to reduce routing costs, and monitor logging volume regularly to refine configurations as operational needs evolve. |
| Cultivate a cost-conscious culture | Establish governance boards to provide centralized oversight and enforce cost optimization best practices. Share cost data and actionable insights across departments to foster collaboration, reduce duplication, and create accountability. Empower teams with transparency and financial discipline for ongoing optimization. |

Streamline compute usage with rightsizing and scheduling

Compute services like Google Compute Engine (GCE) and Google Kubernetes Engine (GKE) often dominate GCP bills, but careful management can significantly reduce unnecessary expenses. Idle resources, such as underutilized instances or unattached disks, are a common source of waste. Using tools like GCP’s Recommender, organizations can analyze historical usage patterns to pinpoint these idle assets.

For example, a virtual machine running at only 5% CPU utilization might be flagged for review. By deallocating such resources, organizations can achieve immediate cost savings and foster better long-term financial discipline.
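The idle-resource check described above can be sketched in a few lines of Python. This is a minimal sketch, not Recommender's actual logic: it assumes per-VM CPU utilization samples have already been exported (for example, from Cloud Monitoring) into the hypothetical `VmUsage` records shown, and it simply flags any VM whose average utilization is at or below a 5% threshold.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class VmUsage:
    """Hypothetical record: CPU utilization samples (0.0-1.0) exported per VM."""
    name: str
    cpu_samples: list[float]


def flag_idle_vms(vms: list[VmUsage], threshold: float = 0.05) -> list[str]:
    """Return names of VMs whose average CPU utilization is at or below threshold."""
    return [vm.name for vm in vms
            if vm.cpu_samples and mean(vm.cpu_samples) <= threshold]


fleet = [
    VmUsage("web-1", [0.42, 0.55, 0.61]),
    VmUsage("legacy-batch", [0.03, 0.04, 0.05]),
    VmUsage("dev-sandbox", [0.01, 0.02, 0.01]),
]
print(flag_idle_vms(fleet))  # ['legacy-batch', 'dev-sandbox']
```

Flagged VMs are candidates for review rather than automatic deletion; a low average can hide a short but critical daily job.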

Another effective strategy is rightsizing, which involves aligning resource configurations, such as CPU, memory, and disk space, with actual workload requirements. Chasing savings by choosing instances smaller than the workload needs degrades performance, which can cost the business more than it saves. At the same time, choosing instance sizes much larger than necessary increases cost with no real performance benefit.

A good rule of thumb is to aim for a size that offers 5-10% more resources than required for a use case at its peak utilization. However, this will only work for use cases where normal and peak utilization aren’t very far apart. Otherwise, you will end up with a very expensive instance that will only be used to its fullest extent for a short peak utilization period and underutilized the rest of the time.
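The headroom rule of thumb can be expressed as a small helper. The sketch below assumes peak and average vCPU usage are already known, uses a hypothetical list of available vCPU sizes, and treats a peak-to-average ratio above 2 (an arbitrary illustrative cutoff) as a sign that the workload is too spiky for fixed sizing.

```python
from typing import Optional


def recommend_vcpus(peak_vcpus: float, avg_vcpus: float, headroom: float = 0.10,
                    max_peak_to_avg: float = 2.0,
                    sizes: tuple[int, ...] = (2, 4, 8, 16, 32, 64)) -> Optional[int]:
    """Pick the smallest listed vCPU size covering peak usage plus headroom.

    Returns None when peak usage is far above average usage, since sizing for
    a short peak would leave the instance underutilized most of the time.
    The sizes tuple is a hypothetical catalog, not an actual machine-type list.
    """
    if avg_vcpus <= 0:
        raise ValueError("avg_vcpus must be positive")
    if peak_vcpus / avg_vcpus > max_peak_to_avg:
        return None  # spiky workload: consider autoscaling or a burstable type
    needed = peak_vcpus * (1 + headroom)  # e.g. 10% above peak
    for size in sorted(sizes):
        if size >= needed:
            return size
    raise ValueError("workload exceeds the largest listed size")


print(recommend_vcpus(peak_vcpus=3.6, avg_vcpus=2.5))  # 4
print(recommend_vcpus(peak_vcpus=6.0, avg_vcpus=1.0))  # None
```

Returning `None` for spiky workloads is a deliberate choice: rather than recommending an expensive instance sized for a brief peak, it signals that autoscaling or a burstable machine type may fit better.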

Some GCP machine types support CPU bursting, which lets an instance use more CPU than its baseline allocation for short periods of time. Shared-core machine types such as e2-micro, e2-small, and e2-medium work this way. The feature is especially handy for workloads whose resource consumption is low and steady throughout the day with an occasional spike in utilization: the instance accrues burst credits while it runs below its baseline CPU allocation and spends those credits to absorb the spike.

Organizations can also optimize compute costs by automating the scheduling of non-critical environments, such as development or testing systems. These instances often do not need to run 24/7. By implementing start/stop schedules that align with operational hours, teams can significantly lower runtime expenses. For instance, powering down development instances during nights and weekends can reduce weekly costs by as much as 70%. Automation tools make these schedules seamless to execute, ensuring consistent savings with minimal manual effort.
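The 70% figure is easy to sanity-check with arithmetic. This sketch assumes cost scales linearly with VM running hours, which holds for vCPU and memory charges; persistent disks and reserved static IP addresses are still billed while a VM is stopped, so real savings will be somewhat lower. The function name is illustrative.

```python
HOURS_PER_WEEK = 24 * 7  # 168


def schedule_savings(weekday_hours_on: float, weekdays_on: int = 5,
                     weekend_hours_on: float = 0.0) -> float:
    """Fraction of always-on runtime cost avoided by a start/stop schedule.

    Assumes cost is proportional to running hours; persistent disks and
    reserved static IPs keep billing while the VM is stopped.
    """
    hours_on = weekday_hours_on * weekdays_on + weekend_hours_on
    if not 0 <= hours_on <= HOURS_PER_WEEK:
        raise ValueError("schedule must fit within one week")
    return 1 - hours_on / HOURS_PER_WEEK


print(f"{schedule_savings(10):.0%}")  # 70%  (50 of 168 hours)
print(f"{schedule_savings(12):.0%}")  # 64%  (60 of 168 hours)
```

In other words, the 70% figure corresponds to running development instances about 10 hours each weekday and keeping them off on weekends.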

Advanced platforms like CloudBolt enhance compute cost optimization by integrating real-time insights and actionable recommendations into day-to-day operations. With features like chatbot-driven analysis, teams can instantly identify idle resources or receive tailored rightsizing suggestions. This streamlines the optimization process, enabling organizations to maximize their GCP investments while maintaining operational agility.

Enhance scalability with autoscaling

Autoscaling dynamically adjusts the number of virtual machines in your deployment based on workload demands, ensuring optimal resource usage while minimizing costs. For example, if your application requires two instances with 2 vCPUs and 8 GB of RAM each during peak hours but only one instance during off-peak times, autoscaling runs the second instance only during the peak, so you’re charged only for the capacity you use. This flexibility reduces idle capacity and prevents unnecessary expenses while maintaining application performance.

GCP’s Managed Instance Groups (MIGs) make this process seamless by enabling automated scaling decisions through predefined policies that monitor key metrics, such as CPU utilization, network throughput, or custom application metrics. For a web application experiencing periodic traffic spikes, autoscaling allows the system to increase the number of virtual machines during high demand while scaling down during quieter periods. This dynamic adjustment not only saves costs but also ensures users experience consistent performance.

Implementing autoscaling begins with configuring scaling policies in MIGs that respond to real-time workload data. A typical starting point involves setting CPU utilization thresholds, allowing the system to scale resources up or down based on predefined limits. Over time, as organizations gain more insights into their usage patterns, these policies can be refined to achieve even greater cost efficiency.
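A simplified model of target-utilization autoscaling shows how a CPU threshold translates into instance counts. This is an approximation, not the MIG autoscaler's actual algorithm (which also applies stabilization, initialization periods, and optional scale-in controls): it assumes load is spread evenly across instances and sizes the group so that average CPU lands at or below the target, clamped to the group's minimum and maximum. The function name is hypothetical.

```python
def recommended_group_size(current_size: int, avg_cpu_pct: int, target_cpu_pct: int,
                           min_size: int, max_size: int) -> int:
    """Simplified target-utilization sizing for a managed instance group.

    Assumes load is spread evenly, so total demand is current_size * avg_cpu_pct
    (in percent-of-one-VM units). Integer percentages avoid float rounding.
    """
    if target_cpu_pct <= 0:
        raise ValueError("target_cpu_pct must be positive")
    demand = current_size * avg_cpu_pct
    needed = -(-demand // target_cpu_pct)  # ceiling division
    return max(min_size, min(max_size, needed))


print(recommended_group_size(2, 30, 60, min_size=1, max_size=10))  # 1 (off-peak)
print(recommended_group_size(4, 90, 60, min_size=1, max_size=10))  # 6 (peak)
```

Lowering the target leaves more headroom per VM at the cost of running more instances; raising it does the opposite, which is why refining the threshold over time pays off.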

Maximize storage efficiency with tiering and policies

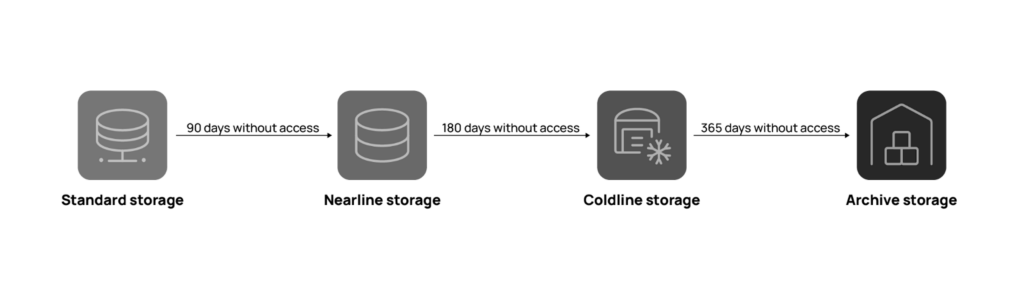

Storage is another significant contributor to GCP costs, and optimizing it begins with choosing the appropriate storage class. Google Cloud Storage offers multiple classes—Standard, Nearline, Coldline, and Archive—each suited to different use cases.

| Storage Class | Relative Cost (per GB/month) | Retrieval Fees | Minimum Storage Duration | Use Case |
| --- | --- | --- | --- | --- |
| Standard | Highest | None | None | Frequently accessed ("hot") data |
| Nearline | Moderate | Yes | 30 days | Accessed less than once a month |
| Coldline | Low | Yes | 90 days | Accessed less than once a quarter; long-term storage |
| Archive | Lowest | Yes | 365 days | Accessed less than once a year; compliance and archival data |

The Standard class, while ideal for frequently accessed data, is often overused because it is the default. By analyzing data usage patterns, teams can transition infrequently accessed data, like backups, to cheaper options such as Nearline or Coldline without affecting access latency, although retrieval fees and minimum storage durations apply. For example, moving rarely read backups to Coldline Storage can result in substantial cost savings while keeping the data immediately available for disaster recovery or compliance needs.

GCP also offers three location types for storing data, each providing a different level of redundancy and performance. A regional bucket stores data redundantly across multiple zones within a single region. However, if users are spread across various parts of the world, serving them data from a single region might not be ideal in terms of both redundancy and performance. This is where the dual-region and multi-region location types can be considered. The same choices apply to backups. Dual-region and multi-region storage carry a higher per-GB price than regional storage and shouldn’t be used without a good reason. Regional storage is the sensible default when there is no specific need for enhanced geographic redundancy or performance.

To further control storage costs, organizations should take advantage of Object Lifecycle Management policies. These policies automate the movement of data between storage classes based on predefined conditions, reducing the need for manual oversight. For instance, a policy might automatically shift objects from Standard to Nearline once they are 90 days old. Lifecycle conditions are based on attributes such as object age rather than last access time; for access-based tiering, Cloud Storage offers the Autoclass feature. This level of automation minimizes errors and keeps storage tiers aligned with usage patterns, driving efficiency while reducing costs.
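A tiering policy like this can be expressed as lifecycle configuration JSON. The sketch below builds a configuration in the shape of the bucket resource's `lifecycle` field, using `SetStorageClass` actions with `age` and `matchesStorageClass` conditions; the 90/180/365-day thresholds and the `tiering_policy` helper are illustrative assumptions, not recommendations. Because `age` counts days since object creation, each rule only needs to check the object's current class.

```python
import json


def tiering_policy(nearline_age: int = 90, coldline_age: int = 180,
                   archive_age: int = 365) -> dict:
    """Build lifecycle rules that step objects down storage classes by age.

    "age" counts days since object creation, not since last access.
    Thresholds are illustrative defaults.
    """
    if not 0 < nearline_age < coldline_age < archive_age:
        raise ValueError("ages must be positive and increasing")
    steps = [("STANDARD", "NEARLINE", nearline_age),
             ("NEARLINE", "COLDLINE", coldline_age),
             ("COLDLINE", "ARCHIVE", archive_age)]
    rules = [
        {"action": {"type": "SetStorageClass", "storageClass": target},
         "condition": {"age": age, "matchesStorageClass": [source]}}
        for source, target, age in steps
    ]
    return {"lifecycle": {"rule": rules}}


print(json.dumps(tiering_policy(), indent=2))
```

Saved to a file, a configuration like this could then be applied with a command along the lines of `gcloud storage buckets update gs://BUCKET_NAME --lifecycle-file=lifecycle.json`, where `BUCKET_NAME` is a placeholder; check the current gcloud reference before relying on it.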

By integrating advanced cost optimization platforms, teams can gain even deeper insights into their storage usage. These tools can identify storage inefficiencies, suggest appropriate lifecycle rules, and track cost savings over time. This combination of intelligent decision-making and automation allows organizations to stay on top of their storage spending.

Reduce BigQuery costs with smarter analysis and storage choices

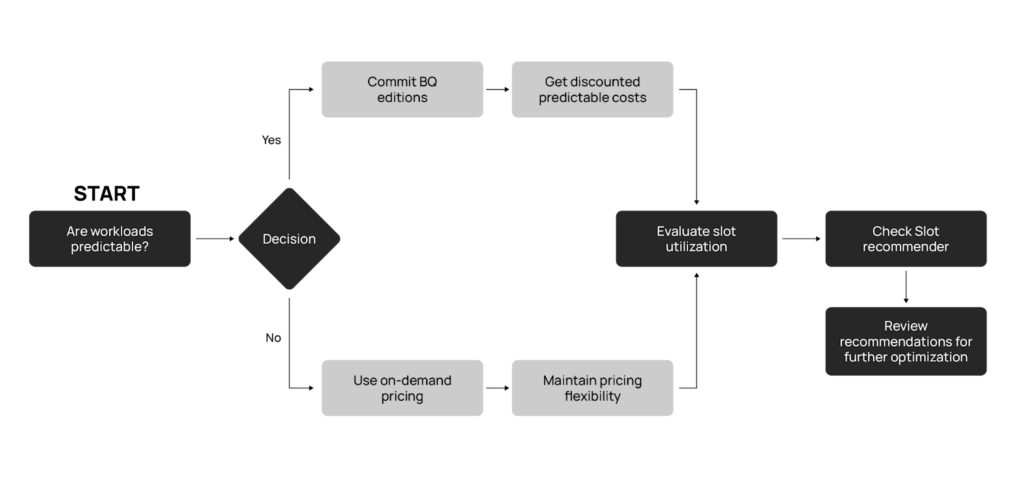

BigQuery is a powerful tool for processing and analyzing large datasets, but its costs can escalate quickly without proper management. Under on-demand pricing, query costs are determined by the amount of data processed, so choosing the right pricing model is crucial.

GCP offers two primary options: on-demand pricing, which charges based on bytes scanned, and BigQuery Editions, a capacity-based model that charges for compute capacity measured in slots, with optional longer-term slot commitments. For organizations with predictable workloads, committing to BigQuery Editions can lead to significant savings. GCP’s Slot Recommender can further guide decisions by analyzing usage patterns and recommending the optimal pricing model.
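The on-demand versus Editions decision comes down to comparing bytes scanned against slot capacity. This sketch assumes a flat per-TiB on-demand price and a flat per-slot-hour Editions price, both passed in as parameters; the example figures are illustrative placeholders, so substitute current list prices for your region and edition. It ignores details such as autoscaling increments and commitment discounts, and the function names are hypothetical.

```python
def monthly_on_demand_cost(tib_scanned: float, price_per_tib: float) -> float:
    """On-demand: billed by data scanned."""
    return tib_scanned * price_per_tib


def monthly_editions_cost(avg_slots: float, hours: float, price_per_slot_hour: float) -> float:
    """Editions (simplified): billed by slot capacity over time."""
    return avg_slots * hours * price_per_slot_hour


def cheaper_model(tib_scanned: float, avg_slots: float, price_per_tib: float,
                  price_per_slot_hour: float, hours: float = 730.0) -> tuple[str, float, float]:
    """Return the cheaper model name plus both monthly cost estimates."""
    on_demand = monthly_on_demand_cost(tib_scanned, price_per_tib)
    editions = monthly_editions_cost(avg_slots, hours, price_per_slot_hour)
    return ("editions" if editions < on_demand else "on-demand", on_demand, editions)


# Illustrative inputs only; check current BigQuery pricing before deciding.
print(cheaper_model(tib_scanned=400, avg_slots=100, price_per_tib=6.25, price_per_slot_hour=0.06))
print(cheaper_model(tib_scanned=1000, avg_slots=100, price_per_tib=6.25, price_per_slot_hour=0.06))
```

The crossover shifts with scan volume: with the same slot footprint, heavier scanning tips the balance toward capacity pricing.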

Storage costs in BigQuery can also be optimized by switching a dataset’s storage billing model from logical to physical, which bills for compressed bytes. Although physical storage has a higher per-unit price, compression reduces the billed volume, which often results in lower expenses. Organizations can query the INFORMATION_SCHEMA.TABLE_STORAGE view to compare logical and physical bytes and determine whether the switch would be beneficial for their specific datasets.
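Whether the physical billing model pays off depends on how well a dataset compresses relative to the price difference. A minimal sketch, assuming a dataset's logical and physical sizes in GiB are already known (for example, from INFORMATION_SCHEMA.TABLE_STORAGE); prices are illustrative parameters rather than quoted rates, and the active/long-term storage split is ignored. Physical billing also counts time-travel and fail-safe bytes, so the sketch adds those to the physical total.

```python
def physical_billing_is_cheaper(logical_gib: float, physical_gib: float,
                                logical_price: float, physical_price: float,
                                time_travel_and_failsafe_gib: float = 0.0) -> bool:
    """Compare monthly storage cost under the logical and physical billing models."""
    logical_cost = logical_gib * logical_price
    # Physical billing charges compressed bytes, including time-travel and fail-safe storage.
    physical_cost = (physical_gib + time_travel_and_failsafe_gib) * physical_price
    return physical_cost < logical_cost


# Illustrative prices: physical at twice the logical per-GiB rate.
print(physical_billing_is_cheaper(10240, 3072, logical_price=0.02, physical_price=0.04))  # True
print(physical_billing_is_cheaper(10240, 6144, logical_price=0.02, physical_price=0.04))  # False
```

Put differently, the switch pays off when the compression ratio exceeds the price ratio: here, 10 TiB compressing to 3 TiB beats the 2x price gap, while compressing only to 6 TiB does not.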

In addition to selecting the right pricing model, enforcing query guardrails is an effective way to control costs. Limiting ad hoc queries, capping query size with BigQuery’s maximum bytes billed setting, and monitoring query efficiency help keep data analysis cost-effective. Combined with GCP’s native tools and external platforms, these strategies create a robust framework for optimizing BigQuery spending.
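BigQuery can estimate a query's bytes processed with a dry run and can reject queries that would exceed a maximum bytes billed limit. The sketch below mirrors that guardrail logic locally; it assumes an estimated byte count is already available (for example, from a dry run), uses an illustrative on-demand price per TiB, and `check_query` is a hypothetical helper, not a BigQuery API.

```python
TIB = 2 ** 40  # on-demand pricing is quoted per TiB


def check_query(estimated_bytes: int, max_bytes_billed: int,
                price_per_tib: float = 6.25) -> tuple[bool, float]:
    """Return (allowed, estimated on-demand cost in USD) for a query estimate.

    price_per_tib is an illustrative default; check current pricing.
    """
    estimated_cost = estimated_bytes / TIB * price_per_tib
    return estimated_bytes <= max_bytes_billed, estimated_cost


allowed, cost = check_query(estimated_bytes=2 * TIB, max_bytes_billed=1 * TIB)
print(allowed, cost)  # False 12.5
```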

Leverage committed use, sustained use discounts, and preemptible VMs

GCP provides powerful cost-saving options for compute resources, including Sustained Use Discounts (SUDs), Committed Use Discounts (CUDs), and Preemptible VMs, each tailored to different usage patterns and workloads.

Sustained Use Discounts (SUDs) automatically reward consistent usage throughout the month without requiring any upfront commitment. For instance, if an application consistently uses a specific virtual machine (VM) configuration, the associated discount increases incrementally as usage time grows. This hands-free approach is ideal for workloads with steady demand, offering savings of up to 30% on eligible machine types such as N1. Not every machine series qualifies; E2 machine types, for example, do not receive SUDs.
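The incremental nature of sustained use discounts is easiest to see with numbers. This sketch assumes the N1 tier schedule from Google's published documentation (each successive quarter of the month billed at 100%, 80%, 60%, and 40% of the base rate); other machine series use different tiers, so treat the table as an input to verify rather than a constant. The function name is illustrative.

```python
# (share of the month, fraction of the base rate charged) for each increment.
# Assumed N1 schedule; other machine series use different tiers.
N1_SUD_TIERS = [(0.25, 1.00), (0.25, 0.80), (0.25, 0.60), (0.25, 0.40)]


def sustained_use_discount(fraction_of_month: float, tiers=N1_SUD_TIERS) -> float:
    """Effective discount versus the base rate for a VM running part of a month."""
    if not 0 < fraction_of_month <= 1:
        raise ValueError("fraction_of_month must be in (0, 1]")
    remaining, billed = fraction_of_month, 0.0
    for share, rate in tiers:
        used = min(share, remaining)
        billed += used * rate  # this slice of the month is billed at the tier rate
        remaining -= used
        if remaining <= 0:
            break
    return 1 - billed / fraction_of_month


for fraction in (0.25, 0.5, 1.0):
    print(f"{fraction:.0%} of month -> {sustained_use_discount(fraction):.0%} discount")
```

A VM running the full month pays 70% of the base price, which is the 30% maximum; one running half the month gets about 10%, and one running only a quarter of the month gets none.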

Committed Use Discounts (CUDs), on the other hand, require organizations to commit to a specific level of usage for one or three years. In exchange, predictable workloads can save up to 57% over on-demand pricing for most machine types. Advanced tools can analyze historical usage data to determine the most efficient commitment levels, helping organizations lock in savings while avoiding overcommitting to resources they may not fully utilize.
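Choosing a commitment level is a trade-off: committed capacity is paid for every hour whether used or not, while usage above the commitment is billed at on-demand rates. A minimal sketch, assuming an hourly vCPU usage history, a single on-demand rate, and a flat CUD discount (all illustrative inputs), brute-forces whole-vCPU commitment levels and picks the cheapest. The `best_commitment` helper is hypothetical.

```python
import math


def best_commitment(hourly_usage: list[float], on_demand_rate: float,
                    discount: float) -> tuple[int, float]:
    """Return (commitment in vCPUs, total cost) minimizing cost over the usage history."""
    if not hourly_usage:
        raise ValueError("hourly_usage must not be empty")
    committed_rate = on_demand_rate * (1 - discount)
    hours = len(hourly_usage)

    def total_cost(commit: int) -> float:
        committed = commit * committed_rate * hours  # paid every hour, used or not
        overflow = sum(max(0.0, u - commit) for u in hourly_usage) * on_demand_rate
        return committed + overflow

    candidates = range(0, math.ceil(max(hourly_usage)) + 1)
    best = min(candidates, key=total_cost)
    return best, total_cost(best)


# Illustrative month: a 10-vCPU baseline with 130 peak hours at 20 vCPUs.
usage = [10.0] * 600 + [20.0] * 130
print(best_commitment(usage, on_demand_rate=1.0, discount=0.57))
```

With a 57% discount, each committed vCPU costs 0.43 × 730 ≈ 314 on-demand hours' worth per month, so covering the 20-vCPU peak (only 130 hours long) would not pay off; the sketch settles on the 10-vCPU baseline.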

Spot VMs, the successor to Preemptible VMs, provide a unique way to cut costs by leveraging unused cloud capacity at steep discounts of up to 91% compared to regular pricing. These VMs can be reclaimed by GCP when capacity is needed elsewhere, making them best suited for fault-tolerant workloads like batch processing, data analysis, or media transcoding. Unlike legacy Preemptible VMs, which run for at most 24 hours, Spot VMs have no maximum runtime. For instance, a video transcoding workload with no strict deadline could run entirely on Spot VMs, significantly reducing costs as long as the job can resume after an interruption.
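Fault tolerance on Spot VMs usually means making work resumable. The sketch below shows one common pattern, checkpointing completed items so a restarted job skips them; the `Preempted` exception and the video file names are hypothetical stand-ins used to simulate an interruption. On a real Spot VM, checkpoints should go to durable storage such as a Cloud Storage bucket rather than the local temporary directory used here for the demo.

```python
import json
import os
import tempfile


def process_batch(items, handle, checkpoint_path):
    """Process items in order, skipping any recorded in the checkpoint file."""
    done = set()
    if os.path.exists(checkpoint_path):
        with open(checkpoint_path) as f:
            done = set(json.load(f))
    for item in items:
        if item in done:
            continue  # finished before the interruption
        handle(item)
        done.add(item)
        # Write to a temp file, then atomically replace, so an interruption
        # mid-write cannot leave a corrupt checkpoint behind.
        tmp_path = checkpoint_path + ".tmp"
        with open(tmp_path, "w") as f:
            json.dump(sorted(done), f)
        os.replace(tmp_path, checkpoint_path)
    return done


class Preempted(Exception):
    """Hypothetical stand-in for the VM being reclaimed mid-job."""


with tempfile.TemporaryDirectory() as workdir:
    checkpoint = os.path.join(workdir, "checkpoint.json")
    videos = ["a.mp4", "b.mp4", "c.mp4", "d.mp4"]
    transcoded = []

    def first_attempt(item):
        if item == "c.mp4":
            raise Preempted()  # simulate reclamation partway through
        transcoded.append(item)

    try:
        process_batch(videos, first_attempt, checkpoint)
    except Preempted:
        pass
    process_batch(videos, transcoded.append, checkpoint)  # restarted job resumes

print(transcoded)  # ['a.mp4', 'b.mp4', 'c.mp4', 'd.mp4']
```

Because an interruption can land between finishing an item and writing the checkpoint, this pattern gives at-least-once processing, so each item's handler should be safe to repeat.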

Each of these discounts can be strategically combined to optimize compute expenses across different workload types. Advanced platforms further enhance this process by integrating AI-driven insights, enabling organizations to determine the right mix of discounts and optimize costs dynamically.

Control logging costs with retention and exclusion policies

Cloud logging is essential for monitoring and debugging, but it can become a hidden cost driver if not carefully managed. GCP allows users to configure exclusion filters that prevent specific log entries from being ingested, stored, or routed unnecessarily. For instance, excluding debug-level logs for non-critical applications can significantly reduce storage expenses without compromising operational insight.

Another effective strategy is adjusting log retention periods. GCP provides 30 days of free log storage, but extended retention periods incur additional costs. Organizations can configure retention policies to automatically purge older logs that are no longer relevant, ensuring storage usage remains lean and focused on essential data.

| Factor | Impact on Cost | Optimization Tip |
| --- | --- | --- |
| Log Volume | Higher volume = higher costs | Use exclusion filters to eliminate unnecessary logs. |
| Retention Period | Longer retention = higher storage costs | Adjust retention to compliance requirements only. |
| Destination Type | More sinks = increased cost | Avoid unnecessary routing to multiple log sinks. |
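Rough arithmetic shows why exclusion filters and retention settings matter. This is a simplified model with illustrative defaults based on published Cloud Logging pricing (ingestion around $0.50 per GiB after a 50 GiB monthly free allotment per project, plus a small per-GiB monthly charge for retention beyond the default 30 days); verify current rates. It approximates steady-state retention cost by multiplying monthly volume by the extra months retained, and the severity volumes are hypothetical.

```python
def monthly_logging_cost(gib_by_severity: dict[str, float], excluded: set[str],
                         price_per_gib: float = 0.50, free_gib: float = 50.0,
                         extra_retention_months: int = 0,
                         retention_price_per_gib_month: float = 0.01) -> float:
    """Estimate monthly Cloud Logging cost for one project (simplified model)."""
    # Excluded entries are never ingested, so they incur no ingestion charge.
    ingested = sum(gib for sev, gib in gib_by_severity.items() if sev not in excluded)
    ingestion = max(0.0, ingested - free_gib) * price_per_gib
    # Steady state: each month's volume is stored for the extra months beyond 30 days.
    retention = ingested * extra_retention_months * retention_price_per_gib_month
    return ingestion + retention


volumes = {"DEBUG": 400.0, "INFO": 150.0, "ERROR": 10.0}  # hypothetical monthly GiB
print(monthly_logging_cost(volumes, excluded=set()))      # 255.0
print(monthly_logging_cost(volumes, excluded={"DEBUG"}))  # 55.0
```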

Combining these measures with automated monitoring tools helps organizations maintain transparency over their logging costs. These tools provide granular insights into log usage, enabling teams to refine their exclusion filters and retention settings as needs evolve.

Cultivate a Cost-Conscious Culture

Creating a cost-conscious culture ensures that GCP cost optimization becomes a shared responsibility across the organization rather than solely the domain of IT or finance. This cultural shift starts with establishing governance boards that provide centralized oversight of cloud resources. These boards, often composed of cross-functional teams, enforce best practices, monitor spending patterns across departments, and hold teams accountable for their cloud usage. By aligning governance with strategic business goals, organizations can drive a coordinated effort to optimize costs while maintaining operational efficiency.

Transparency is another cornerstone of a cost-conscious culture. Sharing cost data and actionable insights with all stakeholders fosters collaboration and reduces inefficiencies. For instance, when departments have visibility into their spending patterns, they can identify opportunities for optimization, reduce duplication of resources, and align their budgets with organizational priorities. Encouraging open discussions about cloud usage not only empowers teams to take ownership of their cloud expenses but also reinforces the importance of financial discipline throughout the organization. This collective accountability ensures that GCP cost optimization is not just a one-time effort but an ongoing practice embedded in the organization’s operational DNA.

Addressing Common Challenges in GCP Cost Optimization

While GCP provides robust tools and services for cloud management, navigating its cost optimization landscape comes with unique challenges. Understanding and addressing these obstacles is key to maximizing value from your GCP investment.

Complex Pricing Models

GCP offers a range of pricing structures for its diverse suite of services, from compute and storage to data analytics and machine learning. However, the variety of options, combined with the dynamic nature of cloud workloads, can make it difficult for organizations to predict and control expenses. For instance, factors such as instance type, data transfer fees, and regional variations in pricing often contribute to unexpected costs.

To address this, organizations must invest in advanced cost management tools that consolidate and analyze billing data across services. These tools provide unified dashboards that break down expenses by service, region, and resource type, offering granular insights into cost drivers. By integrating these insights into financial planning, teams can identify cost anomalies, such as underutilized resources or unexpected spikes in spending, and take proactive measures to align usage with budget goals. Additionally, leveraging GCP’s built-in recommendations for rightsizing and discount programs like committed use discounts (CUDs) further simplifies cost management by guiding teams toward smarter resource allocation.
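A basic version of the spend-anomaly detection mentioned above compares each day's spend with a trailing baseline. This sketch uses a simple z-score over a 7-day window, an illustrative choice rather than how any particular tool works; it flags only upward spikes, and the window, threshold, and daily figures are assumptions you would tune or replace.

```python
from statistics import mean, stdev


def spend_anomalies(daily_spend: list[float], window: int = 7, z: float = 3.0) -> list[int]:
    """Return indices of days whose spend spikes above the trailing-window baseline."""
    if window < 2:
        raise ValueError("window must be at least 2 to compute a standard deviation")
    flagged = []
    for i in range(window, len(daily_spend)):
        history = daily_spend[i - window:i]
        mu, sigma = mean(history), stdev(history)
        # With a perfectly flat history (sigma == 0), any increase counts as a spike.
        threshold = mu + z * sigma if sigma > 0 else mu
        if daily_spend[i] > threshold:
            flagged.append(i)
    return flagged


spend = [100, 102, 98, 101, 99, 100, 103, 250, 101, 100]  # hypothetical daily USD
print(spend_anomalies(spend))  # [7]
```

Note that the spike itself inflates the baseline for the following week, which is why the days after it are not flagged; production tools typically use more robust baselines.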

Multi-Cloud Management

Organizations operating across multiple cloud providers face the added complexity of managing disparate billing systems, pricing models, and reporting formats. This lack of standardization can obscure the true cost of workloads, making it difficult to allocate expenses accurately or identify areas for optimization. For example, when a single application relies on services from multiple clouds, tracking and optimizing the associated costs often requires significant manual effort.

The FinOps Foundation’s FOCUS (FinOps Open Cost and Usage Specification) offers a valuable framework for standardizing billing data across providers, enabling organizations to track multi-cloud expenses in a cohesive manner. Coupled with centralized reporting tools, FOCUS helps streamline financial oversight by consolidating cost data into a single, unified view. This approach not only improves visibility but also simplifies forecasting and resource planning.

Beyond FOCUS, platforms like CloudBolt play a pivotal role by integrating multi-cloud data into actionable insights. These tools enable teams to automate tasks like anomaly detection, cost allocation, and budget tracking, reducing manual intervention and fostering greater financial discipline. By adopting such solutions, organizations can ensure that their multi-cloud strategy remains both flexible and cost-efficient, empowering them to focus on innovation without sacrificing financial control.

Final thoughts

Effective GCP cost optimization is vital for organizations leveraging Google Cloud. While GCP’s native tools offer a strong foundation, adopting advanced strategies and automation platforms can drive unparalleled efficiency.

Explore how CloudBolt’s Augmented FinOps capabilities empower teams to close the insight-to-action gap and achieve peak performance on GCP.

Schedule a Demo to discover how to optimize your GCP environment and maximize your cloud investments.

FAQs for GCP Cost Optimization

How can I estimate the cost savings potential of GCP optimization?

Estimating savings begins with understanding your current cloud usage patterns. Use GCP’s native cost management tools, like Billing Reports and the Recommendation Hub, to identify underutilized resources and assess potential savings from actions like rightsizing or migrating to cheaper storage tiers. Third-party FinOps platforms can provide more granular insights by analyzing historical data and generating detailed forecasts that highlight cost-saving opportunities across your entire GCP environment.

What are the most common mistakes that drive up GCP costs?

Common mistakes include overprovisioning compute resources, using default storage classes for all data (e.g., Standard tier), and failing to implement retention policies for logs or data. Other issues arise from neglecting to power off idle instances, not leveraging CUDs for predictable workloads, and lacking cross-team visibility into resource consumption. Addressing these through automation, governance policies, and periodic audits can prevent unnecessary expenses.

Is multi-cloud management compatible with GCP cost optimization?

Yes, but it requires careful coordination. GCP’s tools provide visibility into GCP-specific usage, while multi-cloud cost management platforms aggregate billing data across providers. These platforms use standards like FOCUS (FinOps Open Cost and Usage Specification) to normalize data and provide a unified view of spending, enabling cost optimization even in multi-cloud setups.

How does automation support GCP cost optimization?

Automation plays a pivotal role in simplifying GCP cost management. It minimizes manual intervention by handling repetitive and time-consuming tasks like rightsizing virtual machines, scheduling resources, and managing storage tiers. For instance, automated power-scheduling can shut down idle dev/test environments during off-hours, cutting unnecessary expenses without disrupting operations.

Moreover, automation tools leverage real-time insights and AI-driven recommendations to enhance decision-making. By continuously analyzing usage patterns, these tools can detect anomalies, suggest resource adjustments, and execute optimizations dynamically. This ensures that cost-saving opportunities are not missed, even in complex, multi-service environments.

How do GCP’s cost-saving features compare to external tools?

GCP offers robust built-in cost optimization features, including resource recommendations, sustained use discounts, and lifecycle policies for storage management. However, external platforms add value by offering enhanced capabilities, such as cross-cloud visibility, advanced automation, and more granular analytics. These tools empower organizations to implement proactive, AI-driven strategies that maximize savings while improving operational efficiency.
