Google Cloud Platform (GCP) is a compelling offering for enterprises that require a scalable, reliable, cost-effective, and performance-oriented hosting solution for their workloads and applications. Backed by a robust infrastructure, GCP provides a range of services for compute, storage, network, big data, analytics, and artificial intelligence applications to meet customers’ varying computing requirements.

Like any other cloud provider, GCP has a pay-as-you-go pricing model that allows customers to pay only for the resources they consume. However, these dynamic costs can scale rapidly if they are not strategically monitored and optimized. GCP provides several built-in features, like Cloud Billing reports and Cloud Quotas, to help manage costs. However, these utilities rely on historical trends and patterns and require manual intervention to act on their findings.

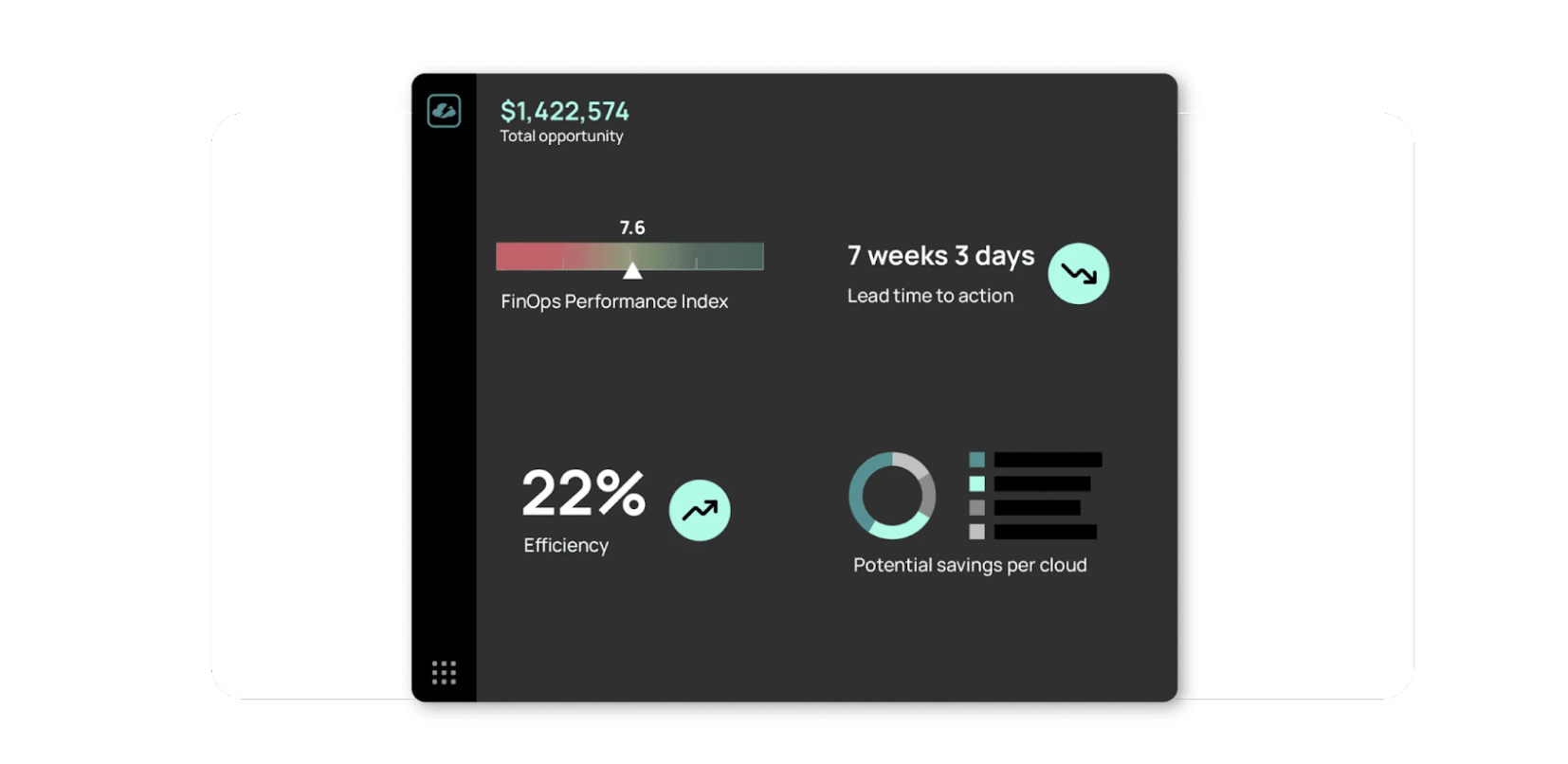

Augmented FinOps is a more agile way of optimizing GCP spending, allowing enterprises to proactively apply intelligent automation and orchestration backed by real-time AI/ML-generated insights. This approach allows enterprises to simplify processes and accelerate outcomes without stifling innovation. End-users are also empowered to move more quickly and shorten the time from insight to action.

This article delves into the best practices for GCP cost optimization and highlights how augmented FinOps can complement native GCP solutions to help customers manage their GCP costs.

GCP cost optimization best practices

| Best Practices | Description |
| --- | --- |
| Manage compute costs | Reduce overprovisioned resources by identifying and cleaning up idle GCE instances and attached disks. Leverage custom machine types and rightsize VMs to match resource configuration with application requirements. |
| Optimize storage costs | Migrate cloud storage data to cheaper storage tiers based on usage and access patterns. Configure object lifecycle policies to automate movement between storage classes based on predefined criteria. |
| Reduce BigQuery costs | Carefully evaluate and select from the available pricing models based on analysis needs, and leverage committed slots for workloads with predictable requirements. |
| Leverage committed use discounts | Leverage the usage-based discounts offered by GCP, like long-term committed use discounts (CUDs) and automatic sustained use discounts (SUDs), to optimize compute costs and bring predictability to cloud budgets and forecasting. |
| Control logging costs | Configure exclusion filters and retention settings to prevent log entries from being routed to the log sink’s destination and stored there. |
| Optimize database spend | Identify and rightsize overallocated instances to match workload requirements with data configuration. Purchase CUDs for workloads with predictable usage to achieve significant savings. |
| Leverage external tools | Augment cloud-native solutions with CloudBolt’s advanced FinOps offering that provides unrivaled visibility and self-service capabilities. |

Manage compute costs

Compute services like Compute Engine (GCE) and Google Kubernetes Engine (GKE) are among the biggest contributors to cloud bills on GCP. Enterprises need to ensure that they pay only for resources that are actually consumed and avoid both underutilized and overprovisioned infrastructure.

These optimization objectives can be achieved in the following ways:

- Clean up idle resources: Deallocating resources that are not being utilized is one of the easiest ways to reduce GCP cloud spend. Organizations can generate immediate savings and reduce technical debt by identifying and removing consistently underutilized instances (based on CPU and memory metrics). The GCP Recommender provides customers with a list of potentially idle VMs based on a historical metrics analysis.

- Rightsize instances: Rightsizing allows organizations to match resource configuration to workload requirements and to optimize the hourly rate incurred for compute instances. GCP also allows customers to specify custom machine types, selecting the desired processing power and memory without being constrained by the predefined machine types the cloud provider offers.

- Power-schedule resources: Users can specify custom on/off schedules for their instances and reduce costs by only paying for resources when required. For example, dev/test instances might only be run during business hours on weekdays and switched off during nights and weekends, generating direct savings by reducing the GCE usage associated with these instances.
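The power-scheduling arithmetic above can be sketched in a few lines. This is a minimal illustration, not a billing calculator: the function names and the example schedule (five days a week, twelve hours a day) are assumptions chosen for the example.

```python
HOURS_PER_WEEK = 7 * 24  # 168 hours in an always-on week


def scheduled_hours_per_week(days_on: int, hours_per_day: float) -> float:
    """Hours per week the instance runs under the schedule."""
    return days_on * hours_per_day


def schedule_savings_fraction(days_on: int, hours_per_day: float) -> float:
    """Fraction of always-on runtime that the schedule avoids."""
    on_hours = scheduled_hours_per_week(days_on, hours_per_day)
    return 1 - on_hours / HOURS_PER_WEEK


# Hypothetical dev/test schedule: weekdays only, 12 hours per day.
fraction = schedule_savings_fraction(days_on=5, hours_per_day=12)
print(f"Runtime avoided: {fraction:.1%}")
```

The result is a reduction in instance runtime rather than in the total bill: persistent disks and reserved static IP addresses attached to a stopped VM continue to be charged.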

Organizations can accelerate the process from insight to realization by leveraging CloudBolt, which offers an interactive chatbot that uses natural language to deliver real-time analysis, actionable insights, and strategic recommendations to optimize spending.

An example prompt could be: “Which GCE instances are good candidates for termination due to low utilization?” The platform also allows for tailored rightsizing analysis by setting specific CPU and memory utilization parameters.
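To make the idea of utilization parameters concrete, here is a minimal sketch of threshold-based classification. The `InstanceUsage` type, the `classify` function, and every threshold value are hypothetical; they do not reflect how GCP Recommender or CloudBolt compute their recommendations.

```python
from dataclasses import dataclass


@dataclass
class InstanceUsage:
    name: str
    avg_cpu: float  # average CPU utilization as a fraction, 0.0 to 1.0
    avg_mem: float  # average memory utilization as a fraction, 0.0 to 1.0


def classify(usage: InstanceUsage,
             idle_cpu: float = 0.05, idle_mem: float = 0.10,
             low_cpu: float = 0.40, low_mem: float = 0.40) -> str:
    """Label an instance using illustrative CPU and memory thresholds."""
    if usage.avg_cpu < idle_cpu and usage.avg_mem < idle_mem:
        return "terminate-candidate"
    if usage.avg_cpu < low_cpu and usage.avg_mem < low_mem:
        return "rightsize-candidate"
    return "keep"


fleet = [
    InstanceUsage("batch-old", avg_cpu=0.01, avg_mem=0.04),
    InstanceUsage("api-staging", avg_cpu=0.20, avg_mem=0.30),
    InstanceUsage("api-prod", avg_cpu=0.65, avg_mem=0.70),
]
for instance in fleet:
    print(instance.name, classify(instance))
```

Requiring both CPU and memory to fall under a threshold is a deliberately conservative choice: an instance with low CPU but high memory use is left alone.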

Optimize storage costs

Google Cloud Storage provides customers with a robust and scalable service capable of handling ever-increasing quantities of data and billions of objects. Storage costs are generally driven by two major factors: the quantity of data and the rate per unit of quantity. There are a couple of ways that organizations can save on their storage costs.

Selecting the right storage class

GCP offers different storage classes (standard, nearline, coldline, and archive) for different use cases, with varying per-unit storage costs. Since the standard tier is the default, engineering teams frequently provision the most expensive tier for all buckets, leading to increased costs. By understanding the usage and access patterns of objects, teams can make an informed decision on moving data to cheaper tiers without affecting performance or retrieval latency.

For instance, migrating backup data from the standard to the nearline or coldline tiers lowers storage costs significantly without affecting performance due to the infrequent need to access backup data.

| Storage class | Minimum storage duration | Retrieval fees |
| --- | --- | --- |
| Standard storage | None | None |
| Nearline storage | 30 days | Yes |
| Coldline storage | 90 days | Yes |
| Archive storage | 365 days | Yes |
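Because colder classes trade lower storage rates for retrieval fees, the cheapest class depends on how much data is read back. The sketch below compares monthly cost across classes. All prices in `PLACEHOLDER_PRICES` are illustrative placeholders rather than current GCP list prices, and the model ignores minimum storage durations, operation charges, and network egress.

```python
# (storage $/GB-month, retrieval $/GB): placeholder values for illustration only.
PLACEHOLDER_PRICES = {
    "STANDARD": (0.020, 0.00),
    "NEARLINE": (0.010, 0.01),
    "COLDLINE": (0.004, 0.02),
    "ARCHIVE": (0.0012, 0.05),
}


def monthly_cost(storage_class: str, gb_stored: float, gb_read: float,
                 prices: dict = PLACEHOLDER_PRICES) -> float:
    """Storage plus retrieval cost for one month in the given class."""
    storage_price, retrieval_price = prices[storage_class]
    return gb_stored * storage_price + gb_read * retrieval_price


def cheapest_class(gb_stored: float, gb_read: float,
                   prices: dict = PLACEHOLDER_PRICES) -> str:
    """Class with the lowest modeled monthly cost."""
    return min(prices, key=lambda c: monthly_cost(c, gb_stored, gb_read, prices))


# Rarely read backups favor a cold class; heavily read data favors standard.
print(cheapest_class(gb_stored=1000, gb_read=10))
print(cheapest_class(gb_stored=1000, gb_read=2000))
```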

Configuring object lifecycle policies

GCP also allows customers to configure custom lifecycle policies based on a predefined set of conditions. This allows for the automatic movement of data to a cheaper storage tier if the defined criteria are met.

For example, a policy that moves objects from standard to nearline once they are more than 90 days old would lower storage costs and reduce the need for manual intervention by engineers.
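A lifecycle policy of this kind is expressed as a JSON document of rules, each pairing an action with a condition. The sketch below builds one such rule in Python and prints the JSON. The helper name `set_storage_class_rule` is hypothetical, and the output should be checked against the Cloud Storage lifecycle documentation before being applied to a bucket.

```python
import json


def set_storage_class_rule(target_class: str, age_days: int,
                           from_classes: list[str]) -> dict:
    """One lifecycle rule: change storage class once an object reaches a given age."""
    return {
        "action": {"type": "SetStorageClass", "storageClass": target_class},
        "condition": {"age": age_days, "matchesStorageClass": from_classes},
    }


# Move STANDARD objects to NEARLINE once they are 90 days old.
lifecycle_config = {
    "rule": [set_storage_class_rule("NEARLINE", 90, ["STANDARD"])]
}
print(json.dumps(lifecycle_config, indent=2))
```

The `age` condition counts days since the object was created, so a rule like this is driven by object age rather than by when the object was last read.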

Reduce BigQuery costs

BigQuery is a GCP enterprise data warehouse solution that allows customers to process large quantities of data and perform real-time analytics for blazing-fast insights. The BigQuery cost for any project can primarily be segregated into two components:

- Analysis costs: Costs related to query processing

- Storage costs: Costs related to active and long-term data storage

To optimize BigQuery costs, both cost components need to be understood and controlled.

Optimizing analysis costs

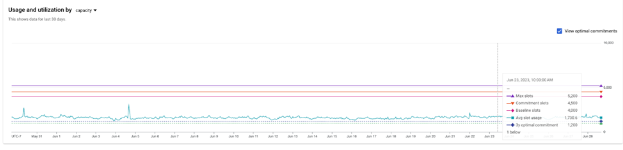

BigQuery has two different pricing models for query processing: on-demand (pricing based on the amount of data scanned) and BQ editions (where customers can commit to a fixed number of slots and leverage BQ autoscaling to cater to fluctuating demand). Organizations can analyze their slot usage patterns over time to make informed decisions regarding the best pricing models for their workloads.

GCP also offers a slot recommender utility that can guide customers on the decision to move from an on-demand pricing model to BQ editions or vice versa. Enterprises can further control costs by establishing policies and guardrails around query processing to limit query costs.
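The choice between the two pricing models comes down to a break-even comparison between data scanned and slot capacity. Here is a minimal sketch of that comparison. The prices passed in are placeholders rather than published rates, and the model assumes a flat slot count for the whole month, which ignores autoscaling.

```python
def on_demand_cost(tib_scanned: float, price_per_tib: float) -> float:
    """Monthly cost when paying per TiB scanned."""
    return tib_scanned * price_per_tib


def slot_cost(slots: int, hours: float, price_per_slot_hour: float) -> float:
    """Monthly cost for a fixed number of slots held for the given hours."""
    return slots * hours * price_per_slot_hour


def break_even_tib(slots: int, hours: float, price_per_slot_hour: float,
                   price_per_tib: float) -> float:
    """Monthly TiB scanned above which the slot-based model is cheaper."""
    return slot_cost(slots, hours, price_per_slot_hour) / price_per_tib


# Placeholder prices and a hypothetical 100-slot reservation for a 730-hour month.
threshold = break_even_tib(slots=100, hours=730,
                           price_per_slot_hour=0.04, price_per_tib=6.25)
print(f"Slots become cheaper above roughly {threshold:.0f} TiB scanned per month")
```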

Optimizing storage costs

Organizations can save on their BQ storage costs by transitioning from a logical model to a physical model of storage. The physical storage model offers superior compression capabilities, which allow customers to reduce costs even at a higher per-unit cost (physical storage is twice as expensive as logical storage). GCP provides simple queries to conduct an audit for customers to analyze and evaluate the cost of storage in the two pricing models and make the appropriate decision to generate maximum savings.
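The trade-off reduces to a simple rule: if physical storage costs twice as much per unit, it is cheaper only when the data compresses by more than a factor of two. The sketch below checks this for a single dataset. The function names and the price are assumptions, and the model is simplified: it does not account for additional bytes, such as time travel storage, that may be billed under the physical model.

```python
def storage_costs(logical_gib: float, physical_gib: float,
                  logical_price: float,
                  physical_multiplier: float = 2.0) -> tuple[float, float]:
    """Return (logical model cost, physical model cost) for one dataset."""
    logical_cost = logical_gib * logical_price
    physical_cost = physical_gib * logical_price * physical_multiplier
    return logical_cost, physical_cost


def prefer_physical(logical_gib: float, physical_gib: float,
                    logical_price: float = 0.02,
                    physical_multiplier: float = 2.0) -> bool:
    """True when the physical model is cheaper under this simplified comparison."""
    logical_cost, physical_cost = storage_costs(
        logical_gib, physical_gib, logical_price, physical_multiplier)
    return physical_cost < logical_cost


# 5:1 compression favors physical billing; under 2:1 does not.
print(prefer_physical(logical_gib=1000, physical_gib=200))
print(prefer_physical(logical_gib=1000, physical_gib=600))
```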

Leverage committed use discounts

GCP offers several cost-saving options to customers, which provide discounts in exchange for steady usage of GCP resources.

Sustained use discounts (SUDs)

These are automatic discounts provided by GCP if customers use specific virtual machines for an extended period each month. These discounts are applicable to various compute resources like vCPU and memory. They are applied automatically on the incremental usage above a threshold limit, which varies based on instance type, region, and other factors.
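The incremental nature of SUDs can be illustrated with a tiered calculation: each successive block of the month is billed at a lower fraction of the base rate. The tier values in `EXAMPLE_TIERS` are assumptions modeled on the schedule commonly cited for N1 machine types. Actual tiers vary by machine series, so treat this as a sketch of the mechanism rather than a pricing reference.

```python
# (upper bound of usage as a fraction of the month, fraction of base rate billed)
# Assumed example schedule; verify against current GCP documentation.
EXAMPLE_TIERS = [(0.25, 1.0), (0.50, 0.8), (0.75, 0.6), (1.00, 0.4)]


def effective_rate_multiplier(usage_fraction: float,
                              tiers: list = EXAMPLE_TIERS) -> float:
    """Average fraction of the base rate paid for the given share of the month."""
    if not 0 <= usage_fraction <= 1:
        raise ValueError("usage_fraction must be between 0 and 1")
    if usage_fraction == 0:
        return 1.0
    billed = 0.0
    lower = 0.0
    for upper, rate in tiers:
        # Portion of usage that falls inside this tier, if any.
        span = max(0.0, min(usage_fraction, upper) - lower)
        billed += span * rate
        lower = upper
    return billed / usage_fraction


for share in (0.25, 0.5, 1.0):
    discount = 1 - effective_rate_multiplier(share)
    print(f"{share:.0%} of month -> {discount:.0%} effective discount")
```

Under these assumed tiers, an instance running the full month pays 70% of the base rate on average, while one running a quarter of the month or less receives no discount.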

Committed use discounts (CUDs)

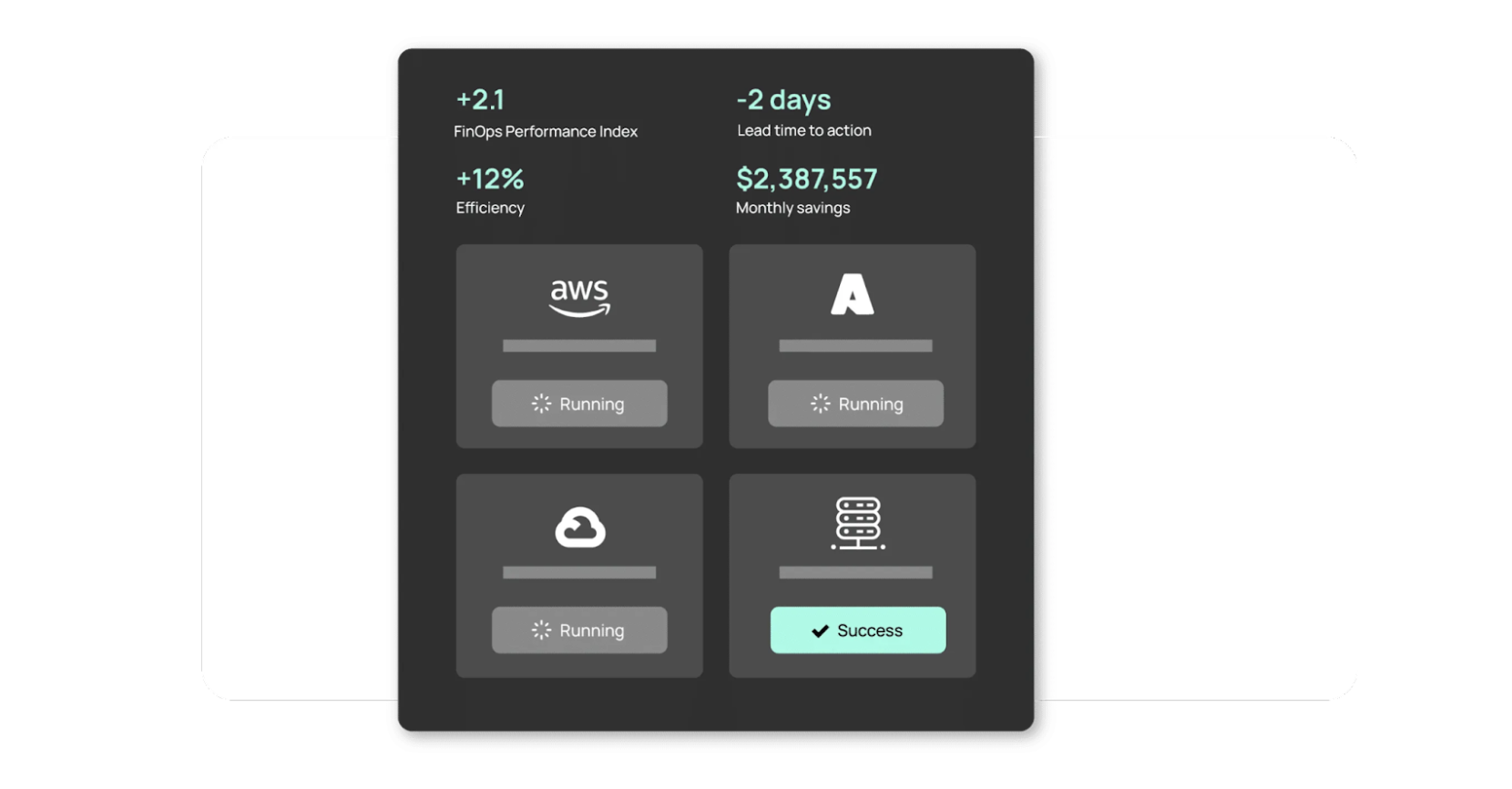

Through CUDs, organizations can get discounted pricing by committing to fixed usage of a specific instance type for a one-year or three-year duration. CUDs are easy to configure and don’t require any upfront payments.

CloudBolt’s advanced modeling tools allow for comprehensive commitment analysis, accommodating various terms (one year or three years) and resource types. This approach ensures that commitment purchases are well informed, strategic, and aligned with GCP environment needs.
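Since a commitment is billed whether or not it is used, a CUD only pays off when utilization of the committed amount stays above a break-even point. The sketch below models that trade-off. The discount rate, unit rate, and function names are hypothetical, and usage above the commitment is assumed to be billed at the on-demand rate.

```python
def cud_monthly_cost(committed_units: float, used_units: float,
                     on_demand_rate: float, discount: float) -> float:
    """Commitment fee (paid regardless of usage) plus on-demand overage."""
    commitment_fee = committed_units * on_demand_rate * (1 - discount)
    overage = max(0.0, used_units - committed_units) * on_demand_rate
    return commitment_fee + overage


def on_demand_monthly_cost(used_units: float, on_demand_rate: float) -> float:
    """Cost of the same usage with no commitment."""
    return used_units * on_demand_rate


def break_even_utilization(discount: float) -> float:
    """Share of the commitment that must be used for the CUD to pay off."""
    return 1 - discount


# Hypothetical 37% discount on a 100-unit commitment at a rate of 1.0 per unit.
for used in (50, 80):
    with_cud = cud_monthly_cost(100, used, on_demand_rate=1.0, discount=0.37)
    without = on_demand_monthly_cost(used, on_demand_rate=1.0)
    print(used, round(with_cud, 2), round(without, 2))
```

With these example numbers, using only 50 of the 100 committed units costs more than staying on demand, while using 80 units is cheaper with the commitment.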

Control logging costs

Cloud logging costs are relatively difficult to track and can scale up quickly depending on the volume of logged data and the configured retention period of that data. Here are some of the ways to control logging costs.

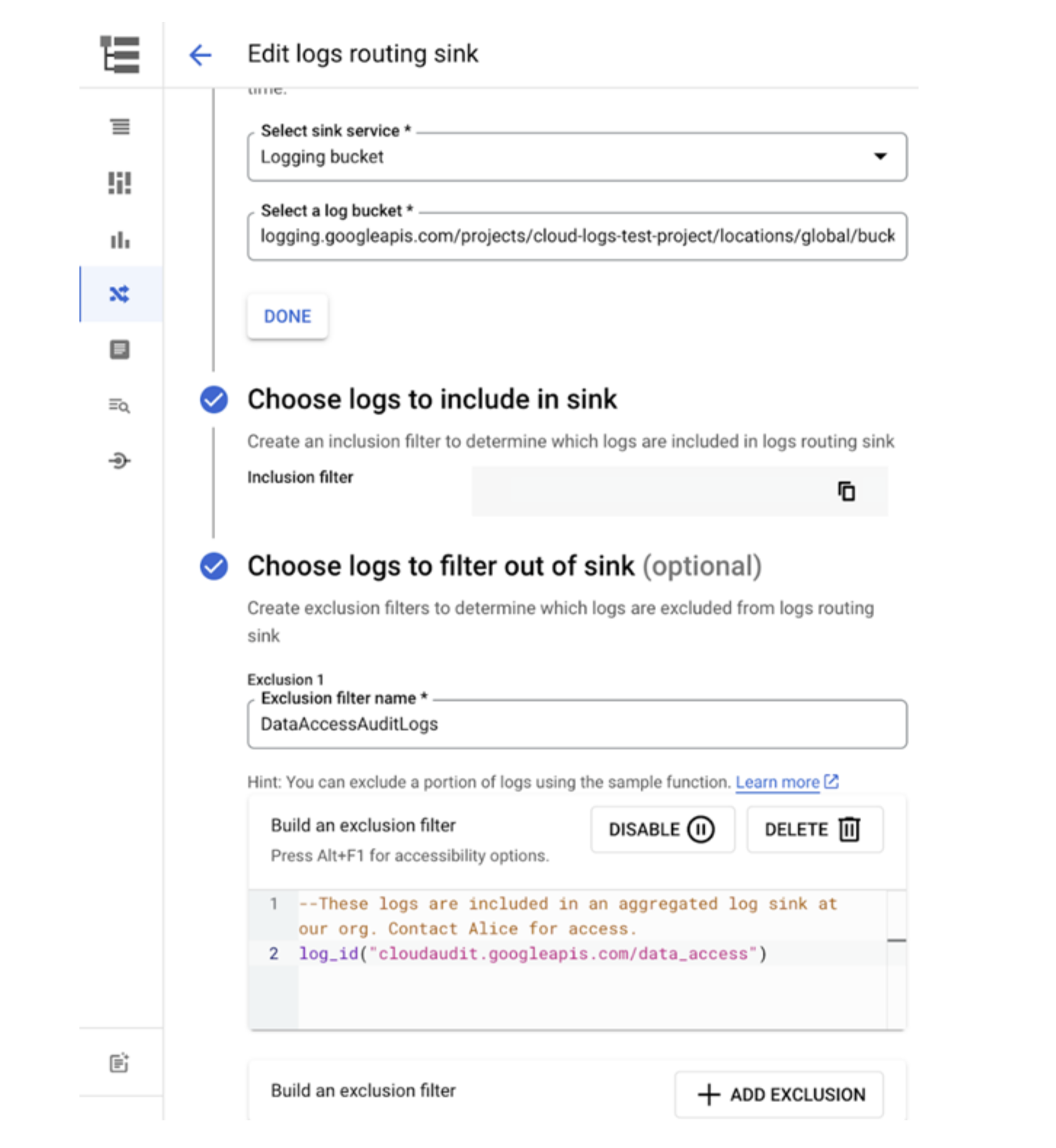

Configuring exclusion filters

Exclusion filters allow users to exclude certain logs from being ingested into Cloud Logging. Users can set exclusion filters while creating a new log sink or modify an existing log sink to exclude certain logs based on specified conditions.

For instance, filters can be set to exclude logs from certain services or for certain projects/resources based on labels or resource names.

Adjusting the retention period

GCP doesn’t charge projects for storing log data for up to 30 days, but as teams start storing these logs for more than 30 days, the storage costs start to increase. To control these costs, it is important to configure log retention policies to purge logs that are not required for more than a specified duration.
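The effect of the retention period on cost can be estimated with a steady-state model: with constant daily ingestion, only the days retained beyond the free window contribute billable volume. The function names, the price, and the ingestion figure below are assumptions for illustration only.

```python
def billable_retained_gib(daily_ingest_gib: float, retention_days: int,
                          free_days: int = 30) -> float:
    """Steady-state log volume held beyond the free retention window."""
    return daily_ingest_gib * max(0, retention_days - free_days)


def monthly_retention_cost(daily_ingest_gib: float, retention_days: int,
                           price_per_gib_month: float,
                           free_days: int = 30) -> float:
    """Approximate monthly charge for retaining logs past the free window."""
    return billable_retained_gib(
        daily_ingest_gib, retention_days, free_days) * price_per_gib_month


# Hypothetical: 50 GiB ingested per day, placeholder price of 0.01 per GiB-month.
for days in (30, 90, 365):
    print(days, round(monthly_retention_cost(50, days, 0.01), 2))
```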

Optimize database spend

Cloud SQL makes it easy for engineers to create new database instances for production workloads or test prototypes and MVPs. Since customers are not billed based on query processing but rather the runtime of instances in Cloud SQL, idle or underutilized instances can significantly contribute to increased Cloud SQL spending.

Here are some best practices to optimize Cloud SQL instances:

- Rightsize or remove underutilized instances: Rightsizing or removing idle Cloud SQL instances can immediately decrease cloud costs. GCP provides customers with idle instance recommendations through its Recommendation Hub.

- Power-schedule instances: Configuring custom on/off schedules for instances based on the use case can also serve as an efficient strategy for cost optimization. By combining Cloud Functions, Pub/Sub, and Cloud Scheduler, users can, for example, schedule dev instances to turn off during non-business hours and weekends, generating significant savings.

- Leverage committed use discounts: Leveraging one-year or three-year CUDs for stable workloads can generate significant cost savings.
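The decision logic behind a power schedule, as described in the list above, can be sketched independently of any GCP API. The function `should_be_running` and the business hours are hypothetical. In practice, a scheduled function would evaluate a check like this and then start or stop the instance through the Cloud SQL Admin API, which is not shown here.

```python
from datetime import datetime


def should_be_running(now: datetime, start_hour: int = 8,
                      end_hour: int = 18) -> bool:
    """True on weekdays between start_hour (inclusive) and end_hour (exclusive)."""
    is_weekday = now.weekday() < 5  # Monday is 0, Sunday is 6
    return is_weekday and start_hour <= now.hour < end_hour


# The datetime is treated as local time; no timezone conversion is done.
print(should_be_running(datetime(2024, 6, 3, 10, 0)))   # a Monday morning
print(should_be_running(datetime(2024, 6, 8, 10, 0)))   # a Saturday morning
```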

Leverage external tools

Organizations operating in a hybrid multi-cloud setup typically struggle to manage costs across different environments and cloud services. The native tooling offered by GCP, though adequate, is not comprehensive and lacks the advanced features required by optimization teams.

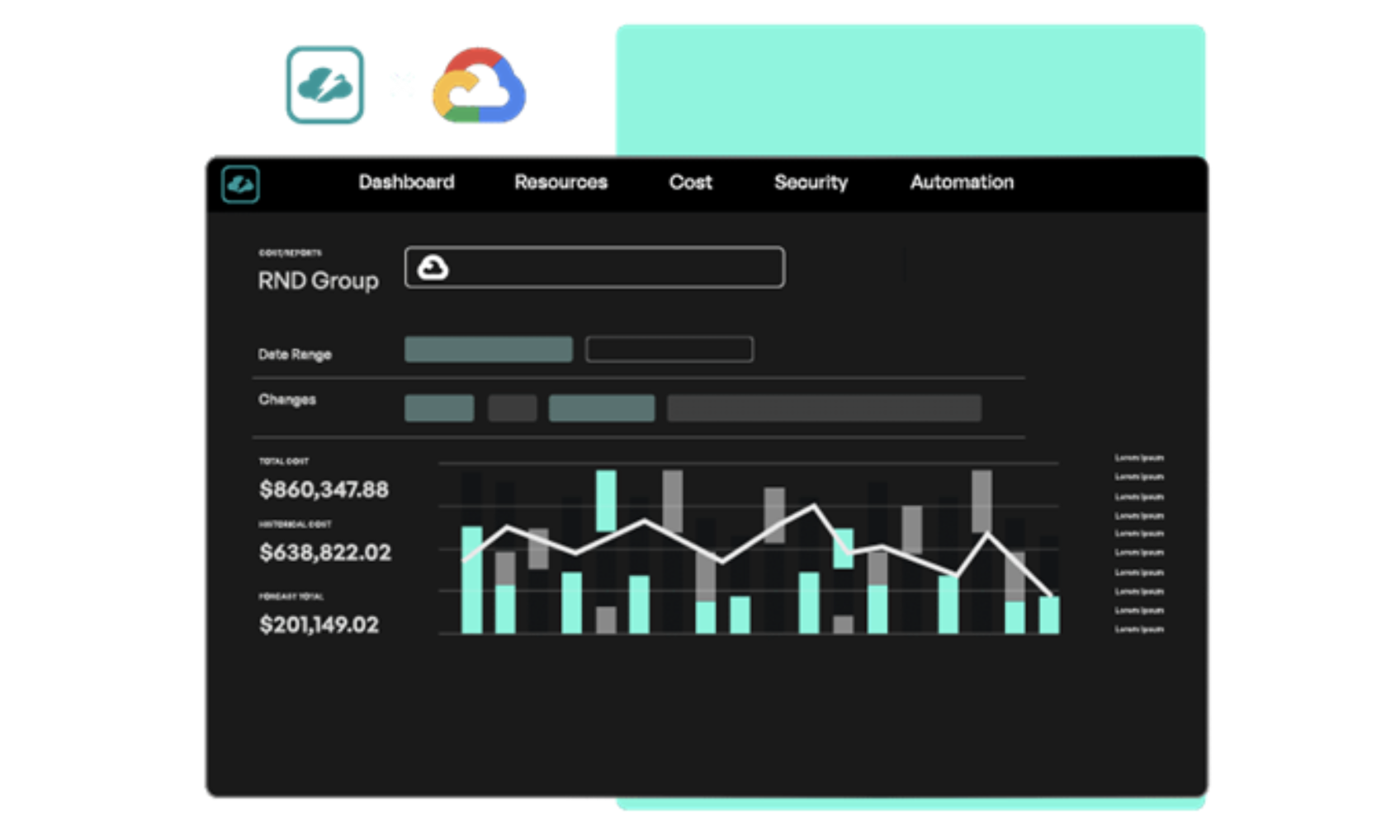

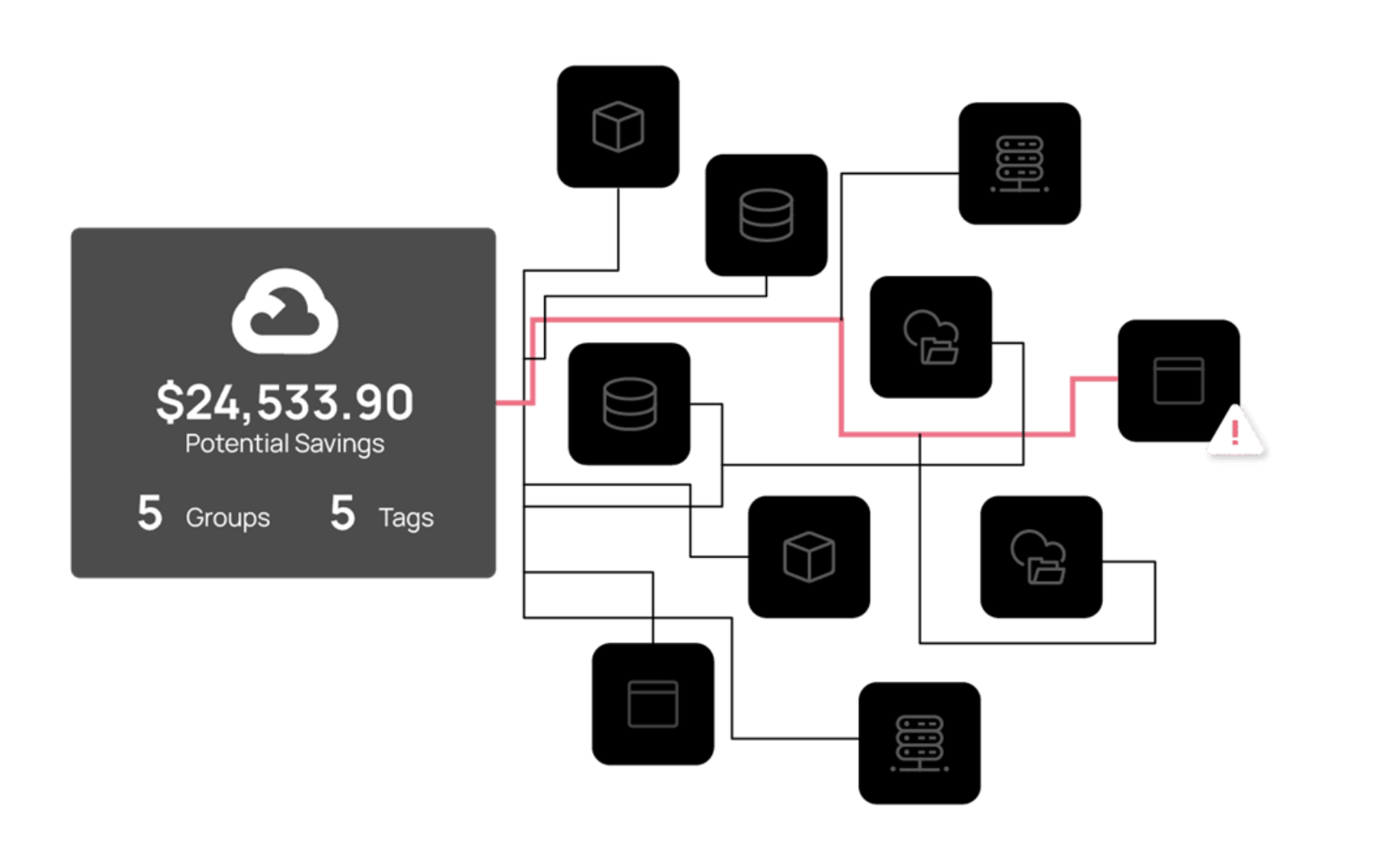

External tooling options like CloudBolt can accelerate the GCP cost optimization journey for organizations by providing fully featured cloud management solutions that support a range of cloud operation use cases through self-service, automation, and financial management. CloudBolt can help augment GCP optimization journeys for businesses in a number of ways.

Managing and tracking opportunities at scale

CloudBolt continuously analyzes historical cost data and resource usage patterns to identify optimization opportunities, guiding users toward the optimal path for remediation. You can manually or automatically assign tasks to their appropriate owners and integrate seamlessly with tools you already use in your workflow.

Wide-ranging automation capabilities

Achieve precise and efficient resource management. Automation capabilities span backups, rightsizing, and power scheduling, with filters tailored for service types, groups, labels, and regions, aligning with diverse GCP cloud infrastructure requirements.

Custom logic

Set specific CPU and memory utilization metrics parameters to align GCE optimization recommendations with your financial strategy. Instance class options allow you to rightsize within a preferred instance class to ensure smooth transitions and unwavering performance for your resources.

Final thoughts

Google Cloud offers many native tools for cloud cost optimization at no extra cost, like Cloud Billing reports, cost table reports, and the Recommendation Hub. While these serve as an excellent starting point for organizations in their cost optimization journeys, there is an inherent need to adopt a more proactive approach to cloud cost management and optimization.

Augmented FinOps is a next-generation FinOps strategy that extends traditional FinOps principles with an approach focused on the use of advanced AI/ML algorithms, intelligent automation, and continuous integration and deployment.

With its advanced FinOps automation capabilities, CloudBolt closes the insight-to-action gap by providing practical automation that seamlessly optimizes resource waste for your GCP estate and helps you achieve peak cloud efficiency. It lets users finally enjoy complete cloud lifecycle optimization by rapidly and confidently turning insights into actions that maximize cloud value and simplify FinOps.
