Focus on FinOps: The alignment paradox

This study examines what, if anything, still stands between current-state FinOps and continuous return on investment in the cloud, and whether gaps remain between FinOps teams and the stakeholders they serve.

Real-World Insights

- How much progress have companies made in creating a culture of FinOps and alignment across teams?

- Are there any lingering gaps between FinOps teams and DevOps/Engineering teams?

- What nirvana should look like for a company with alignment across all teams.

- Recommendations for unlocking the full potential of FinOps.

“The location of service deployment doesn’t matter to us. We’re only interested in what’s most practical and efficient in terms of cost, security, and so on.” This stance, echoed by a Senior VP of Infrastructure at last week’s CloudBolt customer event, resonates with a significant number of IT leaders. For a variety of organizations, the deployment location of a new business service, either on-premise or in the cloud, is inconsequential.

However, in reality, the majority of developers, commercial off-the-shelf (COTS) software vendors, and third-party service providers tend to lean towards the public cloud when given a choice. Often, the simplest answer is also the most fitting – the cloud simply offers a superior experience! It provides a user-friendly, feature-packed platform with a myriad of service options. Nonetheless, it’s not always the ideal choice for every business, and it can bring about certain disadvantages.

To prevent the public cloud from gaining an unfair advantage, tech leaders need to set limitations on its usage. One straightforward solution is creating a checklist or questionnaire to help evaluate if the public cloud is indeed the best choice. A well-constructed pre-deployment questionnaire helps clarify whether the public cloud is necessary and what advantages it actually offers.

Here are some essential questions we suggest including in your assessment to confirm whether your workload requires the benefits of the public cloud, versus using your in-house data center:

1. Business Goals

- What are the main business objectives driving this project or service? How will the business quantify ROI?

- How is your current infrastructure impeding these business objectives?

Field Notes: Each proposed service should flaunt a rock-solid ‘return on investment’ model and crystal-clear criteria for business value. While these often connect to revenue, don’t shy away from linking them to customer or employee experience metrics like NPS or CSAT.

2. Performance Requirements

- What does the proposed architecture look like? Are there components that need technologies not available on-premise? If so, is it possible to refactor the application for on-premise operation?

- What are the performance requirements (latency, throughput, etc.)? Can only public cloud offerings meet or exceed these?

- What performance testing has taken place to confirm the proposed requirements?

Field Notes: Beware, a COTS vendor or service provider might peddle specifications loftier than needed or introduce shiny new technology as performance insurance. Uncertain? Roll up your sleeves and use your own testing methods to confirm the performance claims.

3. Cost Considerations

- Have you carried out a detailed cost analysis comparing maintaining your existing data center infrastructure to transitioning to the public cloud?

- Are there financial considerations or constraints to take into account, such as licensing or others?

Field Notes: It’s alarming how many organizations gloss over the total cost of ownership. Remember, the decision-making process must account for all relevant factors: resource & hosting, maintenance & operations, licensing, commitments, and more.
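As a rough illustration of the point above, the cost categories named in the field note can be summed per year and compared side by side. This is only a sketch: the class name `AnnualCosts`, the function `total_cost_of_ownership`, and every figure are hypothetical placeholders, and a real analysis would include whatever additional categories apply to your environment.

```python
from dataclasses import dataclass


@dataclass
class AnnualCosts:
    """One year of costs for a hosting option (hypothetical categories and units)."""
    resources_and_hosting: float
    maintenance_and_operations: float
    licensing: float
    commitments: float

    def total(self) -> float:
        # Sum every category so nothing is left out of the comparison.
        return (
            self.resources_and_hosting
            + self.maintenance_and_operations
            + self.licensing
            + self.commitments
        )


def total_cost_of_ownership(yearly_costs):
    """Add up the yearly totals over the whole evaluation period."""
    return sum(year.total() for year in yearly_costs)


# Illustrative three-year comparison with made-up numbers.
on_premise = [AnnualCosts(120_000, 60_000, 20_000, 0) for _ in range(3)]
public_cloud = [AnnualCosts(150_000, 25_000, 20_000, 10_000) for _ in range(3)]

on_premise_tco = total_cost_of_ownership(on_premise)
public_cloud_tco = total_cost_of_ownership(public_cloud)
```

Comparing hosting line items alone would favor on-premise here, while the full totals are much closer, which is why every category belongs in the model.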

4. Workload Characteristics

- Are there possibilities to host parts of the workload on-premise, like storage or compute?

- How predictable is your workload? Could a pay-as-you-go model be more cost-effective than maintaining capacity during peak loads?

- What’s the nature of the applications/data being hosted? Are they stateless, stateful, or a combination?

- Do you foresee a need to quickly scale your resources up, down, or out?

- How rapidly must you be able to respond to changes in demand? Why?

Field Notes: Keep in mind, cloud bursting stands ready for workloads expecting predictable capacity surges. If your workload consistently experiences seasonal capacity spikes, hosting on-premise could still be the go-to choice most of the time.
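To make the predictability question concrete, here is a minimal sketch that compares paying only for consumed capacity against provisioning for the peak hour. The rates, the `PricingAssumptions` class, and the function names are assumptions made for illustration, not real provider prices or a complete pricing model.

```python
from dataclasses import dataclass


@dataclass
class PricingAssumptions:
    """Hypothetical prices; replace with figures from your own cost analysis."""
    on_demand_rate: float  # cost per capacity unit per hour, pay-as-you-go
    fixed_rate: float      # cost per provisioned capacity unit per hour


def pay_as_you_go_cost(hourly_demand, prices):
    """Pay only for the capacity actually used in each hour."""
    return sum(hourly_demand) * prices.on_demand_rate


def fixed_capacity_cost(hourly_demand, prices):
    """Provision enough capacity for the peak hour and pay for it every hour."""
    peak = max(hourly_demand)
    return peak * len(hourly_demand) * prices.fixed_rate


def cheaper_option(hourly_demand, prices):
    """Name the cheaper model for this demand profile."""
    payg = pay_as_you_go_cost(hourly_demand, prices)
    fixed = fixed_capacity_cost(hourly_demand, prices)
    return "pay-as-you-go" if payg < fixed else "fixed capacity"


prices = PricingAssumptions(on_demand_rate=0.10, fixed_rate=0.06)
steady_day = [10] * 24            # flat demand all day
spiky_day = [2] * 20 + [10] * 4   # short peak, low baseline

steady_choice = cheaper_option(steady_day, prices)
spiky_choice = cheaper_option(spiky_day, prices)
```

With these made-up rates, the steady profile favors fixed capacity and the spiky one favors pay-as-you-go. The break-even point depends entirely on how far the peak sits above average demand.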

5. Data Security and Compliance

- What are your data security needs? Can the public cloud offerings meet these with the current configuration?

- Are there regulatory or compliance requirements (such as GDPR, HIPAA, PCI DSS) influencing your decision?

Field Notes: Sure, on-premise data centers often get the limelight for easier customization of security measures and compliance protocols. However, as industry standards and regulatory obligations morph, many security teams find public cloud adaptability refreshing.

6. Disaster Recovery and Business Continuity

- What’s your current disaster recovery and business continuity plan?

- Would a public cloud solution improve your resilience, recovery time objectives (RTO), and recovery point objectives (RPO)?

Field Notes: Considering the public cloud? Know that options to power down unused resources can trim down disaster recovery infrastructure costs. But beware of the catch – slower failover and potential business continuity disruption. If your business demands a constantly operational DR infrastructure, the public cloud can turn pricey, and a fixed-cost service might be the smarter move.

7. Integration and Interoperability

- How will your existing systems merge with a public cloud solution?

- What’s your strategy for cloud data management, including data integration, data migration, and interoperability?

Field Notes: Embarking on a multi-cloud, hybrid-cloud journey can feel like navigating a labyrinth. With different cloud providers using unique APIs, management tools, security models, service offerings, pricing structures, and so forth, complexity is inevitable. Keep your compass handy! Many companies should employ enterprise architect leaders to be the savvy navigators capable of ensuring that all these puzzle pieces not only fit together but also align seamlessly with the overall strategic direction.

8. Staff Skillset

- Does your team possess the necessary skills to manage and optimize the architectural components of the deployment?

- If not, what’s your strategy for training your team or recruiting new members with the required skills?

Field Notes: Silos between development and operations teams often lead to the use of new services or SKUs where internal capabilities are missing. Stay sharp – maintain an updated “fly / no-fly list” of approved cloud offerings. Pair this with a robust proof-of-concept process to pragmatically test, learn, and operate new technologies.

9. Exit Strategy

- How long do the workload and its components need to stay on the public cloud?

- If you decide to transition the workload to a different platform or bring it back to your in-house data center in the future, what steps would be necessary?

Field Notes: We might dub this list a pre-deployment framework, but don’t box it in. Use it as a routine spot check. Regular audits of both on-premise and cloud solutions remain critical to keeping your service deployment strategy on its toes.

Choosing between on-premise and public cloud deployment is not a one-size-fits-all decision. It requires a thoughtful analysis of various aspects, including business goals, performance requirements, cost considerations, workload characteristics, security and compliance, disaster recovery, integration, staff skills, and exit strategies. Ultimately, the decision should align with your organizational objectives and optimize resource usage, security, and performance. So, don’t be daunted by the intricacies of the questionnaire – remember, even a simple checklist can go a long way. Something is always better than nothing!

Despite companies spending billions on cloud security each year, cyberattacks and data breaches continue to rise. Companies invest in security monitoring tools to solve their security challenges. Even so, failure to integrate security and compliance requirements at the deployment stage increases vulnerability and leaves security teams with the impossible task of cleaning up the aftermath. While there’s no magic solution, many companies overlook the importance of deployment standardization in proactively reducing the attack surface.

The Growing Problem

Securing your environment isn’t rocket science. With proper planning and the right tools, companies can mitigate most attack avenues. The key is to take a proactive stance and place less emphasis on traditional “seek and find” methodologies. However, this balance becomes harder to achieve as a company grows. Setting up the correct security standards and procedures is feasible in a small environment, but as a company expands, it has more employees, disparate teams, clouds, and tools to manage. The more complex the technology landscape becomes, the harder it is to ensure that every employee is compliant and every workload adheres to necessary guidelines. Each team that sets up its own instances, VMs, and tools can become an attack vector, so the attack surface grows and becomes increasingly challenging to manage at scale.

Integrating End-to-End Standardization

Consider your company. How well do you know your company’s security policies and settings? Do all your developers know? What about your IT team or end-users? Establishing standards and guidelines is just the first step toward a better security posture. The best way to ensure compliance is to keep security out of the hands of users and build automatic guardrails whenever possible.

Here are three simple steps to creating a more secure and standardized cloud security posture:

- Establish Approved Deployment Patterns – The success of security operations lies in the definition (or lack thereof). Even mature companies struggle with tooling and process islands. Standard compliance auditing for in-place workloads is beneficial but not sufficient on its own. It’s essential to ensure that enterprise architecture teams (or similar) define and require approved deployment patterns that factor in cross-functional technology requirements, especially security-related ones. Companies that do this well have clearly established definitions and standards and maintain processes and gates to ensure that only workloads that meet all the criteria make it into production. However, this is easier said than done, as patterns are only as good as their adoption.

- Leverage Automation to Ensure Compliance – The best governance must be implicit, happening automatically as part of the process. With the right tool, you can automate most of the deployment process using approved build architectures, making the delivery of cloud resources (VMs, workloads, XaaS, etc.) easily repeatable and controllable. For example, CloudBolt blueprints allow you to build guardrails that keep each deployment secure while giving teams the necessary flexibility. Instead of relying on each user to know every setting and follow every protocol, security can be built into each blueprint, guaranteeing adherence to standards. No more depending on individual teams or users to be security experts to deploy resources.

- Know What’s in Your Environment – You can’t protect what you don’t know about. While centralizing the deployment process reduces the number of unknown resources, it’s crucial to have total visibility of your technology landscape. Public clouds each have their own approach to this, but oversight of complex hybrid cloud estates is tricky. Luckily, there are plenty of options on the market to help track all details of your cloud configuration. With the right choice in place, your team can better:

- Detect Anomalies: With complete visibility, it’s easier to identify deviations from established security standards and take action to resolve them.

- Monitor Compliance: Organizations can use a centralized dashboard to monitor their cloud environment for compliance with security controls, policies, regulations, and industry standards.

- Manage Threats: A complete overview of the cloud environment helps organizations detect and respond to potential threats more efficiently, minimizing the impact of security incidents.

By having the proper visibility, you can proactively address any issues that may arise instead of just reacting to emergencies.
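The idea of an approved deployment pattern enforced automatically can be sketched in a few lines. This is not CloudBolt’s blueprint API. The `DeploymentPattern` class, the request dictionary keys, and the specific rules are all hypothetical, chosen only to show how a gate might reject non-compliant requests before they reach production.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DeploymentPattern:
    """Hypothetical approved pattern; field names are illustrative only."""
    name: str
    allowed_regions: frozenset
    required_tags: frozenset
    require_encryption: bool = True
    allow_public_ip: bool = False


def find_violations(request: dict, pattern: DeploymentPattern) -> list:
    """Return human-readable violations; an empty list means compliant."""
    violations = []
    if request.get("region") not in pattern.allowed_regions:
        violations.append(f"region {request.get('region')!r} is not approved")
    missing = pattern.required_tags - set(request.get("tags", {}))
    if missing:
        violations.append(f"missing tags: {sorted(missing)}")
    if pattern.require_encryption and not request.get("encrypted", False):
        violations.append("disk encryption is required")
    if request.get("public_ip", False) and not pattern.allow_public_ip:
        violations.append("public IP addresses are not allowed")
    return violations


web_tier = DeploymentPattern(
    name="standard-web-tier",
    allowed_regions=frozenset({"us-east", "eu-west"}),
    required_tags=frozenset({"owner", "cost-center"}),
)

good_request = {
    "region": "us-east",
    "tags": {"owner": "team-a", "cost-center": "1234"},
    "encrypted": True,
}
bad_request = {"region": "ap-south", "tags": {"owner": "team-b"}, "public_ip": True}

good_result = find_violations(good_request, web_tier)
bad_result = find_violations(bad_request, web_tier)
```

Returning the full list of violations, rather than failing on the first one, gives the requesting team everything they need to fix in a single pass.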

Key Takeaways

As businesses become increasingly vulnerable to cyberattacks, standardization is crucial in securing a company’s cloud environment. A comprehensive approach to security includes establishing approved deployment patterns, utilizing automation, and having a centralized visibility approach. Companies that achieve this can reduce the attack surface, improve compliance with security standards, and maintain a secure cloud environment.

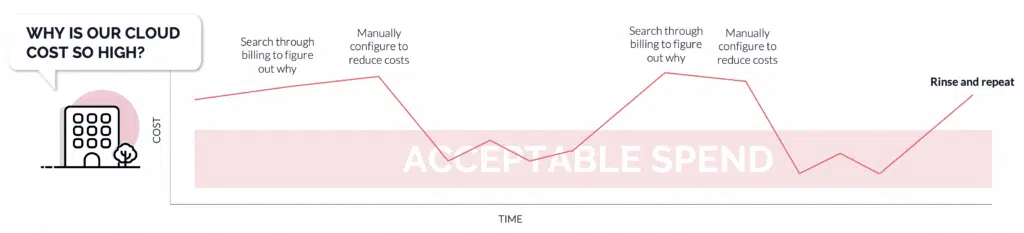

A cycle of spikes and fixes

Is your company constantly faced with unexpected and rising cloud costs? Does it go through a seemingly endless cycle of cost spikes, manually poring over every cloud bill across numerous clouds and teams to find the source and get the problem under control, only to have it happen again a few months later?

There is a better, more proactive way to optimize cloud spending, one that doesn’t require accountants and IT teams to manually comb through pages and pages of seemingly irreconcilable cloud bills to find where they can squeeze some savings out of an already tight cloud budget.

The root cause of the problem

The real reason companies find themselves having to repeatedly deal with unexpected multi-cloud spending is that they are set up only to react to it. Companies do their best early on to plan their usage and budgets so they can maximize their savings from reserved instances or savings plans, but once set up, they don’t have the visibility or the right tools to keep multi-cloud costs continuously optimized. When a spike in usage inevitably happens, companies find themselves scrambling to bring it back down to acceptable levels through a labor-heavy manual process.

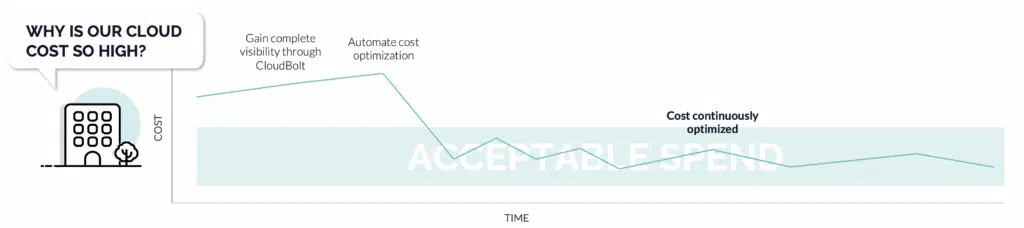

How to optimize cloud costs and keep them low

With an ideal solution in place, spikes in cloud spending shouldn’t be happening in the first place. A solid FinOps framework is a great place to start, but you should also have the visibility to understand where the inefficiencies are coming from as well as the right tools to ensure that cost optimization doesn’t become a manual process.

Start with FinOps – The first step in optimizing cloud costs is to create an environment where engineering, finance, and business teams all understand that they need to take ownership of their cloud usage and collaborate to ensure they get maximum business value from their investments. A good FinOps strategy should help keep unexpected costs to a minimum, but a cloud management tool that takes the FinOps framework into account should be considered as well.

Gain better visibility – You can’t optimize what you can’t see, and visibility becomes harder as a company scales. The more clouds, tools, and teams you deal with, the harder it is to track where the spikes in costs might be coming from. Ideally, you want a tool that sits above all clouds and gives you single-pane-of-glass visibility into cloud spend across your entire company. You should not only know when spend is rising and where it’s coming from but also be able to easily identify where any potential savings might be.

Build in guardrails and tagging – Instead of relying on each team to follow every guideline on compliance, spending, and tagging for every instance, the ideal solution is to have them built into the blueprint at the outset, removing the need for any human input at all. This ensures that all instances are properly configured to match company guidelines, and everything is properly tagged for consistent tracking and discoverability.

Automate, automate, automate – The key to staying proactive is to automate cost-saving measures. You should have a tool to set quotas and spending limits for each instance; VMs should be on an automated power schedule that turns them on or off based on usage to optimize spend; and each VM should have an expiration date, so owners must take direct action to keep it active before it wastes precious resources doing nothing. You should also be automatically notified before cloud spending becomes a problem, so you can take proper action as soon as possible.

With proper visibility and automations in place, you can ensure that cloud spend always stays optimized without constantly having to dive into your cloud bills to keep cloud spending under control.
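The automated measures described above (expiration dates, power schedules, and early spend notifications) can each be reduced to a small policy check. The sketch below is illustrative only: the function names are hypothetical, a fixed weekday time window stands in for usage-based power scheduling, and the spend projection is a naive straight-line estimate rather than a real forecast.

```python
from datetime import date, datetime, time


def is_expired(expires_on: date, today: date) -> bool:
    """A VM past its expiration date should be reclaimed unless the owner renews it."""
    return today >= expires_on


def should_be_running(now: datetime, start: time, stop: time,
                      weekdays_only: bool = True) -> bool:
    """Simple power schedule: on during a daily window, off otherwise."""
    if weekdays_only and now.weekday() >= 5:  # 5 = Saturday, 6 = Sunday
        return False
    return start <= now.time() < stop


def projected_month_end_spend(spend_to_date: float, day_of_month: int,
                              days_in_month: int) -> float:
    """Straight-line projection of spend for the whole month."""
    return spend_to_date / day_of_month * days_in_month


def needs_budget_alert(spend_to_date: float, day_of_month: int,
                       days_in_month: int, budget: float,
                       threshold: float = 0.9) -> bool:
    """Alert when projected spend reaches a share of the budget (assumed 90%)."""
    projected = projected_month_end_spend(spend_to_date, day_of_month, days_in_month)
    return projected >= budget * threshold


office_hours = (time(8, 0), time(18, 0))
monday_morning = datetime(2024, 1, 8, 10, 0)
saturday_morning = datetime(2024, 1, 6, 10, 0)

run_monday = should_be_running(monday_morning, *office_hours)
run_saturday = should_be_running(saturday_morning, *office_hours)
alert = needs_budget_alert(spend_to_date=500, day_of_month=10,
                           days_in_month=30, budget=1200)
```

Because the alert is based on projected rather than actual spend, it fires on day 10 in this example, well before the budget is exhausted.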

Today marks the next phase in CloudBolt’s evolution. Craig Hinkley has joined the company as the new Chief Executive Officer and will build on the company’s solid foundation to further CloudBolt’s growth.

Hinkley has a deep track record of success in leading software businesses and driving rapid expansion while also accelerating innovation, scale, and value. Most recently, Hinkley was CEO of WhiteHat Security, where he led a technological and business transformation that enabled the company to realize significant value for its employees and shareholders. Now he looks to bring further focus to CloudBolt and its offerings.

“Craig’s experience in rapid expansion of mid-stage technology companies and successful exits through a relentless focus on customers, company, and culture will enable CloudBolt to further accelerate its trajectory of success,” said Thomas Krane, Managing Director at Insight Partners, the private equity firm that owns CloudBolt. “We are more optimistic than ever regarding CloudBolt’s opportunity to impact the market.”

With a particular passion for customer-centricity, Hinkley will concentrate on helping CloudBolt to become even more valuable and essential to companies leveraging hybrid cloud through deeper innovation, faster development cycles, and quantifiable customer success metrics.

“I am proud and honored to take on the CEO role at CloudBolt as it enters its next chapter,” said Hinkley. “CloudBolt’s potential is enormous, and I am eager to see how quickly we can continue to evolve as a solution provider. The company enjoys keen advantages in terms of advanced Cloud Financial Management, Cloud Automation and Orchestration, and Governance capabilities. My mission is to bring particular focus to our areas of greatest opportunity and help the organization fully capitalize on them. I could not be more excited about the road ahead and what this team can achieve together for our customers.”

Hinkley replaces Jeff Kukowski, CloudBolt’s former CEO, who led the company to growth despite COVID shutdowns and economic uncertainty. Kukowski is helping to ensure a smooth transition and will then pursue other opportunities.

“I am particularly grateful to Jeff for all his contributions in getting CloudBolt to where it is today,” Hinkley said. “He has been a wonderful resource in helping me to quickly ramp up and hit the ground running. He is a true professional and I wish him all the best in his future endeavors.”

Craig Hinkley lives near Seattle, WA with his wife of 28 years, and enjoys hiking in the great outdoors in the Pacific Northwest.